I have some radioactive decay data with uncertainties in both x and y. The graph itself is good to go, but I need to fit and plot the exponential decay curve and print the fit report, so that I can find the half-life and the reduced chi^2.

The code for the graph is:

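import matplotlib.pyplot as plt

from numpy import exp, sqrt

fig, ax = plt.subplots(figsize=(14, 8))

# xerr/yerr only draw the error bars; they do not affect any fit
ax.errorbar(ts, amps, xerr=2, yerr=sqrt(amps), fmt="ko-", capsize=5, capthick=2, elinewidth=3, markersize=5)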

plt.xlabel('Time /s', fontsize=14)

plt.ylabel('Counts Recorded in the Previous 15 seconds', fontsize=16)

plt.title("Decay curve of P-31 by $β^+$ emission", fontsize=16)

The model I am using (I admit I'm not confident in my programming here) is:

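from lmfit import Model

def expdecay(x, t, A):

    return A*exp(-x/t)

# wrap the function in an lmfit Model; x is the independent variable
emodel = Model(expdecay)

decayresult = emodel.fit(amps, x=ts, t=150, A=140)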

ax.plot(ts, decayresult.best_fit, 'r-', label='best fit')

print(decayresult.fit_report())

But I don't think this takes the uncertainties into account; it just plots them on the graph. I would like the exponential decay curve to be fitted with the uncertainties taken into account, and to get back the half-life (obtained from t in this case) and the reduced chi^2 with their respective uncertainties.
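For reference, assuming the model A*exp(-x/t) above, t is the mean lifetime rather than the half-life itself. The two differ by a factor of ln 2, and the reduced chi^2 is the weighted sum of squared residuals divided by the number of degrees of freedom. The symbols here are illustrative notation, not taken from the fit report: sigma_i is the y uncertainty of point i, N is the number of points, and p = 2 is the number of fitted parameters.

$$
t_{1/2} = t \ln 2, \qquad \sigma_{t_{1/2}} = \sigma_t \ln 2, \qquad
\chi^2_\nu = \frac{1}{N - p} \sum_{i=1}^{N} \left( \frac{y_i - A e^{-x_i/t}}{\sigma_i} \right)^2
$$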

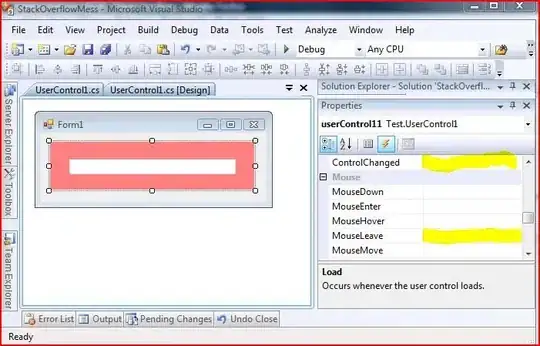

I am aiming for something like the picture below, but with the uncertainties accounted for in the fitting:

Using the suggested weights=1/sqrt(amps) and the full data set, I get:

I imagine this is the best fit possible from this data (reduced chi^2 of 3.89). I was hoping it'd give me t = 150 s, but hey, that one is on the experiment. Thanks for the help, all.