Our AR device is based on a camera with pretty strong optical zoom. We measure the distortion of this camera using classical camera-calibration tools (checkerboards), both through OpenCV and the GML Camera Calibration tools.

At higher zoom levels (I'll use 249 out of 255 as an example) we measure the following camera parameters at full HD resolution (1920x1080):

fx = 24545.4316 fy = 24628.5469 cx = 924.3162 cy = 440.2694

For the radial and tangential distortion we measured 4 values:

k1 = 5.423406 k2 = -2964.24243 p1 = 0.004201721 p2 = 0.0162647516

We are not sure how to interpret (read: implement) those extremely large values for k1 and k2. Using OpenCV's classic "undistort" operation to rectify the image using these values seems to work well. Unfortunately this is (much) too slow for realtime usage.
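To put the size of k1 and k2 in context, here is a rough sketch (Python, not part of our pipeline) that evaluates the two radial terms at the four image corners using the numbers above. The helper name `radial_terms` is made up for illustration. Because fx and fy are so large, the normalized radius at the corners is tiny, so the products k1·r² and k2·r⁴ should stay small even though the coefficients themselves look huge.

```python
# Intrinsics as measured above (zoom 249/255, 1920x1080).
FX, FY = 24545.4316, 24628.5469
CX, CY = 924.3162, 440.2694
K1, K2 = 5.423406, -2964.24243
WIDTH, HEIGHT = 1920, 1080


def radial_terms(px, py):
    """Return (r2, k1*r2, k2*r2^2) for a pixel, using normalized coordinates.

    Hypothetical helper for illustration only.
    """
    x = (px - CX) / FX
    y = (py - CY) / FY
    r2 = x * x + y * y
    return r2, K1 * r2, K2 * r2 * r2


corners = [(0, 0), (WIDTH, 0), (0, HEIGHT), (WIDTH, HEIGHT)]
for corner in corners:
    r2, t1, t2 = radial_terms(*corner)
    # scale is the factor 1 + k1*r2 + k2*r2^2 applied to the normalized point.
    print(f"{corner}: r2={r2:.6f}  k1*r2={t1:+.6f}  "
          f"k2*r2^2={t2:+.6f}  scale={1 + t1 + t2:.6f}")
```

If this sketch is right, the two terms largely cancel and the radial scale factor stays within roughly a percent of 1 across the whole frame.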

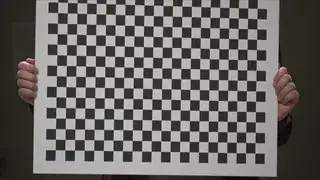

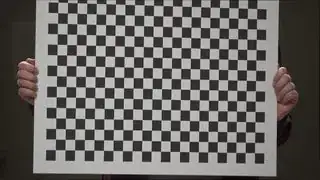

The thumbnails below look similar, clicking them will display the full size images where you can spot the difference:

Because undistorting every frame is too slow, we want to take the opposite approach: leave the camera footage distorted and apply a matching distortion to our 3D scene using shaders. Following the OpenCV documentation, and this accepted answer in particular, the distorted position for a corner point (0, 0) would be:

    // To relative coordinates
    double x = (point.X - cx) / fx; // -960 / 24545 = -0.03911
    double y = (point.Y - cy) / fy; // -540 / 24628 = -0.02193
    double r2 = x*x + y*y; // 0.002010

    // Radial distortion
    // -0.03911 * (1 + 5.423406 * 0.002010 + -2964.24243 * 0.002010 * 0.002010) = -0.039067
    double xDistort = x * (1 + k1 * r2 + k2 * r2 * r2);
    // -0.02193 * (1 + 5.423406 * 0.002010 + -2964.24243 * 0.002010 * 0.002010) = -0.021906
    double yDistort = y * (1 + k1 * r2 + k2 * r2 * r2);

    // Tangential distortion
    // ... left out for brevity

    // Back to absolute coordinates.
    xDistort = xDistort * fx + cx; // -0.039067 * 24545.4316 + 924.3162 = -34.6002 !!!
    yDistort = yDistort * fy + cy; // -0.021906 * 24628.5469 + 440.2694 = -99.2435 !!!
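As a cross-check, here is a minimal Python sketch of the same computation that plugs in the measured cx and cy in both directions. Note that the hand-computed comments above substitute -960 and -540 (the image center) in the first step, while the code as written subtracts cx and cy. The function name `distort_point` is made up for illustration; the tangential terms follow the form given in the OpenCV documentation for the (k1, k2, p1, p2) model, which maps ideal (undistorted) coordinates to distorted ones.

```python
def distort_point(px, py, fx, fy, cx, cy, k1, k2, p1=0.0, p2=0.0):
    """Map an ideal (undistorted) pixel to its distorted pixel position.

    Hypothetical helper; sketch of the OpenCV k1, k2, p1, p2 model.
    """
    # To normalized (relative) coordinates.
    x = (px - cx) / fx
    y = (py - cy) / fy
    r2 = x * x + y * y

    # Radial scale factor, shared by both axes.
    radial = 1.0 + k1 * r2 + k2 * r2 * r2

    # Radial plus tangential distortion.
    x_d = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    y_d = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y

    # Back to absolute pixel coordinates.
    return x_d * fx + cx, y_d * fy + cy


# Measured values from the question.
params = dict(fx=24545.4316, fy=24628.5469, cx=924.3162, cy=440.2694,
              k1=5.423406, k2=-2964.24243)

radial_only = distort_point(0.0, 0.0, **params)
full_model = distort_point(0.0, 0.0, **params,
                           p1=0.004201721, p2=0.0162647516)
print("radial only:", radial_only)
print("radial + tangential:", full_model)
```

Under these assumptions the corner should move by a pixel or two at most, not by tens of pixels, which would be much closer to what OpenCV's undistorted image suggests.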

These large pixel displacements (about 35 and 99 pixels at the upper left corner) seem overly warped and do not correspond to the undistorted image OpenCV generates.

So the specific question is: what is wrong with the way we interpreted the values we measured, and what should the correct code for distortion be?