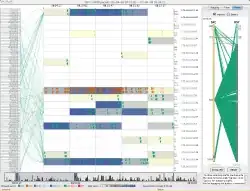

By default, NumPy stores an ndarray's data as one contiguous block of memory. The elements are laid out sequentially, each one a fixed number of bytes (the element size, `itemsize`) after the previous one.
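A minimal sketch for checking this from Python. The `dtype=np.int64` argument is an assumption added here (the default integer type varies by platform), so that the byte counts are the same everywhere:

```python
import numpy as np

# Explicit int64 so the byte counts do not depend on the platform's default integer
a = np.arange(16, dtype=np.int64).reshape(2, 2, 4)

print(a.itemsize)               # bytes per element: 8
print(a.nbytes)                 # bytes in the whole buffer: 16 * 8 = 128
print(a.flags['C_CONTIGUOUS'])  # True: one row-major block with no gaps
```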

(images referenced from this excellent SO post)

So if your 3D array looks like this:

```python
import numpy as np

np.arange(0, 16).reshape(2, 2, 4)
# array([[[ 0,  1,  2,  3],
#         [ 4,  5,  6,  7]],
#
#        [[ 8,  9, 10, 11],
#         [12, 13, 14, 15]]])
```

Then in memory it is stored as a single flat run of the 16 values 0, 1, 2, ..., 15, in exactly that order.
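One way to confirm that `reshape` only changes how the buffer is indexed, not where the values live, is the short sketch below. `np.shares_memory` and `ravel(order='K')` are standard NumPy calls; the variable names are illustrative:

```python
import numpy as np

flat = np.arange(16, dtype=np.int64)
a = flat.reshape(2, 2, 4)

# reshape moved no data: `a` is a view onto the same buffer as `flat`
print(np.shares_memory(a, flat))   # True

# order='K' reads the elements in the order they actually sit in memory
print(a.ravel(order='K'))          # the values 0 through 15, in order
```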

When retrieving an element (or a block of elements), NumPy uses the array's strides: the number of bytes it has to step in memory to reach the next element along each axis. Assuming 8-byte integers (`int64`, the default on most 64-bit Linux and macOS builds), the example above steps 8 bytes along axis=2, 8*4 = 32 bytes along axis=1 (skipping one row of 4 elements), and 8*8 = 64 bytes along axis=0 (skipping one 2x4 block).
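The stride arithmetic can be checked directly. This sketch assumes `int64` elements, and `byte_offset` is a hypothetical helper written for illustration, not a NumPy function:

```python
import numpy as np

a = np.arange(16, dtype=np.int64).reshape(2, 2, 4)

# Bytes to step along axis 0, axis 1, axis 2
print(a.strides)  # (64, 32, 8)

def byte_offset(arr, index):
    """Hypothetical helper: byte offset of arr[index] from the start of its buffer."""
    return sum(i * s for i, s in zip(index, arr.strides))

# Element [1, 0, 2] sits 1*64 + 0*32 + 2*8 = 80 bytes in, i.e. item number 80 // 8 = 10
off = byte_offset(a, (1, 0, 2))
print(off, off // a.itemsize, a[1, 0, 2])  # 80 10 10
```

Since `np.arange` filled the buffer with 0 to 15, the item number reached by the stride arithmetic equals the stored value for every index.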

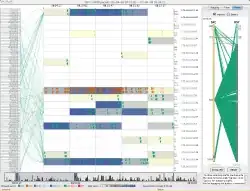

With this in mind, let's look at what dimensions mean in NumPy.

```python
import numpy as np

arr2d = np.array([[1, 2, 3], [4, 5, 6], [7, 8, 9]])
arr3d = np.array([[[1, 2, 3], [4, 5, 6]], [[7, 8, 9], [10, 11, 12]]])

print(arr2d.shape, arr3d.shape)
# (3, 3) (2, 2, 3)
```

These can be considered a 2D matrix and a 3D tensor, respectively. Here is an intuitive diagram showing how this would look.

A 1D NumPy array (`ndim=1`) is a vector, a 2D array is a matrix, and a 3D array is a rank-3 tensor, which can be imagined as a cube. The number of values it can store is `arr.shape[0] * arr.shape[1] * arr.shape[2]` (also available as `arr.size`), which for `arr3d` above is 2*2*3 = 12.
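A quick check of the element count for `arr3d`, using only the standard `ndim`, `shape`, and `size` attributes:

```python
import numpy as np

arr3d = np.array([[[1, 2, 3], [4, 5, 6]], [[7, 8, 9], [10, 11, 12]]])

print(arr3d.ndim)                                        # 3 axes
print(arr3d.shape[0] * arr3d.shape[1] * arr3d.shape[2])  # 12
print(arr3d.size, np.prod(arr3d.shape))                  # 12 12
```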

```
Vector     (n,)          -> (axis0,)                      # elements
Matrix     (m, n)        -> (axis0, axis1)                # rows, columns
3D tensor  (l, m, n)     -> (axis0, axis1, axis2)
4D tensor  (l, m, n, o)  -> (axis0, axis1, axis2, axis3)
```
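To see how the number of axes lines up with the shape tuple, here is a sketch using zero-filled arrays; the sizes 2, 3, 4 and 5 are arbitrary placeholders, not from the original:

```python
import numpy as np

# One example per shape pattern listed above
examples = {
    "(n,)": np.zeros(5),
    "(m, n)": np.zeros((3, 5)),
    "(l, m, n)": np.zeros((2, 3, 5)),
    "(l, m, n, o)": np.zeros((4, 2, 3, 5)),
}

for pattern, arr in examples.items():
    # ndim counts the axes; shape[i] is the length along axis i
    print(f"{pattern:14} ndim={arr.ndim} shape={arr.shape}")
```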