There is a similar question, but it is not as detailed and has no exact solution. I want to create a single panorama image from video frames. To do that, I first need to extract a minimal set of non-sequential (non-duplicate) video frames. A demo video file is uploaded here.

What I Need

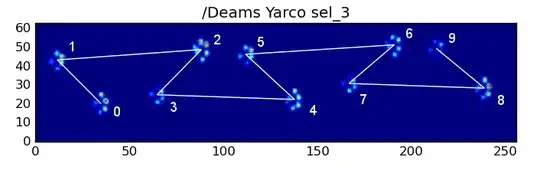

I need a mechanism that produces non-sequential video frames that can also be used to create a panorama image. See the sample image. To create a panorama, each input frame must share at least a minimal overlap region with its neighbour. Otherwise the panorama cannot be built.

So, suppose my video frames come in the following order:

A, A, A, B, B, B, B, C, C, A, A, C, C, C, B, B, B ...

To create a panorama image, I need something like the following. It is a reduced set of sequential (adjacent) frames in which each consecutive pair still overlaps by a minimal amount:

```
A, A,B, B,C, C,A, A,C, C,B, ...
   ^^^  ^^^  ^^^  ^^^  ^^^
   each marked pair is an overlap region
```
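One way to produce such a sequence automatically is to track how far the view has moved since the last kept frame. A new frame is kept just before that distance would exceed the allowed step. The sketch below is a minimal version under these assumptions:

- The per-frame displacement along the pan direction is already known, for example estimated by phase correlation or optical flow.
- The motion is essentially one-dimensional.

`select_keyframes` and its parameters are hypothetical names, not part of any library.

```python
from typing import List, Sequence


def select_keyframes(shifts: Sequence[float], frame_extent: float,
                     overlap: float = 0.3) -> List[int]:
    """Return indices of frames to keep so that consecutive picks overlap.

    shifts[i] is the (hypothetical) signed displacement in pixels between
    frame i and frame i + 1 along the pan direction; frame_extent is the
    frame size along that direction; overlap is the minimum fraction of
    frame_extent that consecutive picks should share.
    """
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    max_step = (1.0 - overlap) * frame_extent  # largest allowed gap between picks
    keep = [0]      # always keep the first frame
    offset = 0.0    # signed position of frame i relative to the last kept frame
    for i, s in enumerate(shifts):
        # Moving on to frame i + 1 would exceed the step, so frame i is the
        # farthest frame that still overlaps enough: keep it.
        if abs(offset + s) > max_step and keep[-1] != i:
            keep.append(i)
            offset = 0.0
        offset += s
    last = len(shifts)  # index of the final frame
    if keep[-1] != last:
        keep.append(last)  # keep the end of the sweep as well
    return keep


# Constant 10 px/frame pan, 100 px frames, 25 % overlap -> every 7th frame.
print(select_keyframes([10.0] * 20, frame_extent=100, overlap=0.25))  # [0, 7, 14, 20]
```

Because `offset` is signed, a camera that moves back over an area it has already covered (A, B, A, ...) does not trigger new picks until it leaves the last kept frame's footprint.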

What I've Tried and Where I'm Stuck

A demo video clip is linked above. To get non-sequential video frames, I mainly rely on ffmpeg.

Trial 1 (Ref.)

```bash
ffmpeg -i check.mp4 -vf mpdecimate,setpts=N/FRAME_RATE/TB -map 0:v out.mp4
```
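`mpdecimate` drops frames that barely differ from the previously kept frame. A rough numpy analogue of that idea clarifies what the filter does and does not guarantee. It is not ffmpeg's actual block-based algorithm, and the function name and threshold are hypothetical. The filter removes near-duplicates, but nothing in it controls how much consecutive kept frames overlap.

```python
import numpy as np


def drop_near_duplicates(frames, threshold=2.0):
    """Return indices of frames that differ enough from the last kept frame.

    `threshold` is a hypothetical mean absolute difference in 8-bit
    intensity units; frames at or below it count as duplicates.
    """
    kept = []
    last = None
    for idx, frame in enumerate(frames):
        f = frame.astype(np.float32)  # avoid uint8 wrap-around when subtracting
        if last is None or np.mean(np.abs(f - last)) > threshold:
            kept.append(idx)
            last = f
    return kept
```

A frame that differs slightly more than the threshold is kept whether it overlaps the previous kept frame by 99 % or by 0 %. That is why this kind of decimation alone cannot guarantee a stitchable sequence.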

After that, I split `out.mp4` into individual frames with OpenCV:

```python
import cv2
from pathlib import Path

vframe_dir = Path("vid_frames/")
vframe_dir.mkdir(parents=True, exist_ok=True)

vidcap = cv2.VideoCapture("out.mp4")
count = 0
while True:
    success, image = vidcap.read()
    if not success:  # end of stream (or read error)
        break
    # Zero-padded names keep the files in frame order when sorted.
    cv2.imwrite(str(vframe_dir / f"frame{count:05d}.jpg"), image)
    count += 1
vidcap.release()
```
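`os.listdir` returns names in arbitrary order, and plain string sorting places `frame10.jpg` before `frame2.jpg`. The panorama needs frames in sequence. For files named with an unpadded counter (`frame%d.jpg`), a natural-sort key can restore frame order. `natural_key` is a hypothetical helper, sketched here:

```python
import re


def natural_key(name):
    """Sort key that compares digit runs numerically: frame2 < frame10."""
    # re.split with a capture group alternates text and digit chunks,
    # so the chunk types line up between any two names.
    return [int(tok) if tok.isdigit() else tok for tok in re.split(r"(\d+)", name)]


print(sorted(["frame10.jpg", "frame2.jpg", "frame1.jpg"], key=natural_key))
# ['frame1.jpg', 'frame2.jpg', 'frame10.jpg']
```

In the frame loop this would be used as `sorted(os.listdir(vframe_dir), key=natural_key)`.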

Next, I rotated these saved images to a horizontal orientation, because my video is shot vertically:

```python
import os
from pathlib import Path

import cv2
from tqdm import tqdm

src_dir = Path("vid_frames/")  # frames saved by the previous step
vframe_dir_rot = Path("vframe_dir_rot/")
vframe_dir_rot.mkdir(parents=True, exist_ok=True)

for each_img in tqdm(sorted(os.listdir(src_dir))):
    image = cv2.imread(str(src_dir / each_img))  # BGR; channel order is irrelevant for rotation
    image = cv2.rotate(image, cv2.ROTATE_180)  # cv2.cv2.ROTATE_180 fails on recent opencv-python
    image = cv2.rotate(image, cv2.ROTATE_90_CLOCKWISE)
    cv2.imwrite(str(vframe_dir_rot / each_img), image)
```
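`ROTATE_180` followed by `ROTATE_90_CLOCKWISE` amounts to a single 90° counter-clockwise turn. So the two `cv2.rotate` calls can be replaced by one call, `cv2.rotate(image, cv2.ROTATE_90_COUNTERCLOCKWISE)`. A quarter turn also only moves pixels, so swapping BGR/RGB before and after it changes nothing. The numpy check below illustrates the equivalence. In `np.rot90`, `k=1` turns counter-clockwise and `k=-1` turns clockwise. The helper names are hypothetical.

```python
import numpy as np


def rotate_like_original(img):
    """numpy equivalent of cv2.ROTATE_180 followed by cv2.ROTATE_90_CLOCKWISE."""
    rotated_180 = np.rot90(img, k=2)     # same pixels as cv2.ROTATE_180
    return np.rot90(rotated_180, k=-1)   # clockwise quarter turn


def rotate_once(img):
    """Single counter-clockwise quarter turn (cv2.ROTATE_90_COUNTERCLOCKWISE)."""
    return np.rot90(img, k=1)


img = np.arange(2 * 3 * 3).reshape(2, 3, 3)  # tiny H x W x C "image"
assert np.array_equal(rotate_like_original(img), rotate_once(img))
```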

This method (with ffmpeg) gives reasonable output, but the output is unsuitable for creating the panorama image. The resulting frames do not overlap sequentially, so the panorama can't be generated.
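What is missing here is a measure of how far the view moved between frames, not just whether two frames differ. Phase correlation is one standard way to estimate a pure translation between two images. Below is a minimal numpy sketch under these assumptions:

- There is no rotation, scale or perspective change between frames.
- The frames are grayscale and of equal size.

`estimate_shift` is a hypothetical name. OpenCV also provides `cv2.phaseCorrelate`, which returns a sub-pixel shift together with a response value. On real frames, a window (for example from `cv2.createHanningWindow`) usually reduces edge effects, which this sketch ignores.

```python
import numpy as np


def estimate_shift(prev, curr):
    """Estimate the integer (dy, dx) by which image content moved from
    `prev` to `curr`, using phase correlation (assumes pure translation)."""
    f_prev = np.fft.fft2(prev.astype(np.float64))
    f_curr = np.fft.fft2(curr.astype(np.float64))
    cross = f_curr * np.conj(f_prev)
    cross /= np.abs(cross) + 1e-12          # keep only the phase difference
    corr = np.real(np.fft.ifft2(cross))     # sharp peak at the translation
    dy, dx = np.unravel_index(np.argmax(corr), corr.shape)
    h, w = corr.shape
    # Peaks past the midpoint wrap around and correspond to negative shifts.
    if dy > h // 2:
        dy -= h
    if dx > w // 2:
        dx -= w
    return int(dy), int(dx)


rng = np.random.default_rng(0)
prev = rng.random((64, 64))
curr = np.roll(prev, shift=(5, -3), axis=(0, 1))  # content moved down 5, left 3
print(estimate_shift(prev, curr))  # (5, -3)
```

Summing these per-frame shifts along the pan direction gives the displacement information needed to decide which frames to keep with a guaranteed overlap.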

Trial 2 (Ref.)

```bash
ffmpeg -i check.mp4 -vf decimate=cycle=2,setpts=N/FRAME_RATE/TB -map 0:v out.mp4
```

This didn't work at all.
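ffmpeg's `decimate` filter drops duplicated frames at regular intervals. With `cycle=2`, one frame out of every two is dropped, so the clip is thinned by a fixed ratio regardless of how far the camera moved. Fixed-ratio thinning can only preserve overlap if the camera pans at a roughly constant speed. Under that assumption, the largest safe stride follows from three quantities: the frame size along the pan, the pan speed in pixels per frame, and the desired overlap. The stride is `floor((1 - overlap) * frame_extent / pan_speed)`. Below is a small sketch of that formula; `max_stride` is a hypothetical helper.

```python
import math


def max_stride(frame_extent, pan_speed, overlap=0.3):
    """Largest frame stride that keeps at least `overlap` (fraction of the
    frame) between kept frames, assuming a constant pan speed in px/frame."""
    if pan_speed <= 0:
        raise ValueError("pan_speed must be positive")
    return max(1, math.floor((1.0 - overlap) * frame_extent / pan_speed))


print(max_stride(1920, 20, overlap=0.3))  # 67
```

For example, 1920 px frames panned at 20 px per frame with 30 % overlap allow a stride of 67 frames. If the speed varies, a stride chosen for the average speed will lose overlap wherever the camera moves faster.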

Trial 3

```bash
ffmpeg -i check.mp4 -ss 0 -qscale 0 -f image2 -r 1 out/images%5d.png
```

No luck either. However, this last ffmpeg command came by far the closest, though it still wasn't enough. Compared with the others, it gave me a small number of non-duplicate frames (good). But it still kept frames I don't need, so I had to pick the desired frames manually. With those hand-picked frames, the OpenCV stitching algorithm works. So, after picking some frames and rotating them (as mentioned before):

```python
import cv2

stitcher = cv2.Stitcher.create()
status, pano = stitcher.stitch(images)  # images: manually picked video frames -_-
# status == cv2.Stitcher_OK on success; pano is then the stitched image
```
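In the demo video the camera mostly translates in one direction, so a full feature-based stitcher may be more than is needed. A minimal sketch rests on three assumptions:

- The motion is pure translation.
- All frames have the same size.
- Integer frame positions are already known, for example accumulated from pairwise shift estimates.

The sketch simply pastes each selected frame onto a canvas. `mosaic_by_translation` is a hypothetical helper. Unlike `cv2.Stitcher`, it does no blending or exposure compensation.

```python
import numpy as np


def mosaic_by_translation(frames, offsets):
    """Paste equal-size frames onto one canvas at integer (y, x) positions.

    offsets[i] is the position of frame i's top-left corner in a common
    coordinate system (e.g. relative to frame 0). Later frames overwrite
    earlier ones where they overlap; there is no blending.
    """
    h, w = frames[0].shape[:2]
    ys = [int(oy) for oy, _ in offsets]
    xs = [int(ox) for _, ox in offsets]
    y0, x0 = min(ys), min(xs)  # shift so that every position is non-negative
    canvas = np.zeros((max(ys) - y0 + h, max(xs) - x0 + w) + frames[0].shape[2:],
                      dtype=frames[0].dtype)
    for frame, y, x in zip(frames, ys, xs):
        top, left = y - y0, x - x0
        canvas[top:top + h, left:left + w] = frame
    return canvas


# Three overlapping 10x8 crops of a 10x20 "scene" reassemble into the scene.
full = np.arange(10 * 20).reshape(10, 20)
frames = [full[:, 0:8], full[:, 6:14], full[:, 12:20]]
pano = mosaic_by_translation(frames, [(0, 0), (0, 6), (0, 12)])
assert np.array_equal(pano, full)
```

If shifts are measured as the displacement of image content from one frame to the next, the camera moves the opposite way. A frame's position is therefore the previous frame's position minus that shift. For the stitcher route, `cv2.Stitcher.create(cv2.Stitcher_SCANS)` selects OpenCV's mode for scans under an affine model. That mode may suit a translating camera better than the default panorama mode.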

Update

After some trials, I have more or less settled on a non-programming solution, but I would love to see an efficient programmatic approach.

For the demo video, I used Adobe products (Premiere Pro and Photoshop) to do this task, following a video tutorial. The problem was that I first exported the video frames from Premiere without dropping any, which makes the later steps computationally expensive. I then stitched them in Photoshop as described in the YouTube tutorial. This was too heavy for these editing tools and didn't seem like a good approach, but the output was better than anything I had achieved so far. Even so, I used only 400+ of the 1200+ frames.

Here are some big challenges. Unlike the demo clip, the original video clips come with some serious complications:

- The camera motion is not smooth (camera shake)

- Lighting conditions vary, so the same spot can look different from frame to frame

- Camera flickering or banding

These conditions are not present in the demo video, and they make creating a panorama from such videos much harder. Even with the non-programming approach (Adobe tools), I couldn't get a good result.
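For the harder clips, two cheap per-frame checks may help before matching:

- **Exposure normalization.** A global gain/offset correction matches each frame's mean and standard deviation to a reference frame. It only addresses uniform brightness changes such as global flicker, not banding or local lighting differences.
- **Sharpness score.** The variance of a Laplacian can be used to skip frames blurred by camera shake.

Both are numpy-only sketches. The function names, the choice of reference frame and any blur cut-off are hypothetical.

```python
import numpy as np


def normalize_exposure(frame, ref):
    """Match the global mean and standard deviation of a grayscale `frame`
    to those of `ref` (a crude gain/offset correction, clipped to 8-bit range)."""
    f = frame.astype(np.float64)
    r = ref.astype(np.float64)
    scaled = (f - f.mean()) / (f.std() + 1e-8) * r.std() + r.mean()
    return np.clip(scaled, 0, 255)


def sharpness(gray):
    """Variance of a 4-neighbour Laplacian; low values suggest motion blur."""
    g = gray.astype(np.float64)
    lap = (g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:]
           - 4.0 * g[1:-1, 1:-1])
    return lap.var()
```

A blur cut-off would have to be tuned per video, for example by comparing each frame's score with the median score of its neighbours rather than with a fixed number.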

For now, though, all I want is a panorama image from the demo video, which doesn't have the problems above. I would still welcome any comments or suggestions on the harder case.