tl;dr

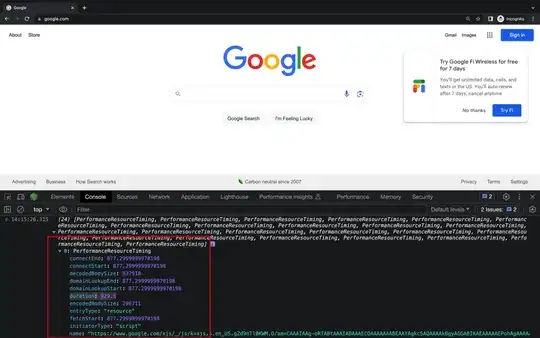

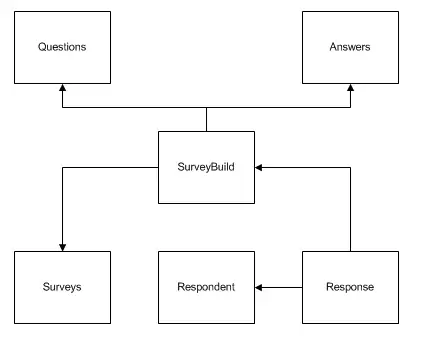

The page makes additional requests to pick up that info. Those additional requests rely on combinations of ids; an id pulled from the option element value attribute, of each option within Division dropdown, in tandem with an id pulled from the option element value attribute of each option within the District dropdown.

You can make an initial request to get all the Division dropdown ids:

divisions <- options_df("#division option:nth-child(n+2)", "division")

nth-child(n+2) is used to exclude the initial 'select' option.

This returns a dataframe with the initial divisionIDs and friendly division names.

Those ids can then be used to retrieve the associated districtIDs (the options which become available in the second dropdown after making your selection in the first):

districts <- pmap_dfr(

list(divisions$divisionID),

~ {

df_districts <- districts_from_updated_session(.x, "district") %>%

mutate(

divisionID = .x

)

return(df_districts)

}

)

This returns a dataframe mapping the divisionID to all the associated districtIDs, as well as the friendly district names:

By including the divisionID in both dataframes I can inner-join them:

div_district <- dplyr::inner_join(divisions, districts, by = "divisionID", copy = FALSE)

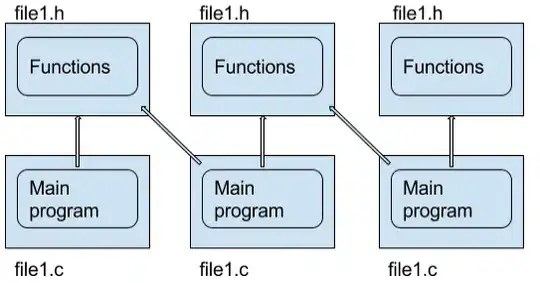

Up until now, I have been using a session object for the efficiency of tcp re-use. Unfortunately, I couldn't find anything in the documentation covering how to update an already open session allowing for sending a new POST request with dynamic body argument. Instead, I leveraged furrr::future_map to try and gain some efficiencies through parallel processing:

df <- div_district %>%

mutate(json = furrr::future_map(divisionID, .f = get_covid_data, districtID))

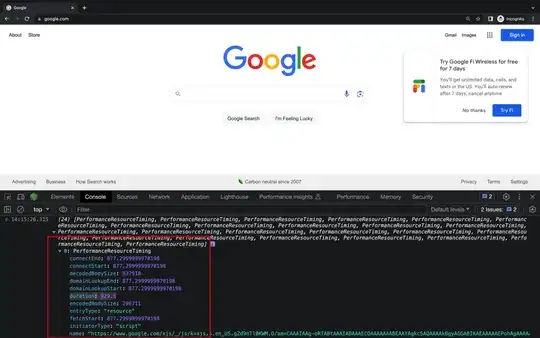

To get the final covid numbers, via get_covid_data(), I leverage some perhaps odd behaviour of the server, in that I could make a GET, passing divisionID and districtID within the body, then regex out part of the jquery datatables scripting, string clean that into a json valid string, then read that into a json object stored in the json column of the final dataframe.

Inside of the json column

R:

library(httr)

#> Warning: package 'httr' was built under R version 4.0.3

library(rvest)

#> Loading required package: xml2

#> Warning: package 'xml2' was built under R version 4.0.3

library(tidyverse)

#> Warning: package 'tibble' was built under R version 4.0.3

#> Warning: package 'forcats' was built under R version 4.0.3

library(jsonlite)

#> Warning: package 'jsonlite' was built under R version 4.0.3

#>

#> Attaching package: 'jsonlite'

#> The following object is masked from 'package:purrr':

#>

#> flatten

library(furrr)

#> Warning: package 'furrr' was built under R version 4.0.3

#> Loading required package: future

#> Warning: package 'future' was built under R version 4.0.3

## to clean out everything before a run

# rm(list = ls(all = TRUE))

# invisible(lapply(paste0('package:', names(sessionInfo()$otherPkgs)), detach, character.only=TRUE, unload=TRUE)) # https://stackoverflow.com/a/39235076 @mmfrgmpds

#returns value:text for options e.g. divisions/districts (dropdown)

options_df <- function(css_selector, level) {

nodes <- session %>% html_nodes(css_selector)

options <- nodes %>% map_df(~ c(html_attr(., "value"), html_text(.)) %>%

set_names(paste0(level, "ID"), level))

return(options)

}

#returns districts associated with division

districts_from_updated_session <- function(division_id, level) {

session <- jump_to(session, paste0("http://103.247.238.92/webportal/pages/ajaxDataDistrictDHIS2Dashboard.php?division_id=", division_id))

return(options_df("#district option:nth-child(n+2)", level))

}

# returns json object housing latest 12 month covid numbers by divisionID + districtID pairing

get_covid_data <- function(divisionID, districtID) {

headers <- c(

"user-agent" = "Mozilla/5.0",

"if-modified-since" = "Wed, 08 Jul 2020 00:00:00 GMT" # to mitigate for caching

)

data <- list("division" = divisionID, "district" = districtID, "period" = "LAST_12_MONTH", "Submit" = "Search")

r <- httr::GET(url = "http://103.247.238.92/webportal/pages/covid19.php", httr::add_headers(.headers = headers), body = data)

data <- stringr::str_match(content(r, "text"), "DataTable\\((\\[[\\s\\S]+\\])\\)")[1, 2] %>% #clean up extracted string so can be parsed as valid json

gsub("role", '"role"', .) %>%

gsub("'", '"', .) %>%

gsub(",\\s+\\]", "]", .) %>%

str_squish() %>%

jsonlite::parse_json()

return(data)

}

url <- "http://103.247.238.92/webportal/pages/covid19.php"

headers <- c("User-Agent" = "Mozilla/4.0", "Referer" = "http://103.247.238.92/webportal/pages/covid19.php")

session <- html_session(url, httr::add_headers(.headers = headers)) #for tcp re-use

divisions <- options_df("#division option:nth-child(n+2)", "division") #nth-child(n+2) to exclude initial 'select' option

districts <- pmap_dfr(

list(divisions$divisionID),

~ {

df <- districts_from_updated_session(.x, "district") %>%

mutate(

divisionID = .x

)

return(df)

}

)

div_district <- dplyr::inner_join(divisions, districts, by = "divisionID", copy = FALSE)

no_cores <- future::availableCores() - 1

future::plan(future::multisession, workers = no_cores)

df <- div_district %>%

mutate(json = future_map(divisionID, .f = get_covid_data, districtID))

Created on 2021-03-04 by the reprex package (v0.3.0)

Py

import requests, re, ast

from bs4 import BeautifulSoup as bs

def options_dict(soup, css_selector):

options = {i.text:i['value'] for i in soup.select(css_selector) if i['value']}

return options

def covid_numbers(text):

covid_data = p.findall(text)[0]

covid_data = re.sub(r'\n\s+', '', covid_data.replace("role","'role'"))

covid_data = ast.literal_eval(covid_data)

return covid_data

url = 'http://103.247.238.92/webportal/pages/covid19.php'

regions = {}

result = {}

p = re.compile(r'DataTable\((\[[\s\S]+\])\)')

with requests.Session() as s:

s.headers = {'User-Agent': 'Mozilla/5.0', 'Referer': 'http://103.247.238.92/webportal/pages/covid19.php'}

soup = bs(s.get(url).content, 'lxml')

divisions = options_dict(soup, '#division option')

for k,v in divisions.items():

r = s.get(f'http://103.247.238.92/webportal/pages/ajaxDataDistrictDHIS2Dashboard.php?division_id={v}')

soup = bs(r.content, 'lxml')

districts = options_dict(soup, '#district option')

regions[k] = districts

s.headers = {'User-Agent': 'Mozilla/5.0','if-modified-since': 'Wed, 08 Jul 2020 22:27:07 GMT'}

for k,v in divisions.items():

result[k] = {}

for k2,v2 in regions.items():

data = {'division': k2, 'district': v2, 'period': 'LAST_12_MONTH', 'Submit': 'Search'}

r = s.get('http://103.247.238.92/webportal/pages/covid19.php', data=data)

result[k][k2] = covid_numbers(r.text)