I want an input (x) of 5 points and an output (y) of the same size. Then I want to fit a curve to this dataset, and finally use matplotlib to draw the curve together with the points to show a non-linear regression. I am trying to fit a single curve to my dataset of 5 points, but it does not seem to work. It is probably simple, but I'm new to sklearn. Do you know what is wrong with my code? Here is the code:

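import numpy as np

import matplotlib.pyplot as plt

from sklearn.preprocessing import PolynomialFeatures

from sklearn.linear_model import LinearRegression

# Here is the dataset of 5 points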

x=np.random.normal(size=5)

y=2.2*x-1.1

y=y+np.random.normal(scale=3,size=y.shape)

x=x.reshape(-1,1)

# I use PolynomialFeatures because I want extra (polynomial) dimensions

preproc=PolynomialFeatures(degree=4)

x_poly=preproc.fit_transform(x)

# Here I create 100 points to feed to the polynomial so that I can then draw a curve

x_line=np.linspace(-2,2,100)

x_line=x_line.reshape(-1,1)

# At this point I compute y_hat in order to have the predicted values of y

poly_line=PolynomialFeatures(degree=4)

x_feats=poly_line.fit_transform(x_line)

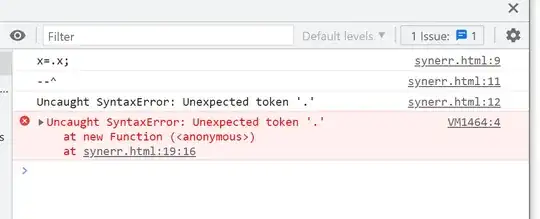

y_hat=LinearRegression().fit(x_feats,y).predict(x_feats)

plt.plot(y_hat,y_line,"r")

plt.plot(x,y,"b.")
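
For comparison, here is a minimal sketch of how these steps might fit together, assuming the intent is to train on the 5 points and only predict on the 100-point grid. In the code above, the model is fitted on `x_feats` (100 rows) against `y` (5 values), so the sample counts do not match, and `y_line` is never defined in the plot call. The sketch fits on `x_poly` instead, reuses the same fitted transformer for the grid, and plots `x_line` against `y_hat`. The fixed seed and the names `rng` and `model` are my own additions, not part of the original code.

```python
import numpy as np
import matplotlib.pyplot as plt
from sklearn.linear_model import LinearRegression
from sklearn.preprocessing import PolynomialFeatures

# Fixed seed so the run is reproducible (an assumption, not in the original)
rng = np.random.default_rng(0)

# Dataset of 5 points: a noisy straight line
x = rng.normal(size=5)
y = 2.2 * x - 1.1 + rng.normal(scale=3, size=x.shape)
x = x.reshape(-1, 1)

# Expand the 5 training inputs into polynomial features and fit on them
preproc = PolynomialFeatures(degree=4)
x_poly = preproc.fit_transform(x)
model = LinearRegression().fit(x_poly, y)

# Reuse the already fitted transformer for the 100 grid points,
# then predict on the grid only (no fitting here)
x_line = np.linspace(-2, 2, 100).reshape(-1, 1)
y_hat = model.predict(preproc.transform(x_line))

# Grid values go on the horizontal axis, predictions on the vertical axis
plt.plot(x_line, y_hat, "r")
plt.plot(x, y, "b.")
# Call plt.show() here to display the figure interactively
```

Note that a degree-4 polynomial has enough freedom to pass through all 5 points, so the red curve will likely look quite wiggly; a lower degree such as 2 may give a smoother fit.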