The issue

I have arrays which track certain items over time. The items belong to certain categories. I want to calculate the sum by time and category, e.g. to go from a table by time and city to one by time and country.

I have found a couple of ways, but they seem clunky - there must be a better way! Surely I'm not the first one with this issue? Maybe using np.where?

More specifically:

I have a number of numpy arrays of shape (p x i), where p is the period and i is the item I am tracking over time. I then have a separate array of shape i which classifies the items into categories (red, green, yellow, etc.).

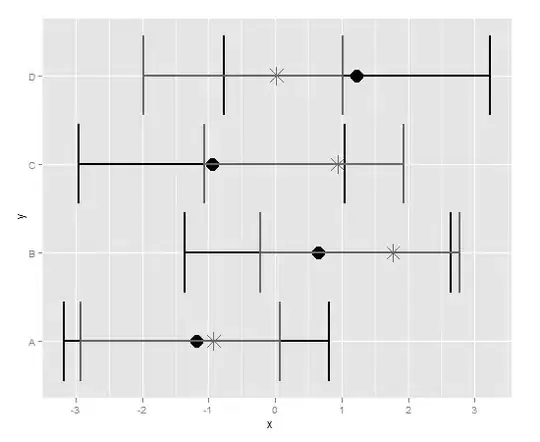

What I want to do is calculate an array of shape (p x number of unique categories) which sums the values of the big array by time and category. In pictures:

I'd need the code to be as efficient as possible as I need to do this multiple times on arrays which can be up to 400 x 1,000,000

What I have tried:

This question covers a number of ways to groupby without resorting to pandas. I like the scipy.ndimage approach, but AFAIK it works on one dimension only.

I have tried a solution with pandas:

- I create a dataframe of shape periods x items

- I unpivot it with

pd.melt(), join the categories and do a crosstab period/categories

I have also tried a set of loops, optimised with numba:

- A first loop creates an array which converts the categories into integers, i.e. the first category in alphabetical order becomes 0, the 2nd 1, etc

- A second loop iterates through all the items, then for each item it iterates through all the periods and sums by category

My findings

- for small arrays, pandas is faster

- for large arrays, numba is better, but it's better to set

parallel = Falsein the numba decorator - for very large arrays, numba with

parallel = Trueshinesparallel = Truemakes use of numba's parallelisation by usingnumba.prangeon the outer loops.

PS I am aware of the pitfalls of premature optimisation etc etc - I am only looking into this because a significant amount of time is spent doing precisely this

The code

import numpy as np

import pandas as pd

import time

import numba

periods = 300

n = int(2000)

categories = np.tile(['red','green','yellow','brown'],n)

my_array = np.random.randint(low = 0, high = 10, size = (periods, len(categories) ))

# my_arrays will have shape (periods x (n * number of categories))

#---- pandas

start = time.time()

df_categories = pd.DataFrame(data = categories).reset_index().rename(columns ={'index':'item',0:'category'})

df = pd.DataFrame(data = my_array)

unpiv = pd.melt(df.reset_index(), id_vars ='index', var_name ='item', value_name ='value').rename( columns = {'index':'time'})

unpiv = pd.merge(unpiv, df_categories, on='item' )

crosstab = pd.crosstab( unpiv['time'], unpiv['category'], values = unpiv['value'], aggfunc='sum' )

print("panda crosstab in:")

print(time.time() - start)

# yep, I know that timeit.timer would have been better, but I was in a hurry :)

print("")

#---- numba

@numba.jit(nopython = True, parallel = True, nogil = True)

def numba_classify(x, categories):

cat_uniq = np.unique(categories)

num_categories = len(cat_uniq)

num_items = x.shape[1]

periods = x.shape[0]

categories_converted = np.zeros(len(categories), dtype = np.int32)

out = np.zeros(( periods, num_categories))

# before running the actual classification, I must convert the categories, which can be strings, to

# the corresponsing number in cat_uniq, e.g. if brown is the first category by alphabetical sorting, then

# brown --> 0, etc

for i in numba.prange(num_items):

for c in range(num_categories):

if categories[i] == cat_uniq[c]:

categories_converted[i] = c

for i in numba.prange(num_items):

for p in range(periods):

out[ p, categories_converted[i] ] += x[p,i]

return out

start = time.time()

numba_out = numba_classify(my_array, categories)

print("numba done in:")

print(time.time() - start)