I have spent the last 4 days trying to resolve this issue, and none of the suggestions I found online have helped. I'm trying to build a "simple" Cordova app that uses face-api.js for mood/expression detection on a real-time local camera feed. The setup is straightforward: the video comes from the iosrtc Cordova plugin, and face-api.js draws face landmarks, etc. on a canvas overlay.

I receive the following error in the Safari console (I'm testing directly on an iOS device): `[Error] Failed to load resource: The requested URL was not found on this server. (null, line 0) file:///private/var/containers/Bundle/Application/6ADC69C6-C6F1-45D9-B609-0C85B3E47C4A/Angel.app/www/null`

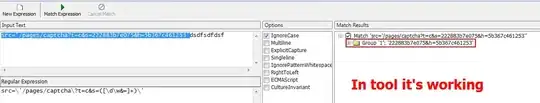

The strange thing is that the data image seems to resolve after a second or two. You can see that happening here:

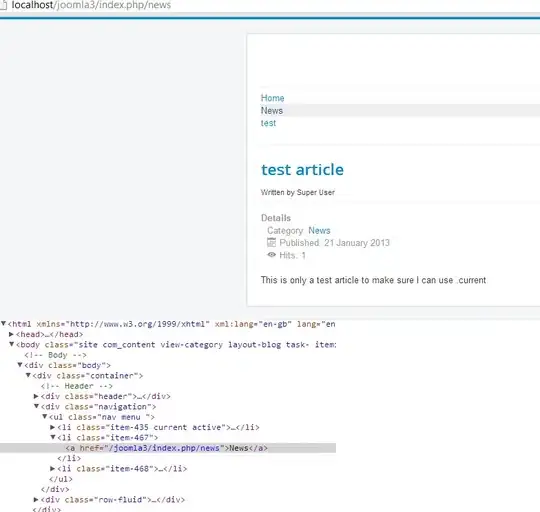

Notice that the images start to resolve as my "interval code" repeats.

Let's dive into the code:

HTML

    <!DOCTYPE html>
    <html>
      <head>
        <meta
          http-equiv="Content-Security-Policy"
          content=" media-src * blob: ; script-src * 'self' 'unsafe-inline' 'unsafe-eval'; connect-src * blob: http: https: 'self' 'unsafe-inline' 'unsafe-eval' http://*/* https://*/* https://*:* "
        />
        <meta name="format-detection" content="telephone=no" />
        <meta name="msapplication-tap-highlight" content="no" />
        <meta
          name="viewport"
          content="width=device-width, initial-scale=1.0, maximum-scale=1.0, user-scalable=no,viewport-fit=cover"
        />
        <link rel="stylesheet" type="text/css" href="css/index.css" />
        <link
          href="https://fonts.googleapis.com/css?family=Roboto+Mono:400,500,700"
          rel="stylesheet"
          type="text/css"
        />
        <link
          href="https://fonts.googleapis.com/css?family=Roboto:400,500,700,400italic"
          rel="stylesheet"
          type="text/css"
        />
        <link
          href="https://fonts.googleapis.com/icon?family=Material+Icons"
          rel="stylesheet"
          type="text/css"
        />
        <title>Angel</title>
      </head>
      <body>
        <div class="app">
          <!--*******************
              local video stream
          ********************-->
          <div class="local-stream" id="local-stream"></div>
        </div>
        <script type="text/javascript" src="cordova.js"></script>
        <script type="text/javascript" src="js/faceapi/face-api.js"></script>
        <script type="text/javascript" src="js/main.js"></script>
      </body>
    </html>

JS:

    document.addEventListener("deviceready", function () {
      (async () => {
        let MODEL_URL =
          window.cordova.file.applicationDirectory + "www/assets/models/";
        let videoContainer = document.getElementById("local-stream");
        var localStream, localVideoEl;
        localVideoEl = document.createElement("video");
        localVideoEl.style.height = "250px";
        localVideoEl.style.width = "250px";
        localVideoEl.setAttribute("autoplay", "autoplay");
        localVideoEl.setAttribute("playsinline", "playsinline");
        localVideoEl.setAttribute("id", "video");
        localVideoEl.setAttribute("class", "local-video");
        videoContainer.appendChild(localVideoEl);
        cordova.plugins.iosrtc.registerGlobals();
        return navigator.mediaDevices
          .getUserMedia({
            video: true,
            audio: true,
          })
          .then(function (stream) {
            console.log("getUserMedia.stream", stream);
            console.log("getUserMedia.stream.getTracks", stream.getTracks());
            // Note: Expose for debug
            localStream = stream;
            // Attach local stream to video element
            localVideoEl.srcObject = localStream;
            //return localStream;
            (async () => {
              await faceapi.nets.ssdMobilenetv1.loadFromUri(
                "MYHOSTEDMODELS(THIS WORKS FINE)"
              );
              await faceapi.nets.faceLandmark68Net.loadFromUri(
                "MYHOSTEDMODELS(THIS WORKS FINE)"
              );
              await faceapi.nets.faceExpressionNet.loadFromUri(
                "MYHOSTEDMODELS(THIS WORKS FINE)"
              );
              await faceapi.nets.faceRecognitionNet.loadFromUri(
                "MYHOSTEDMODELS(THIS WORKS FINE)"
              );
              const video = document.getElementById("video");
              const canvas = faceapi.createCanvasFromMedia(video);
              videoContainer.append(canvas);
              const dimensions = {
                width: 250,
                height: 250,
              };
              faceapi.matchDimensions(canvas, dimensions);
              setInterval(async () => {
                let detection = await faceapi
                  .detectAllFaces(video)
                  .withFaceLandmarks()
                  .withFaceExpressions()
                  .withFaceDescriptors();
                let resizedDimensions = faceapi.resizeResults(
                  detection,
                  dimensions
                );
                //DRAW ON CAMERA IF YOU WANT.
                canvas.getContext("2d").clearRect(0, 0, 250, 250);
                faceapi.draw.drawDetections(canvas, resizedDimensions);
                faceapi.draw.drawFaceLandmarks(canvas, resizedDimensions);
                faceapi.draw.drawFaceExpressions(canvas, resizedDimensions);
              }, 100);
            })();
          })
          .catch(function (err) {
            console.log("getUserMedia.error errrrr", err, err.stack);
          });
      })().then(() => {});
    });
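Because the `setInterval` callback above is `async` and fires every 100 ms, a new detection pass can start before the previous one has finished. Whether that overlap has anything to do with the error is not known; the guard below is only a minimal sketch for ruling it out. `skipWhileBusy` is a hypothetical helper name and is not part of face-api.js or cordova-plugin-iosrtc.

```javascript
// Hypothetical helper (not a face-api.js API): wraps an async task so that
// a call made while a previous call is still pending is skipped.
function skipWhileBusy(task) {
  let busy = false;
  return async function guarded() {
    if (busy) {
      return false; // previous pass still running, skip this tick
    }
    busy = true;
    try {
      await task();
    } finally {
      busy = false; // release the guard even if the task throws
    }
    return true;
  };
}
```

It could be used as `setInterval(skipWhileBusy(async () => { /* detection and drawing */ }), 100);`, so that at most one detection pass is in flight at a time.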

PLUGINS (package.json)

    "cordova": {
      "plugins": {
        "cordova-plugin-whitelist": {},
        "cordova.plugins.diagnostic": {},
        "cordova-plugin-statusbar": {},
        "cordova-plugin-background-mode": {},
        "cordova-plugin-ipad-multitasking": {},
        "cordova-custom-config": {},
        "cordova-plugin-file": {},
        "cordova-plugin-device": {},
        "cordova-plugin-wkwebview-engine": {},
        "@globules-io/cordova-plugin-ios-xhr": {},
        "cordova-plugin-iosrtc": {
          "MANUAL_INIT_AUDIO_DEVICE": "FALSE"
        }
      },
      "platforms": [
        "browser",
        "ios"
      ]
    },

One last note: the video plays correctly. It's face-api.js that seems to blow the entire thing up. Any help would be much appreciated!
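Since the failures appear during the first second or two and then stop, one diagnostic that may help is to log whether the video element reports a decoded frame at the moment each detection pass starts. The sketch below relies only on the standard `readyState`, `videoWidth` and `videoHeight` properties of a video element. It assumes the element shimmed by the iosrtc plugin reports these values accurately, which has not been verified here, and `hasCurrentFrame` and `describeVideoState` are hypothetical helper names.

```javascript
// Hypothetical helper: not part of face-api.js or cordova-plugin-iosrtc.
// Returns true only when the element reports a decoded frame with real dimensions.
function hasCurrentFrame(video) {
  const HAVE_CURRENT_DATA = 2; // same value as HTMLMediaElement.HAVE_CURRENT_DATA
  return (
    Boolean(video) &&
    video.readyState >= HAVE_CURRENT_DATA &&
    video.videoWidth > 0 &&
    video.videoHeight > 0
  );
}

// Hypothetical helper: collects the values worth logging for each pass.
function describeVideoState(video) {
  return {
    ready: hasCurrentFrame(video),
    readyState: video ? video.readyState : null,
    width: video ? video.videoWidth : null,
    height: video ? video.videoHeight : null,
  };
}
```

At the top of the interval callback, something like `if (!hasCurrentFrame(video)) { console.log("skipping pass", describeVideoState(video)); return; }` would show whether the `www/null` requests line up with passes that ran before a frame was available.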