What is the difference between deploying a dockerized FastAPI app with plain Uvicorn and deploying it with Tiangolo's Gunicorn+Uvicorn image? And why do my results show better performance when deploying with Uvicorn alone than with Gunicorn+Uvicorn?

Tiangolo's documentation says:

> You can use Gunicorn to manage Uvicorn and run multiple of these concurrent processes. That way, you get the best of concurrency and parallelism.

From this, can I assume that using Gunicorn will give better results?
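For context, Gunicorn acts only as a process manager in this setup. It forks several worker processes, and each worker runs a Uvicorn event loop through Uvicorn's `uvicorn.workers.UvicornWorker` class. Gunicorn config files are plain Python, so a minimal config can be sketched as follows. The file name `gunicorn_conf.py`, the port fallback, the one-worker-per-core formula and the timeout value are illustrative assumptions, not the settings the Tiangolo image actually uses:

```python
# gunicorn_conf.py (hypothetical file name). Start the app with:
#   gunicorn -c gunicorn_conf.py main:app
import multiprocessing
import os

# Cloud Run passes the listening port in $PORT; 8080 is an assumed fallback
bind = f"0.0.0.0:{os.environ.get('PORT', '8080')}"

# Gunicorn only manages processes; each worker runs a Uvicorn event loop
worker_class = "uvicorn.workers.UvicornWorker"

# Illustrative choice: one worker process per CPU core
workers = multiprocessing.cpu_count()

# Seconds a worker may stay silent before Gunicorn kills and restarts it
# (Gunicorn's default is 30); 120 is an arbitrary example value
timeout = 120
```

With `workers = 1`, this setup behaves much like Uvicorn alone. Gunicorn then adds only supervision, such as restarting a crashed or timed-out worker.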

I tested both deployments with JMeter. I deployed my script to Google Cloud Run, and these are the results:

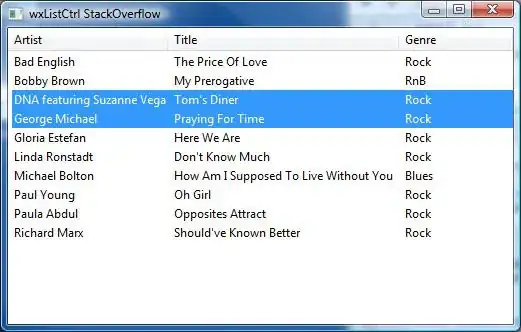

Using Python and Uvicorn:

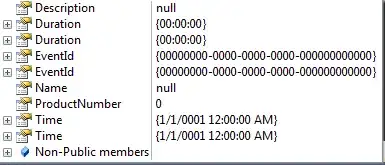

Using Tiangolo's Gunicorn+Uvicorn:

This is my Dockerfile for Python (Uvicorn):

```dockerfile
FROM python:3.8-slim-buster

RUN apt-get update --fix-missing
RUN DEBIAN_FRONTEND=noninteractive apt-get install -y libgl1-mesa-dev python3-pip git

RUN mkdir /usr/src/app
WORKDIR /usr/src/app

COPY ./requirements.txt /usr/src/app/requirements.txt
RUN pip3 install -U setuptools
RUN pip3 install --upgrade pip
RUN pip3 install -r ./requirements.txt --use-feature=2020-resolver

COPY . /usr/src/app

CMD ["python3", "/usr/src/app/main.py"]
```
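The `CMD` above runs `main.py` directly. That file isn't shown, so the following is an assumption: it presumably starts the server with `uvicorn.run(...)`, meaning one Uvicorn process with no process manager in front of it. The helper name `uvicorn_settings` and the `main:app` import string are hypothetical:

```python
# main.py (sketch): assumes the FastAPI instance is named `app` in this module
import os


def uvicorn_settings(environ=os.environ) -> dict:
    """Settings for a single Uvicorn process listening on Cloud Run's $PORT."""
    return {
        "host": "0.0.0.0",                         # listen on all container interfaces
        "port": int(environ.get("PORT", "8080")),  # Cloud Run injects PORT; 8080 is a fallback
        "workers": 1,                              # one process, one event loop
    }


if __name__ == "__main__":
    import uvicorn  # imported here so uvicorn_settings can be tried without uvicorn

    uvicorn.run("main:app", **uvicorn_settings())
```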

This is my Dockerfile for Tiangolo's Gunicorn+Uvicorn:

```dockerfile
FROM tiangolo/uvicorn-gunicorn-fastapi:python3.8-slim

RUN apt-get update && apt-get install wget gcc -y

RUN mkdir -p /app
WORKDIR /app

COPY ./requirements.txt /app/requirements.txt
RUN python -m pip install --upgrade pip
RUN pip install --no-cache-dir -r /app/requirements.txt

COPY . /app
# No CMD: the base image's default start script launches Gunicorn with Uvicorn workers
```
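Because this Dockerfile has no `CMD`, the number of Gunicorn workers comes from the base image. As I understand the image's README, the count is derived from the `WEB_CONCURRENCY`, `WORKERS_PER_CORE` (default 1) and `MAX_WORKERS` environment variables, with a default minimum of 2 workers. The function below approximates that rule. It is a sketch based on that understanding, not the image's actual code, and the name `gunicorn_worker_count` is hypothetical:

```python
def gunicorn_worker_count(cores: int, env: dict) -> int:
    """Approximate worker count chosen by the Gunicorn+Uvicorn image (sketch)."""
    if env.get("WEB_CONCURRENCY"):
        # An explicit WEB_CONCURRENCY overrides the automatic calculation
        return int(env["WEB_CONCURRENCY"])
    workers_per_core = float(env.get("WORKERS_PER_CORE", "1"))
    # Cores times workers-per-core, but never fewer than 2 by default
    workers = max(int(workers_per_core * cores), 2)
    if env.get("MAX_WORKERS"):
        # MAX_WORKERS caps the automatically computed value
        workers = min(workers, int(env["MAX_WORKERS"]))
    return workers


print(gunicorn_worker_count(1, {}))                         # 2
print(gunicorn_worker_count(1, {"WEB_CONCURRENCY": "1"}))   # 1
```

Under this rule, a single-core container runs two worker processes, whereas the plain Uvicorn container runs one. Setting `WEB_CONCURRENCY=1` would make the two setups comparable process-for-process.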

You can see the error in the Tiangolo Gunicorn+Uvicorn results. Is it caused by Gunicorn?
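One factor worth checking is memory. This is an assumption, not a diagnosis. Every worker process is a separate Python interpreter, so a module-level cache of loaded models is not shared between workers. Each worker therefore loads its own copy of every model it serves. The back-of-the-envelope estimate below uses made-up sizes. The six models correspond to the five top brands plus the `ex_Top5` fallback; the function name `estimated_memory_mb` is hypothetical:

```python
def estimated_memory_mb(workers: int, base_mb: float, model_sizes_mb: list) -> float:
    """Rough peak memory if every worker process ends up loading every model."""
    per_worker = base_mb + sum(model_sizes_mb)  # interpreter/app overhead plus models
    return workers * per_worker


# Hypothetical numbers: 150 MB per process plus six models of 200 MB each
print(estimated_memory_mb(1, 150, [200] * 6))  # 1350 (single Uvicorn process)
print(estimated_memory_mb(2, 150, [200] * 6))  # 2700 (two Gunicorn workers)
```

If the multi-worker figure exceeds the memory limit configured for the Cloud Run service, instances can be terminated under load. That would show up as failed requests in JMeter.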

Edit:

In my case, I use lazy loading for my machine learning models. This is the class that loads a model:

```python
import os

import joblib


class MyModelPrediction:
    # init method or constructor
    def __init__(self, brand):
        self.brand = brand

    # Load the pickled model for this brand from modules/model/
    def load_model(self):
        pathfile_model = os.path.join("modules", "model")
        brand = self.brand.lower()
        top5_brand = ["honda", "toyota", "nissan", "suzuki", "daihatsu"]
        # Every brand outside the top 5 shares one fallback model
        if brand not in top5_brand:
            brand = "ex_Top5"
        with open(os.path.join(pathfile_model, f"{brand}_all_in_one.pkl"), "rb") as file:
            model = joblib.load(file)
        return model
```
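The same lazy loading can also be sketched with `functools.lru_cache`, which memoizes the loaded model per normalized brand key. The names `model_key`, `model_path` and `load_brand_model` are hypothetical. `joblib` is imported inside the cached function only so the path logic can be tried without it installed:

```python
import functools
import os

TOP5_BRANDS = {"honda", "toyota", "nissan", "suzuki", "daihatsu"}
MODEL_DIR = os.path.join("modules", "model")


def model_key(brand: str) -> str:
    """Map a brand to the model file it uses (top-5 brand or shared fallback)."""
    brand = brand.lower()
    return brand if brand in TOP5_BRANDS else "ex_Top5"


def model_path(brand: str) -> str:
    return os.path.join(MODEL_DIR, f"{model_key(brand)}_all_in_one.pkl")


@functools.lru_cache(maxsize=None)
def _load_by_key(key: str):
    import joblib  # third-party; only needed when a model is actually loaded

    with open(os.path.join(MODEL_DIR, f"{key}_all_in_one.pkl"), "rb") as file:
        return joblib.load(file)


def load_brand_model(brand: str):
    # Normalize first so "Honda" and "honda" share one cache entry
    return _load_by_key(model_key(brand))
```

Like the dict cache in the question, `lru_cache` lives inside one process. Each Gunicorn worker still loads its own copy.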

And this is my API endpoint:

```python
import time
from typing import Any

from fastapi import Body, HTTPException
from fastapi.responses import ORJSONResponse

# router, schemas, usedcar, get_data_model and MyModelPrediction
# are defined elsewhere in my project.


@router.post(
    "/predict",
    response_model=schemas.ResponsePrediction,
    responses={
        422: schemas.responses_dict[422],
        400: schemas.responses_dict[400],
        500: schemas.responses_dict[500],
    },
    tags=["predict"],
    response_class=ORJSONResponse,
)
async def detect(
    *,
    # db: Session = Depends(deps.get_db_api),
    car: schemas.Car = Body(...),
    customer_id: str = Body(None, title="Customer unique identifier"),
) -> Any:
    """
    Predict price for used vehicle.\n
    """
    try:
        start_time = time.time()
        # Normalize so "Honda" and "honda" use the same cache key
        brand = car.dict()["brand"].lower()
        obj = MyModelPrediction(brand)
        top5_brand = ["honda", "toyota", "nissan", "suzuki", "daihatsu"]
        # All brands outside the top 5 share the "non" cache entry
        if brand not in top5_brand:
            brand = "non"
        # Lazy load: only load the model the first time its key is requested
        # in this process
        if not usedcar.price_engine_4w.get(brand):
            usedcar.price_engine_4w[brand] = obj.load_model()
            print("Load success")
        elapsed_time = time.time() - start_time
        print(usedcar.price_engine_4w)
        print("ELAPSED MODEL TIME : ", elapsed_time)

        list_detections = await get_data_model(**car.dict())
        if list_detections is None:
            result_data = None
        else:
            result_data = schemas.Prediction(**list_detections).dict()
    except Exception as e:  # noqa
        raise HTTPException(status_code=500, detail=str(e))
    else:
        # A missing prediction is also treated as an unprocessable request
        if result_data is None or result_data["prediction_price"] == 0:
            raise HTTPException(
                status_code=400,
                detail="The system cannot process your request",
            )
        result = {
            "code": 200,
            "message": "Successfully fetched data",
            "data": result_data,
        }
        return schemas.ResponsePrediction(**result)
```
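Because `detect` is an `async def` endpoint, the synchronous `joblib.load` inside `load_model()` runs on the event loop thread. Other requests handled by that process wait until it finishes. The sketch below shows one alternative, assuming the blocking loader is safe to run in a thread. It loads each model at most once per process, does the loading in the default thread pool, and uses a per-key `asyncio.Lock` so that simultaneous first requests wait for a single load instead of each starting their own. `LazyModelCache` is a hypothetical name. `loop.run_in_executor` is used because it is available on Python 3.8, the version both Dockerfiles use:

```python
import asyncio
from typing import Any, Callable, Dict


class LazyModelCache:
    """Per-process cache that loads each model at most once (sketch).

    Every Gunicorn/Uvicorn worker process gets its own instance, so with
    N workers a model may still be loaded N times in total.
    """

    def __init__(self, loader: Callable[[str], Any]) -> None:
        self._loader = loader  # blocking function: cache key -> model
        self._models: Dict[str, Any] = {}
        self._locks: Dict[str, asyncio.Lock] = {}

    async def get(self, key: str) -> Any:
        if key in self._models:
            return self._models[key]
        # One lock per key: concurrent first requests wait for a single load
        lock = self._locks.setdefault(key, asyncio.Lock())
        async with lock:
            if key not in self._models:
                loop = asyncio.get_running_loop()
                # Run the blocking load in a thread so the event loop
                # keeps serving other requests in the meantime
                self._models[key] = await loop.run_in_executor(None, self._loader, key)
        return self._models[key]
```

In the endpoint, this might be used as `model_cache = LazyModelCache(lambda key: MyModelPrediction(key).load_model())` at module level, followed by `await model_cache.get(brand)` inside `detect`. Both names are hypothetical.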