I cleaned up your code a bit while working on it. The trick is to perform everything step by step.

First, I locate all the links you want on the main page using `get_main_links`.
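`get_main_links` builds each absolute URL by plain string concatenation, `f"{root}{reference['href']}"`. That works as long as the site's hrefs are relative paths without a leading slash. If you are not sure that holds, the standard library's `urllib.parse.urljoin` resolves an href the way a browser does. A minimal sketch; the href values below are hypothetical, not taken from the site:

```python
from urllib.parse import urljoin

root = 'https://www.bilansgratuits.fr/'

# Hypothetical href shapes; the real ones depend on the site's markup.
hrefs = ['page.html', '/page.html', 'https://example.com/page.html']

# Browser-style resolution of every href against the root.
resolved = {href: urljoin(root, href) for href in hrefs}

for href in hrefs:
    naive = f"{root}{href}"  # concatenation, as done in get_main_links
    print(f"{href!r}: {naive} -> {resolved[href]}")
```

With a leading slash, concatenation produces `https://www.bilansgratuits.fr//page.html`, while `urljoin` gives `https://www.bilansgratuits.fr/page.html`; an already absolute href is returned unchanged.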

Then I find all sub-sectors using `get_sub_sectors`. This function has two parts: one that extracts all the sub-sectors (`_extract_sub_sectors`) and a second that locates all the rankings (`_extract_sub_sector_rankings`).
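Stripped of the HTTP requests and HTML parsing, `get_sub_sectors` is a nested loop that flattens sector, sub-sector and ranking into one list of dicts. The sketch below shows only that shape. `collect_rankings`, `fetch_sub_sectors` and `fetch_rankings` are hypothetical names, and the two fetchers are offline stand-ins for the `requests.get` + `BeautifulSoup` + `_extract_*` steps, so the logic can be tried without hitting the site:

```python
from typing import Callable, Dict, Iterable, List

Record = Dict[str, str]


def collect_rankings(
    sectors: Iterable[Record],
    fetch_sub_sectors: Callable[[str], List[Record]],
    fetch_rankings: Callable[[str], List[Record]],
) -> List[Record]:
    """Flatten sector -> sub-sector -> ranking into one list of dicts."""
    records = []
    for sector in sectors:
        # Part 1: sub-sectors listed on the sector page
        for sub_sector in fetch_sub_sectors(sector['url']):
            # Part 2: rankings listed on the sub-sector page
            for ranking in fetch_rankings(sub_sector['url']):
                records.append({
                    'names': ranking['name'],
                    'Secteur': sector['secteur'],
                    'Sous-Secteur': sub_sector['name'],
                    'Rankings': ranking['rank'],
                })
    return records


# Offline stand-ins for the HTTP + parsing steps (hypothetical URL keys).
SUB_SECTORS = {
    'sector-a': [{'name': '0113Z - Culture de légumes, de melons, de racines et de tubercules',
                  'url': 'sub-0113z'}],
}
RANKINGS = {
    'sub-0113z': [{'rank': '1', 'name': 'HM.CLAUSE (26800)'},
                  {'rank': '2', 'name': 'VILMORIN-MIKADO (49250)'}],
}

rows = collect_rankings(
    [{'secteur': 'A - Agriculture', 'url': 'sector-a'}],
    lambda url: SUB_SECTORS.get(url, []),
    lambda url: RANKINGS.get(url, []),
)
for row in rows:
    print(row)
```

Passing the fetchers in as functions is just one way to keep the flattening logic testable; the answer's code calls `requests` directly, which is simpler for a one-off script.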

The ranking code is heavily based on:

Note some extra things in my code:

I do not use bare try/except statements, since a bare `except:` also catches `KeyboardInterrupt` and many other (useful) errors. Instead, I only catch the `AttributeError` that you get when no element is found.
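The difference is easy to see in isolation. A minimal, self-contained sketch (all helper names are hypothetical): `soup.find(...)` returns `None` when nothing matches, so chaining `.find_all` on it raises `AttributeError`, which a targeted `except AttributeError` handles, while a bare `except:` would also swallow a Ctrl-C:

```python
def swallow_everything(action):
    try:
        return action()
    except:  # bare except: the anti-pattern, catches BaseException too
        return 'swallowed'


def catch_missing_element(action):
    try:
        return action()
    except AttributeError:  # only the "element not found" case
        return 'no element found'


def missing_div():
    container = None  # what soup.find(...) returns when nothing matches
    return container.find_all('tr')  # raises AttributeError on None


def user_presses_ctrl_c():
    raise KeyboardInterrupt


print(catch_missing_element(missing_div))       # no element found
print(swallow_everything(user_presses_ctrl_c))  # swallowed: Ctrl-C is ignored
# catch_missing_element(user_presses_ctrl_c) would let KeyboardInterrupt
# propagate, because KeyboardInterrupt derives from BaseException, not Exception.
```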

I put all my data in a list of dicts. The reason is that `pd.DataFrame` accepts this form directly, so I do not need to specify any columns later on.
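For example, two records shaped like the ones `get_sub_sectors` produces (values copied from the output further down) become a two-row DataFrame whose columns come straight from the dict keys:

```python
import pandas as pd

# Two records copied from the scraped output, in the shape get_sub_sectors builds.
records = [
    {'names': 'HM.CLAUSE (26800)', 'Secteur': 'A - Agriculture',
     'Sous-Secteur': '0113Z - Culture de légumes, de melons, de racines et de tubercules',
     'Rankings': '1'},
    {'names': 'VILMORIN-MIKADO (49250)', 'Secteur': 'A - Agriculture',
     'Sous-Secteur': '0113Z - Culture de légumes, de melons, de racines et de tubercules',
     'Rankings': '2'},
]

df = pd.DataFrame(records)  # column names are taken from the dict keys
print(list(df.columns))
print(df[['names', 'Rankings']])
```

If some dicts lack a key, pandas fills that column with `NaN` for those rows instead of raising.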

I use tqdm to show progress, because fetching every sub-sector page takes a while and it is nice to see that your program is actually doing something (`pip install tqdm`).
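The nested progress bars follow a simple pattern: an outer bar for the sectors and an inner bar with `leave=False`, so each finished inner bar is cleared instead of piling up. A minimal sketch with hypothetical data (`process_all` is an illustrative name); it falls back to a plain iterator if tqdm is not installed:

```python
try:
    from tqdm import tqdm
except ImportError:  # no tqdm installed: iterate without a progress bar
    def tqdm(iterable, **kwargs):
        return iterable


def process_all(sectors):
    """Visit every (sector, sub-sector) pair with nested progress bars."""
    visited = []
    for sector, sub_sectors in tqdm(sectors.items()):
        # leave=False clears the inner bar once it finishes
        for sub_sector in tqdm(sub_sectors, leave=False):
            visited.append((sector, sub_sector))
    return visited


# Hypothetical input: sector name -> list of sub-sector codes.
pairs = process_all({'A - Agriculture': ['0111Z', '0113Z']})
print(pairs)
```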

import logging

from pprint import pprint

from typing import List, Dict

import tqdm

import requests

import pandas as pd

from bs4 import BeautifulSoup

def get_main_links(root: str, soup: BeautifulSoup) -> List[Dict[str, str]]:

"""

Extract the links and secteur tags.

:return (dict)

Dictionary containing the following keys

- href: (str) relative link path from root website

- secteur: (str) sector name

- url: (str) absolute url to get to the page.

"""

data_sectors = []

all_links = soup.find('div', {'class': 'container_rss blocSecteursActivites'}).find_all('a', href=True)

for reference in all_links: # Limit search by using [:2]

data_sectors.append({

'href': reference['href'],

'secteur': reference.text,

'url': f"{root}{reference['href']}"

})

return data_sectors

def get_sub_sectors(root: str, data_sector: Dict[str, str]):

""" Retreive the sub sectors (sous-secteur). """

data_sub_sectors = []

for sector in tqdm.tqdm(data_sector):

result = requests.get(sector['url'])

soup = BeautifulSoup(result.text, 'html.parser')

sub_sectors = _extract_sub_sectors(root, soup)

for sub_sector in tqdm.tqdm(sub_sectors, leave=False): # Limit search by using [:2]

result = requests.get(sub_sector['url'])

soup = BeautifulSoup(result.text, 'html.parser')

rankings = _extract_sub_sector_rankings(sub_sector['url'], soup)

for ranking in rankings:

data_sub_sectors.append({

'names': ranking['name'],

'Secteur': sector['secteur'],

'Sous-Secteur': sub_sector['name'],

'Rankings': ranking['rank']

})

return data_sub_sectors

def _extract_sub_sectors(root: str, soup: BeautifulSoup):

data_sub_sectors = []

try:

sub_sectors = soup.find("div", {"class": "donnees"}).find_all('a', href=True)

except AttributeError:

sub_sectors = soup.find("div", {"class": "listeEntreprises"}).find_all('a', href=True)

for sub_sector in sub_sectors:

data_sub_sectors.append({

'href': sub_sector['href'],

'name': sub_sector.text,

'url': f"{root}{sub_sector['href']}"

})

return data_sub_sectors

def _extract_sub_sector_rankings(root, soup: BeautifulSoup):

data_sub_sectors_rankings = []

try:

entries = soup.find('div', {'class': 'donnees'}).find_all('tr')

except AttributeError:

logging.info(f'Failed extracting: {root}')

entries = []

for entry in entries:

data_sub_sectors_rankings.append({

'rank': entry.find('td').text,

'name': entry.find('a').text

})

return data_sub_sectors_rankings

if __name__ == '__main__':

url = 'https://www.bilansgratuits.fr/'

result = requests.get(url)

soup = BeautifulSoup(result.text, 'html.parser')

# Obtain all sector data.

data = get_main_links(url, soup)

pprint(data)

# Obtain all sub sectors

data = get_sub_sectors(url, data)

pprint(data)

df = pd.DataFrame(data)

print(df.columns)

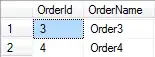

print(df.head())

print(df.iloc[0])

print(df.iloc[1])

df.to_csv('dftest.csv', sep=';', index=False, encoding='utf_8_sig')

Output

| names | Secteur | Sous-Secteur | Rankings |
|---|---|---|---|
| LIMAGRAIN EUROPE (63360) | A - Agriculture | 0111Z - Culture de céréales (à l'exception du riz), de légumineuses et de graines oléagineuses | 1 |
| LIMAGRAIN (63360) | A - Agriculture | 0111Z - Culture de céréales (à l'exception du riz), de légumineuses et de graines oléagineuses | 2 |
| TOP SEMENCE (26160) | A - Agriculture | 0111Z - Culture de céréales (à l'exception du riz), de légumineuses et de graines oléagineuses | 3 |
| CTRE SEMENCE UNION COOPERATIVE AGRICOLE (37310) | A - Agriculture | 0111Z - Culture de céréales (à l'exception du riz), de légumineuses et de graines oléagineuses | 4 |
| SEMENCES DU SUD (11400) | A - Agriculture | 0111Z - Culture de céréales (à l'exception du riz), de légumineuses et de graines oléagineuses | 5 |
| KWS MOMONT (59246) | A - Agriculture | 0111Z - Culture de céréales (à l'exception du riz), de légumineuses et de graines oléagineuses | 6 |
| TECHNISEM (49160) | A - Agriculture | 0111Z - Culture de céréales (à l'exception du riz), de légumineuses et de graines oléagineuses | 7 |
| AGRI-OBTENTIONS (78280) | A - Agriculture | 0111Z - Culture de céréales (à l'exception du riz), de légumineuses et de graines oléagineuses | 8 |
| LS PRODUCTION (75116) | A - Agriculture | 0111Z - Culture de céréales (à l'exception du riz), de légumineuses et de graines oléagineuses | 9 |
| SAS VALFRANCE SEMENCES (60300) | A - Agriculture | 0111Z - Culture de céréales (à l'exception du riz), de légumineuses et de graines oléagineuses | 10 |
| DURANCE HYBRIDES (13610) | A - Agriculture | 0111Z - Culture de céréales (à l'exception du riz), de légumineuses et de graines oléagineuses | 11 |
| STRUBE FRANCE (60190) | A - Agriculture | 0111Z - Culture de céréales (à l'exception du riz), de légumineuses et de graines oléagineuses | 12 |
| ID GRAIN (31330) | A - Agriculture | 0111Z - Culture de céréales (à l'exception du riz), de légumineuses et de graines oléagineuses | 13 |
| SAGA VEGETAL (33121) | A - Agriculture | 0111Z - Culture de céréales (à l'exception du riz), de légumineuses et de graines oléagineuses | 14 |
| SOC COOP AGRICOLE VALLEE RHONE VALGRAIN (26740) | A - Agriculture | 0111Z - Culture de céréales (à l'exception du riz), de légumineuses et de graines oléagineuses | 15 |
| ALLIX SARL (33127) | A - Agriculture | 0111Z - Culture de céréales (à l'exception du riz), de légumineuses et de graines oléagineuses | 16 |
| PAMPROEUF (79800) | A - Agriculture | 0111Z - Culture de céréales (à l'exception du riz), de légumineuses et de graines oléagineuses | 17 |
| PERDIGUIER FOURRAGES (84310) | A - Agriculture | 0111Z - Culture de céréales (à l'exception du riz), de légumineuses et de graines oléagineuses | 18 |
| PANAM FRANCE (31340) | A - Agriculture | 0111Z - Culture de céréales (à l'exception du riz), de légumineuses et de graines oléagineuses | 19 |
| HENG SIENG (57260) | A - Agriculture | 0111Z - Culture de céréales (à l'exception du riz), de légumineuses et de graines oléagineuses | 20 |
| HM.CLAUSE (26800) | A - Agriculture | 0113Z - Culture de légumes, de melons, de racines et de tubercules | 1 |
| VILMORIN-MIKADO (49250) | A - Agriculture | 0113Z - Culture de légumes, de melons, de racines et de tubercules | 2 |
| SARL FERME DE LA MOTTE (41370) | A - Agriculture | 0113Z - Culture de légumes, de melons, de racines et de tubercules | 3 |
| RIJK ZWAAN FRANCE (30390) | A - Agriculture | 0113Z - Culture de légumes, de melons, de racines et de tubercules | 4 |
| LE JARDIN DE RABELAIS (37420) | A - Agriculture | 0113Z - Culture de légumes, de melons, de racines et de tubercules | 5 |
| RENAUD & FILS SARL (17800) | A - Agriculture | 0113Z - Culture de légumes, de melons, de racines et de tubercules | 6 |

Edit

To put `No classify` in the rank and name fields for sub-sectors without a ranking table, you can change `_extract_sub_sector_rankings` to:

```python
def _extract_sub_sector_rankings(root, soup: BeautifulSoup):
    data_sub_sectors_rankings = []

    try:
        entries = soup.find('div', {'class': 'donnees'}).find_all('tr')
    except AttributeError:
        # No ranking table on this page: report it and return a placeholder row
        print(f"\rFailed extracting: {root}")
        return [{'rank': 'No classify', 'name': 'No classify'}]

    for entry in entries:
        data_sub_sectors_rankings.append({
            'rank': entry.find('td').text,
            'name': entry.find('a').text
        })

    return data_sub_sectors_rankings
```

Previously I used `logging.info`, but the default logging level is WARNING, so INFO messages never show up. Had you used `logging.warning` instead, you would have seen all the links that failed. In the original answer, those sub-sectors were simply skipped instead of getting a `No classify` row.

If you would like a different rank or name for these rows, adjust the return value.
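To see the logging-level point in isolation: the root logger's default threshold is WARNING, so `logging.info` calls are dropped unless you lower it, for example with `logging.basicConfig(level=logging.INFO)` at the top of the script. The sketch below makes the effect visible by logging into a string buffer. `make_logger`, the logger name `'bilans_scraper'` and the `url-1`/`url-2` values are hypothetical placeholders:

```python
import io
import logging


def make_logger(stream, level=logging.WARNING):
    """Hypothetical helper: a named logger that writes to `stream` at `level`."""
    logger = logging.getLogger('bilans_scraper')
    logger.handlers.clear()  # avoid duplicate handlers when called twice
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter('%(levelname)s: %(message)s'))
    logger.addHandler(handler)
    logger.setLevel(level)
    logger.propagate = False  # keep the demo away from the root logger
    return logger


# At WARNING (the logging default) INFO messages are dropped.
quiet = io.StringIO()
log = make_logger(quiet)
log.info('Failed extracting: %s', 'url-1')
log.warning('Failed extracting: %s', 'url-2')
print(repr(quiet.getvalue()))

# Lowering the level to INFO makes the same info() call visible.
verbose = io.StringIO()
log = make_logger(verbose, logging.INFO)
log.info('Failed extracting: %s', 'url-1')
print(repr(verbose.getvalue()))
```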