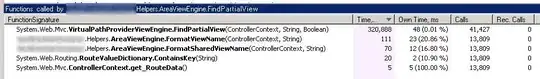

I'm trying to get just one line of this out. So I was hoping when I ran pytesseract, I'd get useable data out.

Instead, I'm getting strings like 'Ce eet il ae oe a on) os\n\nooo eo oo oo oom om om om)\n\n[OO COCO ORR OW OR PRP ODWWG\n\neyo fe) Fee ote) = = - = = eo me-e-)\n\n(Ss: oo ~7~oO 0 0\n\neB\n\n© te O fa ©\n\nOORFONONWR OW DFW NN\n\nVaso\nVES -5)\n1866\nnny\n1625\n1368\nLt\n1070\n898\n838\nwhey)\nom\na\nRie)\n15\n\nny,\n\n=ARAM= gksvlrwOlf\nDarth_Zipzap\naE a\njohnny478423\n=CNAPG _920831993\nOLOLUCTIIN AG\nRivDecartes\nfleadog406\nFormula13\n\nxL LongDubber\nDankdudledan\n_Trix_1740\n\nLUT engl)\n\n=MOPB= JP_Akatonbo\nPlutoh71689\nMakinHerSquirt\n\x0c'

I tried grey-scaling it to no avail. I thought given the sort of discrete columns here I'd be able to just split the string on spaces and newlines, but...no.

Any pointers in the right direction would be appreciated.

In previous experiments, I had a little trouble because of images like the little controller icons, and I was just able to detect and mask those before passing the image to tesseract. But in this image, tesseract is failing to identify the numbers in the columns pretty consistently.