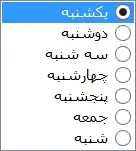

I am trying a regression problem with the following dataset (sinusoidal curve) of size 500

First, I tried with 2 dense layer with 10 units each

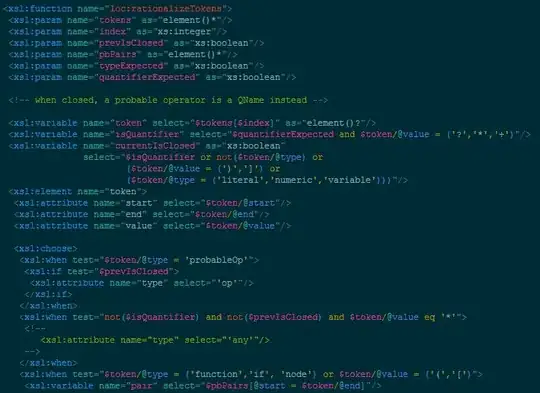

model = tf.keras.Sequential([

tf.keras.layers.Dense(10, activation='tanh'),

tf.keras.layers.Dense(10, activation='tanh'),

tf.keras.layers.Dense(1),

tfp.layers.DistributionLambda(lambda t: tfd.Normal(loc=t, scale=1.))

])

Trained with negative log likelihood loss as follows

model.compile(optimizer=tf.optimizers.Adam(learning_rate=0.01), loss=neg_log_likelihood)

model.fit(x, y, epochs=50)

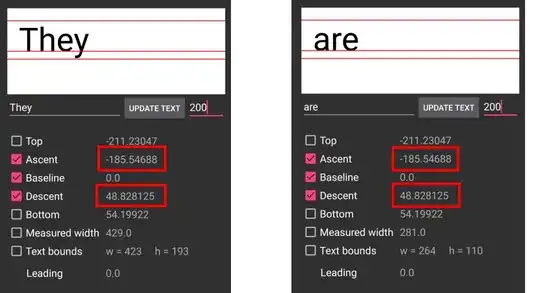

Next, I tried similar environment with DenseVariational

model = tf.keras.Sequential([

tfp.layers.DenseVariational(

10, activation='tanh', make_posterior_fn=posterior,

make_prior_fn=prior, kl_weight=1/N, kl_use_exact=True),

tfp.layers.DenseVariational(

10, activation='tanh', make_posterior_fn=posterior,

make_prior_fn=prior, kl_weight=1/N, kl_use_exact=True),

tfp.layers.DenseVariational(

1, activation='tanh', make_posterior_fn=posterior,

make_prior_fn=prior, kl_weight=1/N, kl_use_exact=True),

tfp.layers.DistributionLambda(lambda t: tfd.Normal(loc=t, scale=1.))

])

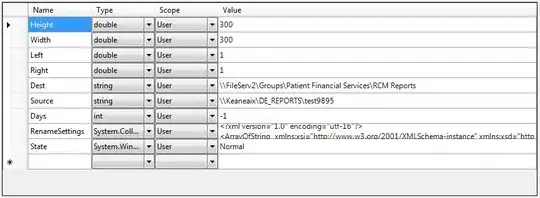

As the number of parameters approximately double with this, I have tried increasing dataset size and/or epoch size up to 100 times with no success. Results are usually as follows.

My questions is how do I get comparable results as that of Dense layer with DenseVariational? I have also read that it can be sensitive to initial values. Here is the link to full code. Any suggestions are welcome.