I have defined a function `get_content` to crawl data from https://www.investopedia.com/. Calling `get_content('https://www.investopedia.com/terms/1/0x-protocol.asp')` on its own works. However, when I run it over a list of links with `multiprocessing.Pool`, the process seems to run forever on my Windows laptop. The same code runs fine on Google Colab and on Linux laptops.
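One platform difference that may matter here: Windows and Linux start `multiprocessing` workers differently. On Linux the default start method has long been `fork`, where each child inherits the parent's memory, including functions defined in a notebook. On Windows the only available method is `spawn`, where each child starts a fresh interpreter and has to re-import whatever `Pool.map` refers to by name. A minimal sketch to check which method a given environment uses (the helper name `describe_start_method` is hypothetical):

```python
import multiprocessing
import sys


def describe_start_method() -> str:
    """Return the start method multiprocessing will use in this interpreter."""
    # With no explicit set_start_method() call this is the platform default:
    # "spawn" on Windows and macOS, "fork" on Linux up to Python 3.13
    # (Python 3.14 switched the Linux default to "forkserver").
    return multiprocessing.get_start_method()


if __name__ == "__main__":
    # e.g. "win32 spawn" on Windows, "linux fork" on an older Colab runtime
    print(sys.platform, describe_start_method())
```

If this reports `spawn`, a function defined in a Jupyter cell exists only in the kernel's `__main__`, so the spawned children may be unable to find it. This is a likely, not confirmed, reason for the Windows-only behaviour.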

Could you please explain why my function does not work in this parallel setting?

```python
import requests
from bs4 import BeautifulSoup
from multiprocessing import dummy, freeze_support, Pool
import os

core = os.cpu_count()  # Number of logical processors for parallel computing

headers = {'User-Agent': 'Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:78.0) Gecko/20100101 Firefox/78.0'}

session = requests.Session()

links = ['https://www.investopedia.com/terms/1/0x-protocol.asp', 'https://www.investopedia.com/terms/1/1-10net30.asp']

############ Get content of a word

def get_content(l):
    r = session.get(l, headers=headers)
    soup = BeautifulSoup(r.content, 'html.parser')
    entry_name = soup.select_one('#article-heading_3-0').contents[0]
    print(entry_name)

############ Parallel computing

if __name__ == "__main__":
    freeze_support()
    P_d = dummy.Pool(processes=core)
    P = Pool(processes=core)
    #content_list = P_d.map(get_content, links)
    content_list = P.map(get_content, links)
```
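Because each task mostly waits on an HTTP response, threads are usually enough for this workload, and a thread pool avoids the `spawn` re-import issue altogether: `multiprocessing.dummy.Pool` (already imported as `dummy` in the code above) runs its workers as threads inside the same process, so a notebook-defined function and its output stay in the kernel. A minimal sketch under those assumptions; `crawl_all` is a hypothetical helper, and `fetch` stands for a variant of `get_content` that `return`s `entry_name` instead of printing it:

```python
from multiprocessing import dummy
from typing import Callable, List, Optional


def crawl_all(
    links: List[str],
    fetch: Callable[[str], Optional[str]],
    workers: int = 8,
) -> List[Optional[str]]:
    """Apply `fetch` to every link on a thread pool; results keep input order."""
    # Threads share the parent's memory, so `fetch` is never pickled or
    # re-imported by a child process, which is why this works in a Jupyter cell.
    with dummy.Pool(processes=workers) as pool:
        # map() blocks until every link has been processed.
        return pool.map(fetch, links)
```

Usage would then look like `names = crawl_all(links, get_entry_name)` followed by `print(names)` in the parent, where `get_entry_name` is the hypothetical returning variant. Note that requests does not formally guarantee that a shared `Session` is thread-safe, so a cautious version creates the session inside the worker or keeps one per thread.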

Update 1: I run this code in JupyterLab from the Anaconda distribution. As the screenshot below shows, the kernel status stays busy the whole time.

Update 2: In Spyder the code does finish executing, but it still produces no output.

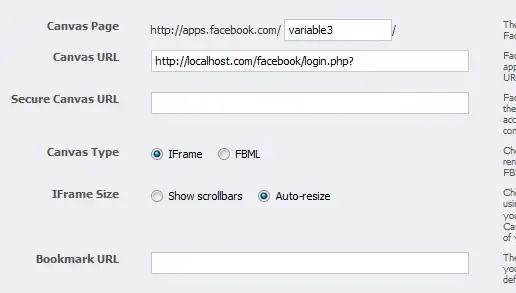
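If a pool worker dies before sending back a result (for instance, because a spawned child cannot find `get_content`), `Pool.map` can wait indefinitely, which would be consistent with a kernel that stays busy; the worker's own traceback typically goes to the terminal that launched Jupyter rather than to the notebook. For debugging, `map_async(...).get(timeout=...)` turns a silent hang into a `multiprocessing.TimeoutError`. The sketch below uses a thread pool only so that it runs anywhere; `map_with_timeout`, `slow_square`, and the timeout values are hypothetical:

```python
import time
from multiprocessing import TimeoutError as PoolTimeoutError
from multiprocessing import dummy
from typing import Callable, List, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def map_with_timeout(func: Callable[[T], R], items: List[T],
                     timeout: float, workers: int = 4) -> List[R]:
    """Like Pool.map, but raise instead of blocking forever."""
    with dummy.Pool(processes=workers) as pool:
        async_result = pool.map_async(func, items)
        # get() raises multiprocessing.TimeoutError if not all results are
        # ready within `timeout` seconds; leaving the `with` block then
        # calls terminate() on the pool.
        return async_result.get(timeout=timeout)


def slow_square(x: int) -> int:
    time.sleep(0.5)  # stands in for a worker that never answers in time
    return x * x


if __name__ == "__main__":
    print(map_with_timeout(lambda x: x * x, [1, 2, 3], timeout=5))  # [1, 4, 9]
    try:
        map_with_timeout(slow_square, [1, 2], timeout=0.1)
    except PoolTimeoutError:
        print("no result within 0.1 s; check the worker logs")
```

With a process pool the same `get(timeout=...)` call applies unchanged; only the pool constructor differs.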

Update 3: The code runs perfectly fine in Colab: