I'm following the convolutional example from this tutorial: https://blog.keras.io/building-autoencoders-in-keras.html. I don't understand why, when I change the loss function from binary_crossentropy to MSE, training only works on fashion_mnist.

With mnist, the loss drops after the first epoch and then stops changing. After training, the predicted images on the test set are entirely black. With fashion_mnist, it works perfectly.

    import keras
    from keras import layers
    import keras.backend as K

    input_img = keras.Input(shape=(28, 28, 1))

    x = layers.Conv2D(16, (3, 3), activation='relu', padding='same')(input_img)
    x = layers.MaxPooling2D((2, 2), padding='same')(x)
    x = layers.Conv2D(8, (3, 3), activation='relu', padding='same')(x)
    x = layers.MaxPooling2D((2, 2), padding='same')(x)
    x = layers.Conv2D(8, (3, 3), activation='relu', padding='same')(x)
    encoded = layers.MaxPooling2D((2, 2), padding='same')(x)

    # at this point the representation is (4, 4, 8) i.e. 128-dimensional

    x = layers.Conv2D(8, (3, 3), activation='relu', padding='same')(encoded)
    x = layers.UpSampling2D((2, 2))(x)
    x = layers.Conv2D(8, (3, 3), activation='relu', padding='same')(x)
    x = layers.UpSampling2D((2, 2))(x)
    x = layers.Conv2D(16, (3, 3), activation='relu')(x)
    x = layers.UpSampling2D((2, 2))(x)
    decoded = layers.Conv2D(1, (3, 3), activation='sigmoid', padding='same')(x)

    autoencoder = keras.Model(input_img, decoded)
    autoencoder.compile(optimizer='adam', loss='mse') # binary_crossentropy

    from keras.datasets import mnist
    from keras.datasets import fashion_mnist
    import numpy as np

    (x_train, _), (x_test, _) = mnist.load_data() # fashion_mnist.load_data()

    x_train = x_train.astype('float32') / 255.
    x_test = x_test.astype('float32') / 255.
    x_train = np.reshape(x_train, (len(x_train), 28, 28, 1))
    x_test = np.reshape(x_test, (len(x_test), 28, 28, 1))

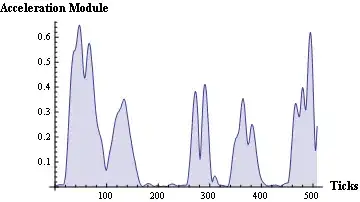

    history = autoencoder.fit(x_train, x_train,
                              epochs=50,
                              batch_size=128,
                              shuffle=True,
                              validation_data=(x_test, x_test))
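One way to check whether the stalled loss matches the all-black output described above is to compare it with the MSE of a constant black prediction. This is only a minimal sketch, assuming NumPy alone: `constant_prediction_mse` is a hypothetical helper name, and the `demo` array is synthetic stand-in data, not MNIST.

```python
import numpy as np


def constant_prediction_mse(images, value=0.0):
    """MSE obtained by predicting the same constant `value` for every pixel."""
    images = np.asarray(images, dtype=np.float64)
    return float(np.mean((images - value) ** 2))


# Synthetic stand-in for a batch of mostly dark images scaled to [0, 1]
# (hypothetical data, used only to exercise the helper).
demo = np.zeros((2, 28, 28, 1), dtype=np.float32)
demo[:, 10:18, 10:18, :] = 1.0  # one bright 8x8 square per image

# Baseline 1: the network outputs black (0.0) everywhere.
black_mse = constant_prediction_mse(demo, value=0.0)
# Baseline 2: the network outputs the mean pixel intensity everywhere.
mean_mse = constant_prediction_mse(demo, value=float(demo.mean()))

print(f"all-black baseline MSE:  {black_mse:.4f}")
print(f"mean-value baseline MSE: {mean_mse:.4f}")
```

With the arrays from the code above, `constant_prediction_mse(x_test)` gives a number to compare against the plateaued `val_loss`. If the two are nearly equal, that would suggest the network has settled on outputting values close to zero for every pixel. Running the same check on the fashion_mnist test set shows how the baseline differs between the two datasets.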