In this code snippet from the TensorFlow tutorial "Basic text classification":

    model = tf.keras.Sequential([
        layers.Embedding(max_features + 1, embedding_dim),
        layers.Dropout(0.2),
        layers.GlobalAveragePooling1D(),
        layers.Dropout(0.2),
        layers.Dense(1)])

As far as I understand, max_features is the size of the vocabulary (with index 0 reserved for padding and index 1 for OOV).
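To make that index layout concrete, here is a minimal plain-Python sketch of how I picture the vectorization step. It is not the tutorial's code: build_vocab and vectorize are hypothetical helpers, the three sample sentences are made up, and the sketch assumes the vocabulary is capped at max_features entries including the two reserved indices.

```python
from collections import Counter


def build_vocab(texts, max_tokens):
    """Hypothetical helper: cap the vocabulary at max_tokens entries,
    with index 0 reserved for padding and index 1 for OOV."""
    counts = Counter(word for text in texts for word in text.split())
    # Two slots are reserved, so only max_tokens - 2 real words fit.
    kept = [word for word, _ in counts.most_common(max_tokens - 2)]
    return {word: index + 2 for index, word in enumerate(kept)}


def vectorize(text, vocab):
    # Words outside the vocabulary map to the OOV index 1.
    return [vocab.get(word, 1) for word in text.split()]


max_features = 6  # toy value, far smaller than in the tutorial
texts = ["the movie was great", "the movie was bad", "the plot was thin"]

vocab = build_vocab(texts, max_features)
ids = [vectorize(text, vocab) for text in texts]
largest_index = max(max(row) for row in ids)

print("largest index produced:", largest_index)
print("max_features - 1:", max_features - 1)
```

Under this assumption every index falls in the range 0 to max_features - 1, so an embedding table with max_features rows would already cover all of them. Whether the real layer in the tutorial behaves the same way is part of what I'm unsure about.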

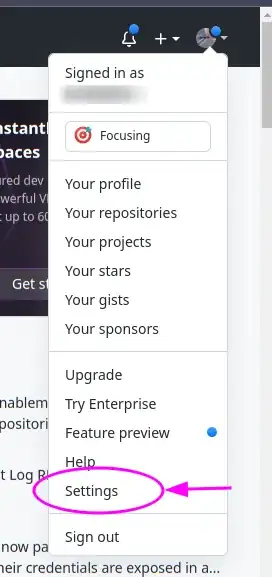

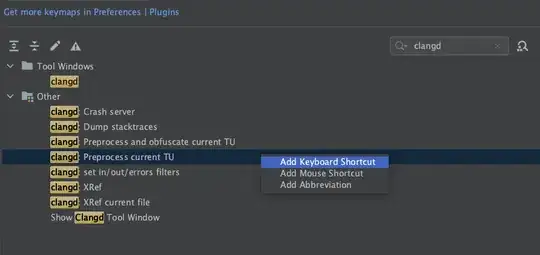

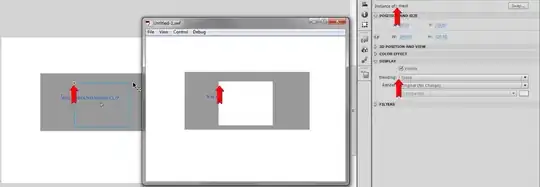

I also ran an experiment with layers.Embedding(max_features, embedding_dim), and the tutorial still runs successfully (see the screenshots).

So why do we need input_dim=max_features + 1 here?