Suppose I have a function M2S that takes a (small) matrix as input and outputs a scalar, and I want to apply M2S to each block of a (large) matrix. I would like an efficient solution with NumPy.
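A common NumPy idiom for this kind of problem is to view the image as a 4D array of blocks using reshape and swapaxes, so that each block can be addressed as `blocks[i, j]` without copying. This is a minimal sketch that assumes both image dimensions are exact multiples of the block size (true for 480 x 640 with `w_block = 10`). `block_view` is a hypothetical helper name.

```python
import numpy as np

def block_view(img, w):
    """Return a (rows, cols, w, w) view of the non-overlapping w x w blocks of img.

    Assumes img.shape is an exact multiple of w in both dimensions.
    """
    h, wd = img.shape
    assert h % w == 0 and wd % w == 0, "image size must be a multiple of w"
    # Split each axis into (number of blocks, block size), then move the two
    # block-size axes to the end so that blocks[i, j] is the (i, j)-th block.
    return img.reshape(h // w, w, wd // w, w).swapaxes(1, 2)

img = np.arange(24).reshape(4, 6)
blocks = block_view(img, 2)
print(blocks.shape)  # (2, 3, 2, 2)
```

Because this is a view, an M2S written in terms of NumPy reductions over `axis=(-2, -1)` can then process every block in a single call.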

A solution I tested is:

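```python
import numpy as np

img_depth = np.random.randint(0, 5, (480, 640))

w_block = 10

def M2S(img_block):
    # Mean of the non-zero (valid) pixels in the block; None if there are none.
    img_valid = img_block[img_block != 0]
    if len(img_valid) == 0:
        return None
    return np.mean(img_valid)

# One entry per block: [column of block center, row of block center, M2S(block)]
out1 = [[uu + w_block/2, vv + w_block/2, M2S(img_depth[vv:vv+w_block, uu:uu+w_block])]
        for vv in range(0, img_depth.shape[0], w_block)
        for uu in range(0, img_depth.shape[1], w_block)]
```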

(Note that I serialize the result into a list of [column of block center, row of block center, M2S output] because that is the format I need.)
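For the serialization step on its own, the same [column, row, value] rows can be built without a Python loop once the per-block results are in a 2D array. This is a minimal sketch: `values` is a random placeholder standing in for the per-block M2S results, and the 48 x 64 grid assumes a 480 x 640 image with `w_block = 10`.

```python
import numpy as np

w_block = 10
n_rows, n_cols = 48, 64                   # blocks vertically / horizontally (480/10, 640/10)
values = np.random.rand(n_rows, n_cols)   # placeholder for the per-block M2S results

# Block-center coordinates, matching vv + w_block/2 and uu + w_block/2 in the loop.
vv, uu = np.meshgrid(np.arange(n_rows) * w_block + w_block / 2,
                     np.arange(n_cols) * w_block + w_block / 2,
                     indexing="ij")
# One row per block, in the same row-major order as the nested list comprehension.
out = np.column_stack((uu.ravel(), vv.ravel(), values.ravel()))
```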

The list comprehension that builds out1 took 43 ms in my environment.

I also tried vectorizing it with np.vectorize, but there was no speed improvement:

```python
indices = np.indices(img_depth.shape)[:, 0:img_depth.shape[0]:w_block, 0:img_depth.shape[1]:w_block]

vM2S = np.vectorize(lambda v, u: M2S(img_depth[v:v+w_block, u:u+w_block]))

out2 = np.dstack((indices[1] + w_block/2, indices[0] + w_block/2, vM2S(indices[0], indices[1]))).reshape(-1, 3)
```
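The NumPy documentation describes np.vectorize as provided primarily for convenience rather than performance; it is essentially a Python for loop, so no speedup is expected from it. One alternative is a strided view of the blocks via `sliding_window_view` (NumPy 1.20 or later), keeping every `w_block`-th window in each direction, followed by ordinary NumPy reductions over the last two axes. In this sketch, `np.count_nonzero` is only an example reduction (the number of valid pixels per block), not the full M2S. If the image size is not a multiple of `w_block`, this view drops the incomplete edge blocks.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

img_depth = np.random.randint(0, 5, (480, 640))
w_block = 10

# All w x w windows, then every w-th one in each direction gives the
# non-overlapping blocks: shape (48, 64, 10, 10). This is a view, not a copy.
blocks = sliding_window_view(img_depth, (w_block, w_block))[::w_block, ::w_block]

# Example reduction over each block: number of non-zero (valid) pixels.
n_valid = np.count_nonzero(blocks, axis=(-2, -1))
```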

Are there any better ideas for improving the computation speed?
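For this particular M2S (the mean of the non-zero pixels), one possible fully vectorized approach splits the mean into a per-block sum and a per-block count of non-zero pixels, both of which are plain reductions. This sketch assumes both image dimensions are multiples of the block size. `block_nonzero_mean` is a hypothetical name, and empty blocks get NaN instead of None.

```python
import numpy as np

def block_nonzero_mean(img, w):
    """Mean of the non-zero pixels in each non-overlapping w x w block.

    Hypothetical vectorized replacement for calling M2S on every block.
    Assumes both image dimensions are multiples of w. Blocks without any
    non-zero pixel get NaN instead of None.
    """
    h, wd = img.shape
    # Axes: (block row, row within block, block column, column within block)
    blocks = img.reshape(h // w, w, wd // w, w)
    # Zeros add nothing to a sum, so summing every pixel equals summing the valid ones.
    sums = blocks.sum(axis=(1, 3), dtype=np.float64)
    counts = np.count_nonzero(blocks, axis=(1, 3))
    means = np.full(sums.shape, np.nan)
    np.divide(sums, counts, out=means, where=counts > 0)
    return means

img_depth = np.random.randint(0, 5, (480, 640))
means = block_nonzero_mean(img_depth, 10)  # shape (48, 64)
```

This only works because this M2S decomposes into a sum and a count. An arbitrary M2S would need its own vectorized formulation, or it would still require a per-block Python call.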

(Edited)

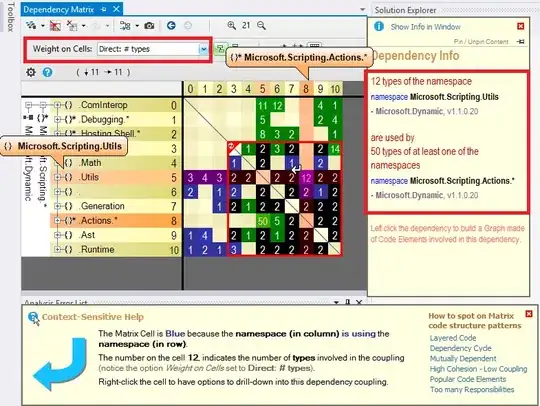

I draw a sketch to explain the above process:

The second step (serialization) is fast, so my question is mainly about the first step: applying M2S to every block.
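For the first step, when the image size is not a multiple of the block size, one option is `np.add.reduceat`. It sums consecutive slices that start at given indices, so a trailing partial block is included, just as `range(0, n, w_block)` slicing includes it in the original loop. This is a minimal sketch for the non-zero-mean M2S only. `block_nonzero_mean_reduceat` is a hypothetical name, and empty blocks get NaN.

```python
import numpy as np

def block_nonzero_mean_reduceat(img, w):
    """Mean of the non-zero pixels per w x w block, including partial edge blocks.

    Blocks with no non-zero pixels get NaN instead of None.
    """
    rows = np.arange(0, img.shape[0], w)  # start index of each block row
    cols = np.arange(0, img.shape[1], w)  # start index of each block column

    def block_sum(a):
        # Sum within each group of rows, then within each group of columns.
        return np.add.reduceat(np.add.reduceat(a, rows, axis=0), cols, axis=1)

    sums = block_sum(img.astype(np.float64))
    counts = block_sum((img != 0).astype(np.int64))
    means = np.full(sums.shape, np.nan)
    np.divide(sums, counts, out=means, where=counts > 0)
    return means

img = np.random.randint(0, 5, (25, 33))  # deliberately not multiples of 10
means = block_nonzero_mean_reduceat(img, 10)  # shape (3, 4)
```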