Edit:

Can map not also be used to parallelize I/O?

Indeed, you can read images and labels from a directory with the map function. Assume this case:

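```python
import tensorflow as tf

my_path = "images/*/*.jpg"  # hypothetical glob pattern: one sub-directory per class

list_ds = tf.data.Dataset.list_files(my_path)

def process_path(path):
    # Hypothetical labelling scheme: the parent directory name is the label
    label = tf.strings.split(path, "/")[-2]
    # Images need to be decoded after the raw bytes are read
    image = tf.io.decode_jpeg(tf.io.read_file(path), channels=3)
    return image, label

new_ds = list_ds.map(process_path, num_parallel_calls=tf.data.experimental.AUTOTUNE)
```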

Note that this map is now multi-threaded because num_parallel_calls has been set.
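To make the idea concrete without TensorFlow, here is a minimal pure-Python analogy. It is not how tf.data is implemented: a thread pool reads several files at once so the waits on disk overlap, and `Executor.map` returns results in input order, much as tf.data's parallel `map` keeps element order by default. The helper names, file names, and the 4-worker pool are assumptions for illustration.

```python
import os
import tempfile
from concurrent.futures import ThreadPoolExecutor


def read_file(path):
    # I/O-bound step, playing the role of tf.io.read_file in process_path
    with open(path, "rb") as f:
        return f.read()


def parallel_map(func, items, num_parallel_calls):
    # Up to num_parallel_calls threads run func concurrently;
    # Executor.map still yields the results in the order of `items`
    with ThreadPoolExecutor(max_workers=num_parallel_calls) as pool:
        return list(pool.map(func, items))


with tempfile.TemporaryDirectory() as tmp:
    paths = []
    for i in range(8):
        path = os.path.join(tmp, f"img_{i}.bin")  # hypothetical file names
        with open(path, "wb") as f:
            f.write(bytes([i]) * 4)
        paths.append(path)
    contents = parallel_map(read_file, paths, num_parallel_calls=4)
```

Because reading is I/O-bound, threads help even though Python's GIL serializes pure-Python computation.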

The advantage of the interleave() function:

- Suppose you have a dataset.
- With cycle_length you can take that many elements out of the dataset at once. For example, with cycle_length=5, 5 elements are taken from the dataset and map_func is applied to each of them, turning each one into a nested dataset.
- After that, elements are fetched from these newly generated datasets in turn, block_length elements at a time from each.
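The cycle/block behaviour described in the list above can be modelled in plain Python. This sketch reproduces only the deterministic output order (no threads, no TensorFlow); the function name `interleave` and the use of lists as stand-in "datasets" are illustrative assumptions. The usage line mirrors the `cycle_length=2, block_length=4` example in the `tf.data.Dataset.interleave` documentation.

```python
def interleave(inputs, map_func, cycle_length, block_length=1):
    """Yield elements in the order a deterministic tf.data interleave would."""
    inputs = iter(inputs)
    slots = [None] * cycle_length  # one open nested iterator per cycle position
    input_done = False
    cycle_index = 0
    while True:
        # An empty slot is refilled with the next input element's nested dataset
        if slots[cycle_index] is None and not input_done:
            try:
                element = next(inputs)
            except StopIteration:
                input_done = True
            else:
                slots[cycle_index] = iter(map_func(element))
        if input_done and all(slot is None for slot in slots):
            return
        nested = slots[cycle_index]
        if nested is not None:
            # Take up to block_length consecutive elements from this slot
            for _ in range(block_length):
                try:
                    yield next(nested)
                except StopIteration:
                    slots[cycle_index] = None  # exhausted: free the slot
                    break
        cycle_index = (cycle_index + 1) % cycle_length


# range(1, 6), each element mapped to a nested dataset of 6 copies of itself
out = list(interleave(range(1, 6), lambda x: [x] * 6, cycle_length=2, block_length=4))
print(out)
```

Two nested datasets are open at any time (cycle_length=2), and each visit to a slot yields at most 4 elements (block_length=4).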

In other words, the interleave() function can iterate through your dataset while applying map_func(). It can also work with many datasets or data files at the same time. For example, from the docs:

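```python
import tensorflow as tf

# `dataset` yields file names and `parse_fn` parses one line; both are defined elsewhere
dataset = dataset.interleave(
    lambda x: tf.data.TextLineDataset(x).map(parse_fn, num_parallel_calls=1),
    cycle_length=4, block_length=16)
```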

However, is there any benefit of using interleave over map in a scenario such as the one below?

Both interleave() and map() seem a bit similar, but their use cases are not the same. If you want to read a dataset while applying some mapping, interleave() is your superhero. Your images may need to be decoded while being read; reading everything first and then decoding may be inefficient when working with large datasets. In the code snippet you gave, AFAIK, the one with tf.data.TFRecordDataset should be faster.

TL;DR: interleave() parallelizes the data-loading step by interleaving the I/O operations that read the files.

map() will apply the data pre-processing to the contents of the datasets.

So you can do something like:

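```python
import tensorflow as tf

# Each element x of train_file is assumed to be a file pattern (e.g. one per directory);
# func is your per-file preprocessing function, defined elsewhere
ds = train_file.interleave(
    lambda x: tf.data.Dataset.list_files(x).map(
        func, num_parallel_calls=tf.data.experimental.AUTOTUNE),
    num_parallel_calls=tf.data.experimental.AUTOTUNE)
```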

tf.data.experimental.AUTOTUNE lets tf.data decide the level of parallelism, taking into account buffer sizes, CPU power, and I/O operations. In other words, AUTOTUNE sets the level dynamically at runtime.

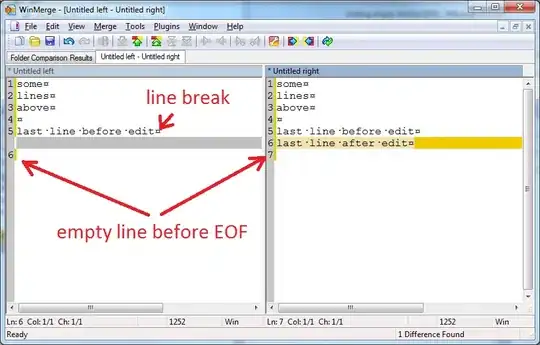

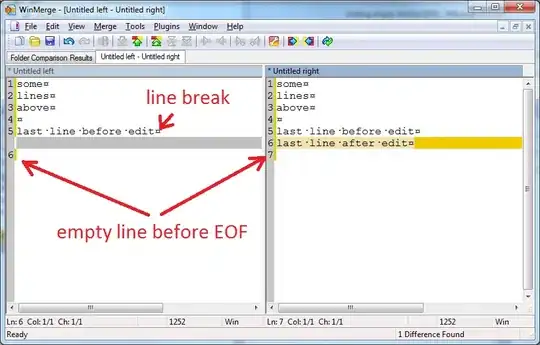

The num_parallel_calls argument spawns multiple threads so that multiple cores can work on the tasks in parallel. Because interleave also accepts num_parallel_calls, you can use it to load multiple datasets in parallel, reducing the time spent waiting for the files to be opened. The image is taken from the docs.

In the image there are 4 overlapping datasets. That number is determined by the cycle_length argument, so in this case cycle_length = 4.
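The waiting-time argument above can be illustrated with a simplified pure-Python model, not TensorFlow's implementation: a thread pool opens 4 sources at once, so their open latencies overlap instead of adding up, and records are then taken one from each source in turn, as interleave does with `block_length=1`. The 0.3 s open delay, the source names, and the simplification that all sources fit in one cycle (cycle_length equal to the number of sources) are hypothetical.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from itertools import zip_longest

_MISSING = object()  # sentinel marking "this source has run out"


def open_source(name):
    # Stand-in for opening a file on slow storage: hypothetical fixed latency
    time.sleep(0.3)
    return [f"{name}-{i}" for i in range(3)]


def parallel_interleave(names, num_parallel_calls):
    # Open up to num_parallel_calls sources concurrently so the waits overlap
    with ThreadPoolExecutor(max_workers=num_parallel_calls) as pool:
        sources = list(pool.map(open_source, names))
    # Round-robin: one record from each source per cycle (block_length=1)
    return [
        record
        for group in zip_longest(*sources, fillvalue=_MISSING)
        for record in group
        if record is not _MISSING
    ]


start = time.perf_counter()
records = parallel_interleave(["a", "b", "c", "d"], num_parallel_calls=4)
elapsed = time.perf_counter() - start
# The four 0.3 s opens overlap, so elapsed should be near 0.3 s rather than 1.2 s
```

Opening the sources one after another would cost the sum of the latencies; overlapping them costs roughly the largest one.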

FLAT_MAP: Maps a function across the dataset and flattens the result. Use it if you want to make sure the order stays the same. It does not take num_parallel_calls as an argument. Please refer to the docs for more.
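In plain Python, flat_map's semantics amount to `itertools.chain.from_iterable`: each nested dataset is drained completely before the next input element is mapped, which is why the order is preserved. This is a semantic sketch only; the `flat_map` helper below is hypothetical, not the tf.data method.

```python
from itertools import chain


def flat_map(inputs, map_func):
    # Map every input element to a nested sequence, then concatenate them
    # in input order; each nested sequence is fully consumed before the next
    return list(chain.from_iterable(map_func(x) for x in inputs))


# Each element x becomes the nested "dataset" [x, x * 10]
result = flat_map([1, 2, 3], lambda x: [x, x * 10])
print(result)  # [1, 10, 2, 20, 3, 30]
```

Since only one nested dataset is open at a time, there is nothing to run in parallel, which fits flat_map having no num_parallel_calls argument.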

MAP:

The map function executes the selected function on every element of the Dataset separately. Data transformations on large datasets can become expensive as you apply more and more operations, and the key point is that they take even longer if the CPU is not fully utilized. To address this, we can use the parallelism APIs:

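```python
import multiprocessing

num_of_cores = multiprocessing.cpu_count()  # number of available CPU cores

# `data` is a tf.data.Dataset and `function` the per-element transformation
mapped_data = data.map(function, num_parallel_calls=num_of_cores)
```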

For cycle_length=1, the documentation states that the outputs of interleave and flat_map are equal.

cycle_length --> The number of input elements that will be processed concurrently. When it is set to 1, the elements are processed one by one.

INTERLEAVE: Transformation operations like map can be parallelized.

With a parallel map, the CPU parallelizes the transformation step, but extracting the data from disk can still cause overhead.

Besides, once the raw bytes are read into memory, it may also be necessary to map a function over the data, such as decrypting it, which of course requires additional computation. To mitigate the impact of these data-extraction overheads, the extraction itself needs to be parallelized by interleaving the contents of each dataset.

So while reading the datasets, you want to maximize:

Source of image: deeplearning.ai