I am trying to crop a selected portion of an NSImage that is displayed in ProportionallyUpOrDown (AspectFill) mode. I draw the selection frame from the mouse-dragged event like this:

    class CropImageView: NSImageView {

        var startPoint: NSPoint!
        var shapeLayer: CAShapeLayer!
        var flagCheck = false
        var finalPoint: NSPoint!

        override init(frame frameRect: NSRect) {
            super.init(frame: frameRect)
        }

        required init?(coder: NSCoder) {
            super.init(coder: coder)
        }

        override func draw(_ dirtyRect: NSRect) {
            super.draw(dirtyRect)
        }

        override var image: NSImage? {
            set {
                self.layer = CALayer()
                self.layer?.contentsGravity = kCAGravityResizeAspectFill
                self.layer?.contents = newValue
                self.wantsLayer = true
                super.image = newValue
            }
            get {
                return super.image
            }
        }

        override func mouseDown(with event: NSEvent) {
            self.startPoint = self.convert(event.locationInWindow, from: nil)
            if self.shapeLayer != nil {
                self.shapeLayer.removeFromSuperlayer()
                self.shapeLayer = nil
            }
            self.flagCheck = true
            var pixelColor: NSColor = NSReadPixel(startPoint) ?? NSColor()
            shapeLayer = CAShapeLayer()
            shapeLayer.lineWidth = 1.0
            shapeLayer.fillColor = NSColor.clear.cgColor
            if pixelColor == NSColor.black {
                pixelColor = NSColor.color_white
            } else {
                pixelColor = NSColor.black
            }
            shapeLayer.strokeColor = pixelColor.cgColor
            shapeLayer.lineDashPattern = [1]
            self.layer?.addSublayer(shapeLayer)
            var dashAnimation = CABasicAnimation()
            dashAnimation = CABasicAnimation(keyPath: "lineDashPhase")
            dashAnimation.duration = 0.75
            dashAnimation.fromValue = 0.0
            dashAnimation.toValue = 15.0
            dashAnimation.repeatCount = 0.0
            shapeLayer.add(dashAnimation, forKey: "linePhase")
        }

        override func mouseDragged(with event: NSEvent) {
            let point: NSPoint = self.convert(event.locationInWindow, from: nil)
            var newPoint: CGPoint = self.startPoint
            let xDiff = point.x - self.startPoint.x
            let yDiff = point.y - self.startPoint.y
            let dist = min(abs(xDiff), abs(yDiff))
            newPoint.x += xDiff > 0 ? dist : -dist
            newPoint.y += yDiff > 0 ? dist : -dist
            let path = CGMutablePath()
            path.move(to: self.startPoint)
            path.addLine(to: NSPoint(x: self.startPoint.x, y: newPoint.y))
            path.addLine(to: newPoint)
            path.addLine(to: NSPoint(x: newPoint.x, y: self.startPoint.y))
            path.closeSubpath()
            self.shapeLayer.path = path
        }

        override func mouseUp(with event: NSEvent) {
            self.finalPoint = self.convert(event.locationInWindow, from: nil)
        }
    }

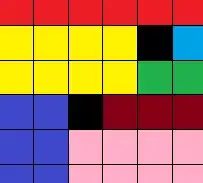

I selected this area, shown in the picture by the black dotted line.

My cropping logic is this:

    // resize Image Methods
    extension CropProfileView {

        func resizeImage(image: NSImage) -> Data {
            var scalingFactor: CGFloat = 0.0
            if image.size.width >= image.size.height {
                scalingFactor = image.size.width/cropImgView.size.width
            } else {
                scalingFactor = image.size.height/cropImgView.size.height
            }
            let width = (self.cropImgView.finalPoint.x - self.cropImgView.startPoint.x) * scalingFactor
            let height = (self.cropImgView.startPoint.y - self.cropImgView.finalPoint.y) * scalingFactor
            let xPos = ((image.size.width/2) - (cropImgView.bounds.midX - self.cropImgView.startPoint.x) * scalingFactor)
            let yPos = ((image.size.height/2) - (cropImgView.bounds.midY - (cropImgView.size.height - self.cropImgView.startPoint.y)) * scalingFactor)
            var croppedRect: NSRect = NSRect(x: xPos, y: yPos, width: width, height: height)
            let imageRef = image.cgImage(forProposedRect: &croppedRect, context: nil, hints: nil)
            guard let croppedImage = imageRef?.cropping(to: croppedRect) else {return Data()}
            let imageWithNewSize = NSImage(cgImage: croppedImage, size: NSSize(width: width, height: height))
            guard let data = imageWithNewSize.tiffRepresentation,
                  let rep = NSBitmapImageRep(data: data),
                  let imgData = rep.representation(using: .png, properties: [.compressionFactor: NSNumber(floatLiteral: 0.25)]) else {
                return imageWithNewSize.tiffRepresentation ?? Data()
            }
            return imgData
        }
    }

With this cropping logic I am getting this output:

I think the crop does not match the selected frame because the image is displayed as AspectFill. If you look at the output, the x position, width, and height are all off. Or perhaps I am not calculating these coordinates properly. Please let me know what I am calculating wrong.
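
To make the AspectFill suspicion concrete, here is a minimal sketch of how a selection in view coordinates might map to image coordinates. It is only an illustration under stated assumptions, not a verified fix: the function name `imageRect(forSelection:viewSize:imageSize:)` is hypothetical, the image is assumed to be scaled uniformly and centered so that it covers the view, and both coordinate spaces are assumed to share a bottom-left origin. The result is in image points, so any conversion to pixels or to a top-left origin before calling `cropping(to:)` is deliberately left out.

```swift
import Foundation

/// Hypothetical helper: maps a rectangle drawn in view coordinates to image
/// coordinates, assuming the image is shown aspect-fill (uniform scale,
/// centered, overflow clipped) and both spaces use a bottom-left origin.
func imageRect(forSelection selection: CGRect,
               viewSize: CGSize,
               imageSize: CGSize) -> CGRect {
    // Aspect fill uses the larger of the two ratios so the image covers the view.
    let scale = max(viewSize.width / imageSize.width,
                    viewSize.height / imageSize.height)

    // Size of the image as displayed, in view points. One side overflows the view.
    let displayedWidth = imageSize.width * scale
    let displayedHeight = imageSize.height * scale

    // Origin of the displayed image relative to the view origin (zero or negative).
    let offsetX = (viewSize.width - displayedWidth) / 2
    let offsetY = (viewSize.height - displayedHeight) / 2

    // Normalize the selection so dragging up/left still gives a positive size.
    let rect = selection.standardized

    // Undo the centering offset, then undo the scale.
    return CGRect(x: (rect.minX - offsetX) / scale,
                  y: (rect.minY - offsetY) / scale,
                  width: rect.width / scale,
                  height: rect.height / scale)
}

// Usage: a 400x200 image filling a 200x200 view is shown at scale 1 with
// 100 points clipped on each side.
let mapped = imageRect(forSelection: CGRect(x: 50, y: 50, width: 100, height: 100),
                       viewSize: CGSize(width: 200, height: 200),
                       imageSize: CGSize(width: 400, height: 200))
// mapped is x: 150, y: 50, width: 100, height: 100
```

The sketch picks the scale with `max(...)` over both ratios instead of branching on whether the image is wider than it is tall, and it takes the clipped overflow into account through the centering offset. Whether that accounts for the whole discrepancy in the output is an open question.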