Using an iPhone camera with a TrueDepth sensor, I am able to capture accurate depth data in images of my face. I am capturing depth from the front, from the left and right sides (about 30 degrees of rotation), and with the head tilted up a bit to capture under the chin, so 4 depth images in total. We are only capturing depth here, so there is no color information. We crop out unimportant data using an elliptical frame.

We are also using ARKit to give us the transform of the face anchor, which is the same as the transform of the face, ref: https://developer.apple.com/documentation/arkit/arfaceanchor. It isn't possible to capture a depth image and the face transform at the same time, since they come from different capture sessions. So we have to take the depth image, and then quickly switch sessions while the user holds their face still to get the face anchor transform. The world alignment is set to `.camera`, so the face anchor transform should be relative to the camera, not the world origin.

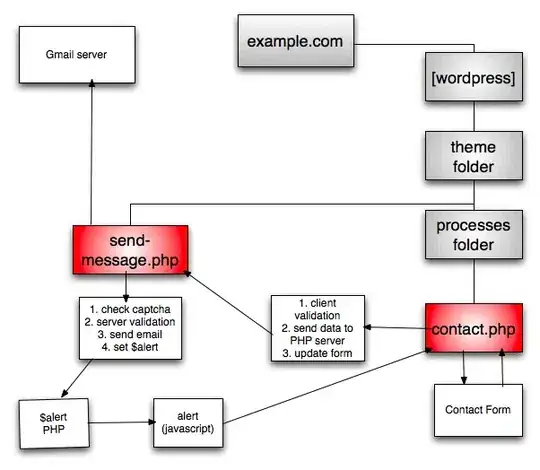

We end up with 4 point clouds that look like this (from left to right: chin up, left 30 degrees, front on, right 30 degrees).

We also end up with 4 transforms. We are trying to stitch the point clouds back together to make a smooth mesh of the face using Open3D in Python.

The process so far is as follows:

- read point clouds and transforms

- apply inverse transforms to point clouds to return to original position w.r.t camera
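
To make the second step concrete, here is a minimal NumPy-only sketch of what applying a 4x4 transform and its inverse does to a set of points. The `apply_transform` helper, the pose matrix and the sample points are all hypothetical, not taken from my data. It assumes a rigid transform with the translation in the last column, which is the layout Open3D's `PointCloud.transform` works with.

```python
import numpy as np

def apply_transform(points, T):
    """Apply a 4x4 homogeneous transform T to an (N, 3) array of points."""
    homogeneous = np.hstack([points, np.ones((len(points), 1))])  # shape (N, 4)
    return (T @ homogeneous.T).T[:, :3]

# Hypothetical face pose: rotated 30 degrees about the y axis and
# placed 0.3 m in front of the camera (values are illustrative only).
angle = np.radians(30.0)
face_to_camera = np.array([
    [ np.cos(angle), 0.0, np.sin(angle),  0.0],
    [ 0.0,           1.0, 0.0,            0.0],
    [-np.sin(angle), 0.0, np.cos(angle), -0.3],
    [ 0.0,           0.0, 0.0,            1.0],
])

# A few made-up points expressed in the face's own coordinate system.
face_points = np.array([
    [0.00,  0.00, 0.00],
    [0.03,  0.00, 0.02],
    [0.00, -0.05, 0.01],
])

# What the camera would observe for this pose.
camera_points = apply_transform(face_points, face_to_camera)

# Applying the inverse maps the observation back into face coordinates.
recovered = apply_transform(camera_points, np.linalg.inv(face_to_camera))
```

If each capture is mapped back with the inverse of its own pose like this, the four clouds should land in the same face-centred frame, provided the depth points and the anchor transform are expressed in the same camera coordinate system and units.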

I was expecting these point clouds to end up at roughly the same position, but instead the faces are still offset from one another.

Am I using the transforms wrong?

The Python code, example point clouds and transforms are here: https://github.com/JoshPJackson/FaceMesh but the important bit is below:

    import numpy as np

    # readPointCloud and readTransform are helper functions (not shown here)
    data_dir = './temp4/'

    # Load the four depth captures as point clouds
    frontPcd = readPointCloud('Front.csv', data_dir)
    leftPcd = readPointCloud('Left.csv', data_dir)
    rightPcd = readPointCloud('Right.csv', data_dir)
    chinPcd = readPointCloud('Chin.csv', data_dir)

    # Load the matching 4x4 face anchor transforms
    frontTransform = readTransform('front_transform.csv', data_dir)
    leftTransform = readTransform('left_transform.csv', data_dir)
    rightTransform = readTransform('right_transform.csv', data_dir)
    chinTransform = readTransform('chin_transform.csv', data_dir)

    # Apply the inverse of each face anchor transform to its point cloud
    rightPcd.transform(np.linalg.inv(rightTransform))
    leftPcd.transform(np.linalg.inv(leftTransform))
    frontPcd.transform(np.linalg.inv(frontTransform))
    chinPcd.transform(np.linalg.inv(chinTransform))
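
One thing that may be worth checking before the inverse is applied is the layout of the exported matrices. ARKit's `simd_float4x4` is stored column by column, so if the transform was written to CSV one column per line, the loaded matrix would be the transpose of what NumPy and Open3D expect. The sketch below is only an assumption about how that could be detected. `normalize_layout` and the `exported` matrix are hypothetical, and I have not confirmed that my files are actually laid out this way.

```python
import numpy as np

def normalize_layout(T, atol=1e-6):
    """Return T with the translation in the last column.

    A rigid transform in the expected layout has [0, 0, 0, 1] as its last row.
    If that pattern shows up in the last column instead, the matrix is transposed.
    Note: with zero translation both layouts pass the first check, so a
    transposed pure rotation cannot be detected this way.
    """
    T = np.asarray(T, dtype=float)
    if T.shape != (4, 4):
        raise ValueError("expected a 4x4 matrix")
    bottom = [0.0, 0.0, 0.0, 1.0]
    if np.allclose(T[3], bottom, atol=atol):
        return T
    if np.allclose(T[:, 3], bottom, atol=atol):
        return T.T
    raise ValueError("matrix is not a rigid transform in either layout")

# Illustrative matrix with the translation sitting in the last row,
# as it would be if each column had been written out as a CSV line.
exported = np.array([
    [1.00, 0.00,  0.0, 0.0],
    [0.00, 1.00,  0.0, 0.0],
    [0.00, 0.00,  1.0, 0.0],
    [0.01, 0.02, -0.3, 1.0],
])

fixed = normalize_layout(exported)
translation = fixed[:3, 3]  # translation is now in the last column
```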

I am expecting all of the point clouds to merge together so that I can remove duplicate vertices and then make a mesh.
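
For reference, this is roughly what I mean by merging and removing duplicate vertices, written as a NumPy-only sketch. `merge_and_deduplicate`, the 1 mm `voxel_size` and the sample arrays are hypothetical choices for illustration. In Open3D, `voxel_down_sample` on the combined cloud would probably serve a similar purpose, although it averages the points in each voxel rather than keeping the first one.

```python
import numpy as np

def merge_and_deduplicate(clouds, voxel_size=0.001):
    """Stack several (N, 3) point arrays and keep one point per voxel.

    Points that fall into the same voxel_size cube are treated as duplicates;
    the first point seen in each cube is kept.
    """
    merged = np.vstack(clouds)
    # Integer voxel index of every point
    keys = np.floor(merged / voxel_size).astype(np.int64)
    # Index of the first point in each distinct voxel
    _, first_index = np.unique(keys, axis=0, return_index=True)
    return merged[np.sort(first_index)]

# Two tiny made-up clouds that overlap near the origin
cloud_a = np.array([[0.0, 0.0, 0.0], [0.01, 0.0, 0.0]])
cloud_b = np.array([[0.0002, 0.0001, 0.0], [0.0, 0.02, 0.0]])

unique_points = merge_and_deduplicate([cloud_a, cloud_b])
```

This only makes sense once the clouds are already aligned, which is the part that is not working yet.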