I have searched many websites and articles but haven't found a definitive answer. I am using EKS version 1.18. Some of my pods are in the "Evicted" state, and when I check the node I see this error:

> (combined from similar events): failed to garbage collect required amount of images. Wanted to free 6283487641 bytes, but freed 0 bytes
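
For context on the numbers in that message: the kubelet's image garbage collector starts when disk usage on the image filesystem reaches `--image-gc-high-threshold` (85% by default). It then tries to free enough space to bring usage down to `--image-gc-low-threshold` (80% by default). It only deletes images that no container is using, so "freed 0 bytes" suggests nothing on the node was eligible for removal. The Go sketch below approximates that threshold arithmetic. It is a simplification, not the kubelet's actual code. The function name `amountToFree` and the 20 GiB / 1 GiB disk figures are hypothetical.

```go
package main

import "fmt"

// amountToFree approximates the kubelet image GC threshold logic (simplified):
// once usage reaches the high threshold, it aims to free enough bytes
// to bring usage down to the low threshold.
func amountToFree(capacity, available int64, highPct, lowPct int) int64 {
	usagePct := 100 - int(available*100/capacity)
	if usagePct < highPct {
		return 0 // below the high threshold: image GC is not triggered
	}
	return capacity*int64(100-lowPct)/100 - available
}

func main() {
	// Hypothetical 20 GiB image filesystem with only 1 GiB still available.
	const gib = int64(1 << 30)
	capacity := 20 * gib
	available := 1 * gib
	// Uses the default thresholds: high = 85%, low = 80%.
	fmt.Println(amountToFree(capacity, available, 85, 80))
}
```

With these inputs, usage is 95%, so the sketch prints 3221225472 (3 GiB): 80% of 20 GiB is 16 GiB, and usage must drop from 19 GiB to 16 GiB.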

Is there any way to find out why it's failing, or how to fix this issue? Any suggestions are welcome.

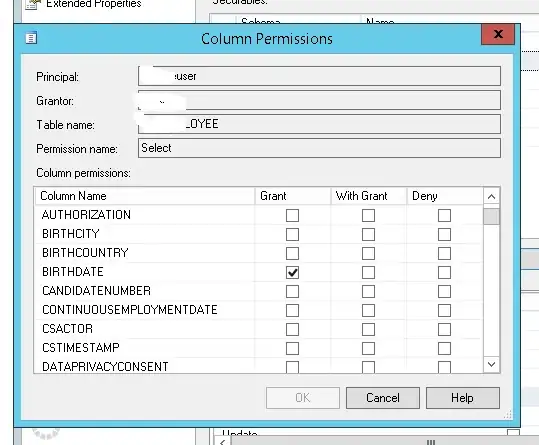

I can see that the "overlays" filesystem on the disk fills up within a few hours, and I am not sure why. The screenshot below shows my memory utilization.