I am working on a project with the goal of extracting structured data from a series of tables captured in images.

I have achieved some success adapting the process outlined in this extremely helpful Medium post.

As best I understand, this program works by building a contour mask, of sorts, from the table's horizontal and vertical ruling lines, which outlines the borders of the table and its cells. Here is the code that performs that step:

```python
import cv2
import numpy as np

# Load image as a numpy array; img is assumed to be an 8-bit, single-channel
# (grayscale) image, which Otsu thresholding requires
img = np.array(img)

# Threshold to a binary image (with THRESH_OTSU the 128 is ignored and
# the threshold is computed automatically)
thresh, img_bin = cv2.threshold(img, 128, 255, cv2.THRESH_BINARY | cv2.THRESH_OTSU)

# Invert so that ink (text and lines) is white (255) on a black background
img_bin = 255 - img_bin

# Kernel length: 1/100th of the image width
kernel_len = img.shape[1] // 100

# Vertical kernel to detect all vertical lines in the image
ver_kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (1, kernel_len))

# Horizontal kernel to detect all horizontal lines in the image
hor_kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (kernel_len, 1))

# A 2x2 kernel
kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (2, 2))

# Erode, then dilate, with the vertical kernel: keeps only long vertical strokes
image_1 = cv2.erode(img_bin, ver_kernel, iterations=3)
vertical_lines = cv2.dilate(image_1, ver_kernel, iterations=3)

# Same with the horizontal kernel: keeps only long horizontal strokes
image_2 = cv2.erode(img_bin, hor_kernel, iterations=3)
horizontal_lines = cv2.dilate(image_2, hor_kernel, iterations=3)

# Combine horizontal and vertical lines in a third image, both with equal weight
img_vh = cv2.addWeighted(vertical_lines, 0.5, horizontal_lines, 0.5, 0.0)

# Invert, erode (which thickens the now-dark lines) and threshold the result
img_vh = cv2.erode(~img_vh, kernel, iterations=2)
thresh, img_vh = cv2.threshold(img_vh, 128, 255, cv2.THRESH_BINARY | cv2.THRESH_OTSU)
```

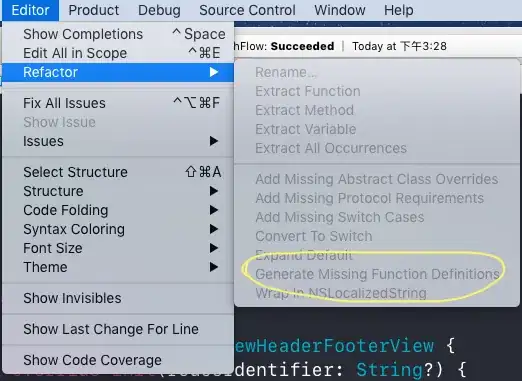

This process produces numpy array that can be interpreted as an image like this:

From there, the program can identify the table cells outlined on four sides by the contour mask.

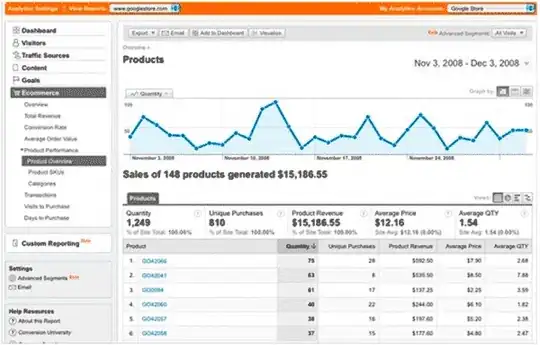

Unfortunately, many of the tables I need to process, including the one above, lack complete borders. The left-most column above has no left border (although it still contains data). Other tables have no internal borders at all and rely on white space to lay out the data for the human eye.

As best I can tell, my path forward here is to add the missing contour lines myself using some kind of logic based on visual elements on the page. In the first example, I could attempt to add a left-side vertical line to the contour mask based on the position of the other contours. In the second example, I could try to add table borders based on consistencies in the position of the text.

That said, this strategy would require a significant amount of custom logic and may not be flexible enough to handle the variety of table formats I am likely to encounter.

Am I approaching this challenge with the right strategy? Is there a deployable software solution that I am not seeing? Ideally, I'd like this to be as automated as possible.

Any help would be greatly appreciated!