The docs are accurate; they just don't tell you what happens if you actually pass an uncondensed distance matrix.

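`linkage` emits a `ClusterWarning` but still runs, because it first converts its input into a NumPy array. Your 2-D DataFrame becomes a 2-D array, which `linkage` interprets as a collection of observation vectors, one per row. From the array's square shape, zero diagonal and symmetry it does recognize that this probably isn't the input you intended, which is what triggers the warning. It then computes pairwise distances between those rows (Euclidean by default) and clusters them.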
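A quick way to see this behaviour is sketched below on a small random matrix (the data and the names `D_square` and `Z_rows` are illustrative, not from the question). Passing the square matrix triggers the warning, and the resulting linkage is identical to explicitly computing Euclidean distances between its rows with `pdist` and clustering those.

```python
import warnings

import numpy as np
from scipy.cluster.hierarchy import ClusterWarning, linkage
from scipy.spatial.distance import pdist, squareform

rng = np.random.default_rng(0)
X = rng.normal(size=(6, 2))       # 6 illustrative observations in 2-D
D_square = squareform(pdist(X))   # uncondensed 6 x 6 distance matrix

# Pass the square matrix and record any warnings linkage emits
with warnings.catch_warnings(record=True) as caught:
    warnings.simplefilter("always")
    Z_square = linkage(D_square, 'ward')
warned = any(issubclass(w.category, ClusterWarning) for w in caught)

# Explicit equivalent: Euclidean distances between the ROWS of D_square
Z_rows = linkage(pdist(D_square), 'ward')

print(warned)                         # True
print(np.allclose(Z_square, Z_rows))  # True
```

To cluster the distances themselves, pass the condensed form instead, e.g. `linkage(squareform(D_square), 'ward')`.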

Depending on how complex your input data is (e.g. cluster separation, number of clusters, distribution of points across clusters), the clustering may still appear to produce a sensible dendrogram, as you noted. Conceptually this makes sense: the result is a clustering of n similarity vectors, each of length n, and in simple cases those vectors can be well separated.
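A minimal toy illustration of why a simple case can still look fine (the four 1-D points are made up for the example): with two tight, well-separated pairs, clustering the rows of the uncondensed matrix recovers the same two groups as clustering the condensed distances, but the merge heights differ because they are distances between rows rather than the original distances.

```python
import warnings

import numpy as np
from scipy.cluster.hierarchy import ClusterWarning, fcluster, linkage
from scipy.spatial.distance import pdist, squareform

# Four illustrative 1-D points forming two tight pairs: {0, 1} and {10, 11}
points = np.array([[0.0], [1.0], [10.0], [11.0]])
condensed = pdist(points)       # distances between the points themselves
square = squareform(condensed)  # the same distances as a 4 x 4 matrix

Z_ok = linkage(condensed, 'ward')  # intended usage
with warnings.catch_warnings():
    # Silence the "looks like an uncondensed distance matrix" warning for the demo
    warnings.simplefilter("ignore", ClusterWarning)
    Z_misused = linkage(square, 'ward')  # rows treated as 4-D observation vectors

labels_ok = fcluster(Z_ok, t=2, criterion='maxclust')
labels_misused = fcluster(Z_misused, t=2, criterion='maxclust')

print(labels_ok, labels_misused)    # same grouping: points 0, 1 vs. points 2, 3
print(Z_ok[0, 2], Z_misused[0, 2])  # 1.0 2.0: the first merge heights differ
```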

For example, here is some synthetic data with 150 observations and 2 clusters:

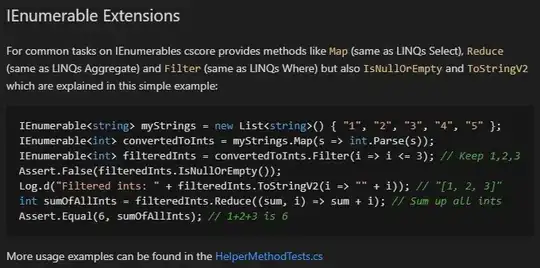

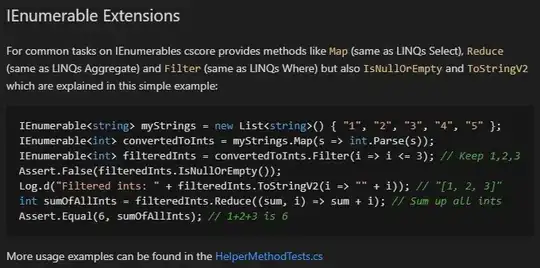

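```python
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
from scipy.cluster.hierarchy import dendrogram, linkage
from scipy.spatial.distance import cosine, pdist, squareform

np.random.seed(42)  # for repeatability

# Two Gaussian blobs: 100 points around (10, 0) and 50 points around (0, 20)
a = np.random.multivariate_normal([10, 0], [[3, 1], [1, 4]], size=[100,])
b = np.random.multivariate_normal([0, 20], [[3, 1], [1, 4]], size=[50,])

obs_df = pd.DataFrame(np.concatenate((a, b)), columns=['x', 'y'])
obs_df.plot.scatter(x='x', y='y')

# Ward linkage on the raw 150 x 2 observation matrix
Z = linkage(obs_df, 'ward')

fig = plt.figure(figsize=(8, 4))
dn = dendrogram(Z)
```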
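To check the dendrogram's two main branches numerically rather than by eye, one option is to cut the tree into two flat clusters with `scipy.cluster.hierarchy.fcluster` and count the members. This sketch regenerates the same synthetic data without plotting; `raw_labels` and `sizes` are illustrative names.

```python
import numpy as np
from scipy.cluster.hierarchy import fcluster, linkage

np.random.seed(42)  # same seed and draws as the example above
a = np.random.multivariate_normal([10, 0], [[3, 1], [1, 4]], size=[100,])
b = np.random.multivariate_normal([0, 20], [[3, 1], [1, 4]], size=[50,])
obs = np.concatenate((a, b))

Z = linkage(obs, 'ward')
raw_labels = fcluster(Z, t=2, criterion='maxclust')  # cut into 2 flat clusters

# Cluster sizes, to compare against the 100 / 50 split used to generate the data
sizes = np.bincount(raw_labels)[1:]  # fcluster labels start at 1
print(sorted(sizes.tolist()))
```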

A similarity matrix is an n x n matrix, so it can still be clustered as if it were n vectors of length n. I can't plot 150-D vectors, but plotting the magnitude (L1 norm) of each row and then the dendrogram appears to confirm a similar clustering.

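```python
def similarity_func(u, v):
    # Cosine similarity = 1 - cosine distance
    return 1 - cosine(u, v)

# Condensed vector of pairwise similarities, expanded to a square matrix.
# Note: squareform fills the diagonal with 0, not with the self-similarity of 1.
dists = pdist(obs_df, similarity_func)
sim_df = pd.DataFrame(squareform(dists), columns=obs_df.index, index=obs_df.index)

sim_array = np.asarray(sim_df)

# L1 norm (sum of absolute similarities) of each row
sim_lst = [np.linalg.norm(vec, ord=1) for vec in sim_array]
pd.Series(sim_lst).plot.bar()

# Ward linkage treats each of the 150 rows as a 150-D observation vector
Z = linkage(sim_df, 'ward')

fig = plt.figure(figsize=(8, 4))
dn = dendrogram(Z)
```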
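As an aside, the same similarity matrix can be built without a Python-level callable by using SciPy's built-in `'cosine'` metric (cosine distance) and subtracting from 1. This sketch uses random stand-in data rather than the example above. Note that `1 - squareform(...)` puts the self-similarity of 1 on the diagonal instead of 0, which changes each row vector slightly compared with `squareform(pdist(obs_df, similarity_func))`.

```python
import numpy as np
from scipy.spatial.distance import cosine, pdist, squareform

rng = np.random.default_rng(1)
X = rng.normal(size=(20, 2))  # stand-in observations

# Approach from the answer: a callable evaluated once per pair (slow for large n)
sim_callable = squareform(pdist(X, lambda u, v: 1 - cosine(u, v)))

# Vectorized: built-in cosine distance, converted to similarity
sim_builtin = 1 - squareform(pdist(X, 'cosine'))

off_diag = ~np.eye(len(X), dtype=bool)
print(np.allclose(sim_callable[off_diag], sim_builtin[off_diag]))  # True
print(np.diag(sim_callable)[:3], np.diag(sim_builtin)[:3])          # zeros vs. ones
```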

What we're really clustering here is, for each point, a vector whose components are that point's similarities to all 150 points; in other words, a collection of each point's intra- and inter-cluster similarity measures. A point is highly similar to the members of its own cluster and much less similar to those of the other one, and since the two clusters also differ in size, a point in one cluster has a very different collection of intra- and inter-cluster similarities than a point in the other cluster. Hence we get two primary clusters whose sizes match the number of points in each original cluster (100 and 50), just as in the first step.
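The reasoning above can be checked directly, as a sketch that regenerates the same synthetic data: compare each row's average similarity to the two generating blobs, then cluster the rows and see whether each flat cluster coincides with one blob. The names `true_group`, `mean_sim_to_a` and `mean_sim_to_b` are illustrative.

```python
import numpy as np
from scipy.cluster.hierarchy import fcluster, linkage
from scipy.spatial.distance import pdist, squareform

np.random.seed(42)  # same synthetic data as above
a = np.random.multivariate_normal([10, 0], [[3, 1], [1, 4]], size=[100,])
b = np.random.multivariate_normal([0, 20], [[3, 1], [1, 4]], size=[50,])
obs = np.concatenate((a, b))
true_group = np.array([0] * 100 + [1] * 50)  # which blob each point came from

# Cosine similarity matrix with a zero diagonal, as produced by squareform above
sim = squareform(1 - pdist(obs, 'cosine'))

# Each row is one point's profile: its similarities to all 150 points
mean_sim_to_a = sim[:, true_group == 0].mean(axis=1)
mean_sim_to_b = sim[:, true_group == 1].mean(axis=1)

# Expected True: points are, on average, more similar to their own blob
print(np.all((mean_sim_to_a > mean_sim_to_b) == (true_group == 0)))

# Cluster the rows, cut into two flat clusters, and count (blob, cluster) pairs;
# 2 distinct pairs means each flat cluster coincides with one generating blob
sim_labels = fcluster(linkage(sim, 'ward'), t=2, criterion='maxclust')
print(len(set(zip(true_group.tolist(), sim_labels.tolist()))))
```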