Let A be an MxN matrix with entries a_ij in {0, ..., n-1}.

We can think of the entries of A as a rectangular grid that has been n-colored.

I am interested in partitioning each colored region into rectangles, in such a way that the number of rectangles is minimized. That is, I want to produce n sets of quadruples

L_k = {(i, j, w, h) | a_xy = k forall i <= x < i + w, j <= y < j + h}

satisfying the condition that every a_ij belongs to exactly one rectangle (that is, the rectangles are pairwise disjoint and together cover all of A). Furthermore, the total number of rectangles |L_0| + ... + |L_(n-1)| is minimized.
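To make the constraints concrete, here is a minimal sketch of a checker for a candidate answer. It assumes A is a list of lists indexed as A[x][y] (first index x, second index y, matching the a_xy in the definition above) and that the answer is a dict mapping each color k to its set L_k. The names `is_valid_partition` and `total_rectangles` are made up for illustration. It only verifies a partition; it does not find a minimal one.

```python
from typing import Dict, List, Set, Tuple

Rect = Tuple[int, int, int, int]  # (i, j, w, h)


def is_valid_partition(A: List[List[int]], L: Dict[int, Set[Rect]]) -> bool:
    """Return True if the rectangles in L cover every cell of A exactly once
    and every rectangle in L[k] contains only cells of color k."""
    M = len(A)
    N = len(A[0]) if M else 0
    covered = [[False] * N for _ in range(M)]
    for k, rects in L.items():
        for (i, j, w, h) in rects:
            # Reject empty or out-of-bounds rectangles.
            if w <= 0 or h <= 0 or i < 0 or j < 0 or i + w > M or j + h > N:
                return False
            for x in range(i, i + w):
                for y in range(j, j + h):
                    # Wrong color, or cell already claimed by another rectangle.
                    if A[x][y] != k or covered[x][y]:
                        return False
                    covered[x][y] = True
    # Every cell must belong to some rectangle.
    return all(all(row) for row in covered)


def total_rectangles(L: Dict[int, Set[Rect]]) -> int:
    """The quantity to minimize: the number of rectangles over all colors."""
    return sum(len(rects) for rects in L.values())
```

For example, with `A = [[0, 0, 1], [0, 0, 1]]`, the candidate `{0: {(0, 0, 2, 2)}, 1: {(0, 2, 2, 1)}}` passes the check and uses two rectangles.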

Obviously, minimizing each |L_k| can be done independently, but there is also a requirement that this happen extremely fast: assume this is a real-time application. Since the sets are disjoint, it may be that sharing information between the L_k speeds things up more than processing every color in parallel. n can be small (say, < 100), while M and N can be large.

I assume there is a dynamic programming approach to this, or maybe there is a way to rephrase it as a graph problem, but it is not immediately obvious to me how to approach this.
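For reference, the obvious fast baseline is a single greedy pass, sketched below. To be clear, this is not the dynamic programming or graph formulation I am asking about, and it gives no guarantee of minimality; it only produces some valid partition in one scan. It assumes A is a list of lists indexed as A[x][y], and `greedy_rectangles` is a name made up for this sketch.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

Rect = Tuple[int, int, int, int]  # (i, j, w, h)


def greedy_rectangles(A: List[List[int]]) -> Dict[int, Set[Rect]]:
    """Greedy heuristic: at each uncovered cell, grow a rectangle along y,
    then along x. The result is a valid partition but may not be minimal."""
    M = len(A)
    N = len(A[0]) if M else 0
    used = [[False] * N for _ in range(M)]
    L: Dict[int, Set[Rect]] = defaultdict(set)
    for x in range(M):
        for y in range(N):
            if used[x][y]:
                continue
            k = A[x][y]
            # Extend along y while the color matches and the cell is free.
            h = 1
            while y + h < N and not used[x][y + h] and A[x][y + h] == k:
                h += 1
            # Extend along x while the whole strip of length h matches.
            w = 1
            while x + w < M and all(
                A[x + w][yy] == k and not used[x + w][yy]
                for yy in range(y, y + h)
            ):
                w += 1
            # Mark the cells of the new rectangle as covered.
            for xx in range(x, x + w):
                for yy in range(y, y + h):
                    used[xx][yy] = True
            L[k].add((x, y, w, h))
    return dict(L)
```

All colors are handled in the same scan, so the work is shared between the L_k rather than repeated once per color.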

EDIT:

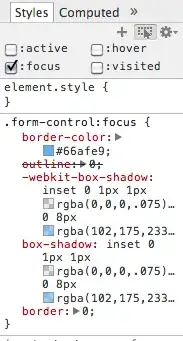

There seems to be some confusion about what I mean. Here is a picture to help illustrate.

Imagine this is a 10x10 matrix with red = 0, green = 1, and blue = 2. Draw the black boxes as shown, minimizing the number of boxes. The output here would be

L_0 = {(0,8,2,2),(1,7,2,1),(2,8,1,1),(4,5,4,2),(6,7,2,2)}

L_1 = {(0,0,4,4),(4,0,6,2),(6,2,2,3),(8,8,2,2)}

L_2 = {(0,4,4,3),(0,7,1,1),(2,9,6,1),(3,7,3,2),(4,2,2,4),(8,2,2,6)}