I am new to deep learning and am working with CT-scan medical images. I want to use a UNet architecture to segment them. I have implemented the UNet successfully; however, my predictions are completely black. I suspect this is because a large number of the images have a completely black ground truth, and that this imbalance might be causing the problem.
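If most pixels (and many whole slices) are background, a network trained with a plain per-pixel loss can reach a low loss simply by predicting background everywhere, which would look like an all-black output. A minimal NumPy sketch for checking how imbalanced the masks actually are, assuming the masks are already loaded as an array of shape (N, H, W) with nonzero values marking the object; `mask_stats` is a hypothetical helper name, not part of any library.

```python
import numpy as np

def mask_stats(masks: np.ndarray) -> dict:
    """Summarise class balance for a stack of binary masks of shape (N, H, W).

    Assumption: any nonzero pixel is foreground (the object of interest).
    """
    fg = np.asarray(masks) > 0  # binarise: True = foreground
    n_slices = fg.shape[0]
    # A slice is "empty" if none of its pixels are foreground.
    empty = ~fg.reshape(n_slices, -1).any(axis=1)
    return {
        "n_slices": n_slices,
        "empty_fraction": float(empty.mean()),      # share of all-black masks
        "foreground_fraction": float(fg.mean()),    # share of object pixels overall
    }

# Toy example: 4 slices of 4x4; only slice 1 has (2) foreground pixels.
toy = np.zeros((4, 4, 4), dtype=np.uint8)
toy[1, 0, 0] = 1
toy[1, 2, 3] = 1
print(mask_stats(toy))
# {'n_slices': 4, 'empty_fraction': 0.75, 'foreground_fraction': 0.03125}
```

A very small `foreground_fraction` would support the suspicion that the imbalance, rather than the UNet itself, is behind the black predictions.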

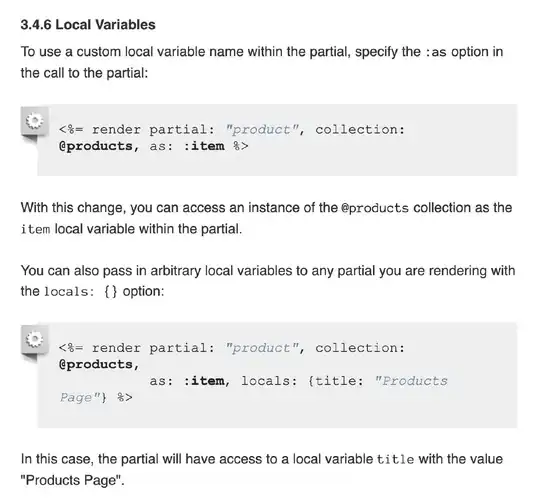

If the entire mask is black, the image contains no object of interest. An example image is below:
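Because a mask is empty exactly when it has no foreground pixel, whether each 2D slice of a volume contains the object can be read directly from the ground truth. A small sketch, assuming the ground-truth volume has been loaded into a NumPy array (from whatever file format the dataset uses) with slices along axis 0 by default; `slices_with_object` is a hypothetical helper name.

```python
import numpy as np

def slices_with_object(volume_mask: np.ndarray, axis: int = 0) -> np.ndarray:
    """Return one boolean per slice along `axis`: True if that slice's
    mask contains at least one foreground (nonzero) pixel."""
    fg = np.asarray(volume_mask) > 0
    # Move the slice axis to the front, then test all remaining axes at once.
    fg = np.moveaxis(fg, axis, 0)
    return fg.reshape(fg.shape[0], -1).any(axis=1)

# Toy volume: 5 slices of 3x3, object present only in slices 2 and 3.
vol = np.zeros((5, 3, 3), dtype=np.uint8)
vol[2, 1, 1] = 1
vol[3, 0:2, 0:2] = 1
print(slices_with_object(vol).tolist())  # [False, False, True, True, False]
```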

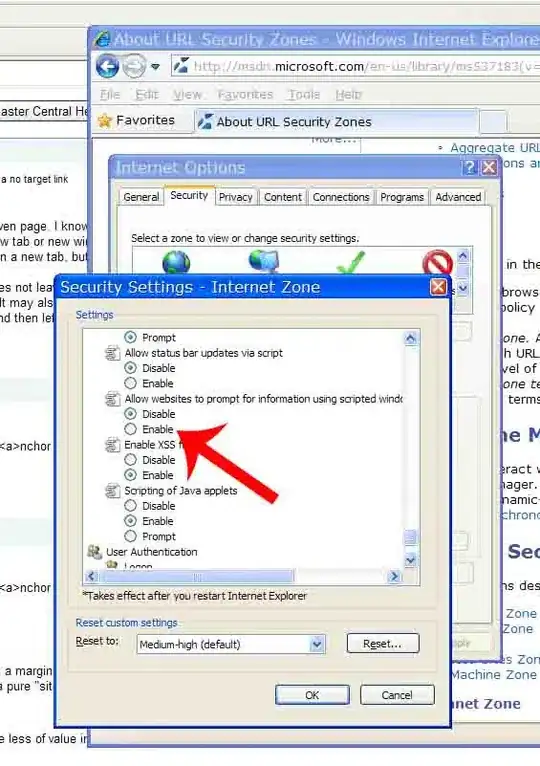

Below is the corresponding ground truth.

I am not sure how to deal with this situation. Should I remove all (image, ground truth) pairs whose ground truth is completely black? CT images are volumetric, so when my model predicts segmentations on a new test set, it should also correctly handle slices that contain no object of interest. I would appreciate it if someone could guide me on this.
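Removing every empty pair would mean the model never sees an empty slice during training, even though such slices will appear at test time. One compromise sometimes used is to keep all slices that contain the object plus only a random subset of the empty ones. A minimal sketch, assuming images and masks are aligned NumPy arrays of shape (N, H, W); `subsample_empty_slices` and `keep_ratio` are hypothetical names, and a suitable ratio would have to be found by experiment. This is not guaranteed to fix the black predictions on its own.

```python
import numpy as np

def subsample_empty_slices(images, masks, keep_ratio=0.2, seed=0):
    """Keep every slice whose mask contains the object, plus a random
    fraction `keep_ratio` of the empty slices (reduced, not removed)."""
    images = np.asarray(images)
    masks = np.asarray(masks)
    has_obj = (masks > 0).reshape(masks.shape[0], -1).any(axis=1)
    empty_idx = np.flatnonzero(~has_obj)
    n_keep = int(round(keep_ratio * len(empty_idx)))
    rng = np.random.default_rng(seed)
    if n_keep > 0:
        # Sample empty slices without replacement.
        kept_empty = rng.choice(empty_idx, size=n_keep, replace=False)
    else:
        kept_empty = np.array([], dtype=np.intp)
    # Sort so the remaining slices keep their original order.
    keep = np.sort(np.concatenate([np.flatnonzero(has_obj), kept_empty]))
    return images[keep], masks[keep]

# Toy data: 10 slices, objects only in slices 3 and 7 (8 empty slices).
imgs = np.arange(10 * 4 * 4, dtype=float).reshape(10, 4, 4)
msk = np.zeros((10, 4, 4), dtype=np.uint8)
msk[3, 0, 0] = 1
msk[7, 1, 1] = 1
out_i, out_m = subsample_empty_slices(imgs, msk, keep_ratio=0.25)
print(out_i.shape)  # (4, 4, 4): 2 object slices + 2 of the 8 empty ones
```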

Dataset: https://www.doc.ic.ac.uk/~rkarim/la_lv_framework/wall/index.html