Say I have many PDF files similar to the one here:

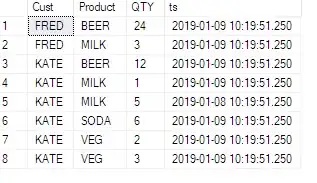

I would like to extract the table and save it as an Excel file.

I can already extract the table and save it as an Excel file manually with the Excalibur package.

After installing Excalibur with pip3, I initialize the metadata database using:

    $ excalibur initdb

Then I start the web server with:

    $ excalibur webserver

Finally, I go to http://localhost:5000 and extract the tabular data from the PDFs.
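Excalibur is a web interface built on top of Camelot, so the manual step for a single file can probably be reproduced in a few lines of Python. This is a minimal sketch, assuming `camelot-py` and `openpyxl` are installed. The names `pdf_to_excel` and `default_xlsx_path` are hypothetical, and the default `"lattice"` flavor assumes the table has ruling lines.

```python
from pathlib import Path


def default_xlsx_path(pdf_path):
    """Hypothetical helper: reports/report.pdf -> reports/report.xlsx."""
    return Path(pdf_path).with_suffix(".xlsx")


def pdf_to_excel(pdf_path, xlsx_path=None, pages="1", flavor="lattice"):
    """Detect tables on the given pages with Camelot and write them to one workbook.

    Returns the number of tables Camelot found.
    """
    import camelot  # imported lazily; requires `pip install camelot-py`

    xlsx_path = xlsx_path or default_xlsx_path(pdf_path)
    # "lattice" relies on ruling lines; "stream" relies on whitespace between columns.
    tables = camelot.read_pdf(str(pdf_path), pages=pages, flavor=flavor)
    if len(tables) > 0:
        # TableList.export with f="excel" writes each detected table to its own sheet.
        tables.export(str(xlsx_path), f="excel")
    return len(tables)
```

For example, `pdf_to_excel("report.pdf")` would write `report.xlsx` next to the PDF. If the wrong region is detected, `camelot.plot(tables[0], kind="text")` draws the page's text elements with matplotlib, which is one way to read off coordinates for Camelot's `table_areas` option.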

I wonder whether this can be done automatically for multiple PDF files with a Python script, using packages such as excalibur-py, camelot, pdfminer, etc., since the size and position of the table are fixed for reports from the same city.
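Because the table's size and position are fixed for each city's reports, one plausible approach is to pass that bounding box to Camelot's `table_areas` option and loop over the files. This is a minimal sketch under several assumptions:

* All reports sit in one folder.
* The table is on page 1.
* `TABLE_AREA` is a hypothetical placeholder that must be replaced with the real coordinates. These are PDF points with the origin at the bottom-left of the page, given as the left-top corner followed by the right-bottom corner.

`extract_table`, `batch_extract` and `output_path` are illustrative names, not part of any library.

```python
from pathlib import Path

# HYPOTHETICAL bounding box "x1,y1,x2,y2": (x1, y1) = left-top, (x2, y2) = right-bottom,
# in PDF points with the origin at the bottom-left of the page. Replace with real values.
TABLE_AREA = "50,700,550,400"


def output_path(pdf_path: Path, out_dir: Path) -> Path:
    """Map reports/foo.pdf -> <out_dir>/foo.xlsx."""
    return out_dir / (pdf_path.stem + ".xlsx")


def extract_table(pdf_path, table_area=TABLE_AREA, pages="1", flavor="stream"):
    """Return the table inside the fixed area of one PDF as a pandas DataFrame."""
    import camelot  # imported lazily; requires `pip install camelot-py`

    tables = camelot.read_pdf(
        str(pdf_path), pages=pages, flavor=flavor, table_areas=[table_area]
    )
    if len(tables) == 0:
        raise ValueError(f"no table found in {pdf_path}")
    return tables[0].df


def batch_extract(pdf_dir, out_dir, table_area=TABLE_AREA):
    """Extract the same table area from every PDF in pdf_dir; one .xlsx per PDF."""
    pdf_dir, out_dir = Path(pdf_dir), Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for pdf_path in sorted(pdf_dir.glob("*.pdf")):
        df = extract_table(pdf_path, table_area)
        target = output_path(pdf_path, out_dir)
        # Camelot's DataFrame uses integer column labels, so skip writing them.
        df.to_excel(target, index=False, header=False)  # needs openpyxl
        written.append(target)
    return written
```

For example, `batch_extract("reports", "excel")` would write one `.xlsx` file per PDF. The `"stream"` flavor is chosen here because it does not need ruling lines. If the reports' tables have visible cell borders, `flavor="lattice"` may give cleaner results.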

You may download other report files from this link.

Many thanks in advance.