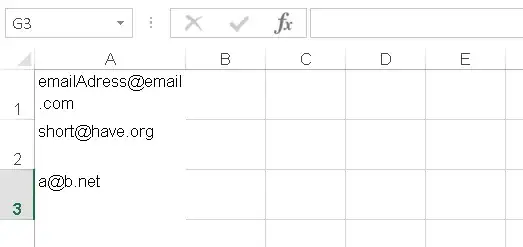

I want to split each field shown in the image above into separate columns without specifying column names, because I am processing many files one after another and each file has a different number of columns. The goal is to read each file into a dataframe that is stored under its filename. I am using a dictionary for this:

    import pandas as pd
    import zipfile
    import re

    Tables = {}

    with zipfile.ZipFile('*.zip') as z:
        for filename in z.namelist():
            df_name = filename.split(".")[1]
            if df_name == 'hp':
                with (z.open(filename)) as f:
                    content = f.read().decode('utf-8')
                    content = NewLineCorrection(content)
                    df = pd.DataFrame(content)
                    cols = list(df[0][0])
                    df[0] = list(map(lambda el:[el], df[0]))
                    #df[0] = df[0].split(',')
                    print(df.head())
                    #df.columns = df.iloc[0]
                    #df = df.drop(index=0).reset_index(drop=True)
                    #Tables[df_name] = df

    def NewLineCorrection(content):
        corrected_content = ( re.sub(r'"[^"]*"',
                                     lambda x:
                                     re.sub(r'[\r\n\x0B\x0C\u0085\u2028\u2029]',
                                            '',
                                            x.group()),
                                     content) )
        corrected_content = corrected_content.replace('"', '')
        corrected_content = corrected_content.replace('||@@##', ',')
        ContentList = list(corrected_content.splitlines())
        return ContentList

The `.split()` call (the commented-out `df[0] = df[0].split(',')` line) is not working for me, and I am not sure how to find out why.
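For reference, here is a minimal sketch of what may be going on, using a small made-up list in place of the real output of `NewLineCorrection` (the sample values and the `split_df` name are assumptions for illustration only). `df[0]` is a pandas Series, and a Series has no `.split` method of its own; the string methods sit under the `.str` accessor, where `expand=True` spreads the pieces over as many columns as the data needs.

```python
import pandas as pd

# Hypothetical sample standing in for the list that NewLineCorrection returns:
# one comma-joined string per line, with the header line first.
lines = ["id,name,city", "1,Ann,Oslo", "2,Bob,Rome"]

df = pd.DataFrame(lines)  # a single column labelled 0, holding strings

# Calling .split directly on the Series raises AttributeError,
# because string methods are only reachable through .str.
try:
    df[0].split(",")
except AttributeError as err:
    print("Series.split fails:", err)

# expand=True returns a DataFrame with one column per piece,
# so no column names have to be supplied up front.
split_df = df[0].str.split(",", expand=True)

# Promote the first row to the header, then drop it from the data.
split_df.columns = split_df.iloc[0]
split_df = split_df.drop(index=0).reset_index(drop=True)
split_df.columns.name = None  # clear the leftover label taken from row 0

Tables = {"hp": split_df}  # keyed by name, as in the question
print(Tables["hp"])
```

This assumes that no field contains a comma of its own once `||@@##` has been replaced; if some do, the pieces will not line up with the header.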