I am trying to parallelize a code on a many-core system. While investigating scaling bottlenecks, I stripped the code down to a (nearly) empty for-loop and found that the scaling is still only 75% at 28 cores. The example below cannot incur any false sharing, heap contention, or memory bandwidth issues. I see similar or worse effects on a number of machines running Linux or macOS, with physical core counts from 8 up to 56, all with the processors otherwise idle.
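For contrast, here is a minimal sketch (not part of the original benchmark) of how per-thread results could be kept in shared storage without reintroducing false sharing: each slot is aligned to its own cache line. The 64-byte line size and the names `PaddedDouble` and `run_padded` are assumptions for illustration; the question's benchmark sidesteps the issue entirely by keeping `x` in a thread-local variable.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <thread>
#include <vector>

// Assumption: 64-byte cache lines (typical on x86-64).
constexpr std::size_t kCacheLine = 64;

// One accumulator per thread, aligned (and therefore padded) to a full
// cache line, so two threads' slots never share a line.
struct alignas(kCacheLine) PaddedDouble {
    double value = 0.0;
};

// Hypothetical helper: runs the question's loop body on n threads, each
// thread updating only its own padded slot.
inline std::vector<PaddedDouble> run_padded(int n, long iters) {
    assert(n > 0 && iters >= 0);
    std::vector<PaddedDouble> slots(n);  // C++17 allocator honors alignas
    std::vector<std::thread> handles;
    for (int t = 0; t < n; ++t) {
        handles.emplace_back([&slots, t, iters] {
            double& x = slots[t].value;  // this thread's private cache line
            for (long i = 0; i < iters; ++i)
                x += std::exp(std::sin(x) + std::cos(x));
        });
    }
    for (auto& h : handles) h.join();
    return slots;
}
```

Without the `alignas`, consecutive `double` slots would share cache lines, and threads writing neighboring slots could slow each other down even though they never touch the same variable.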

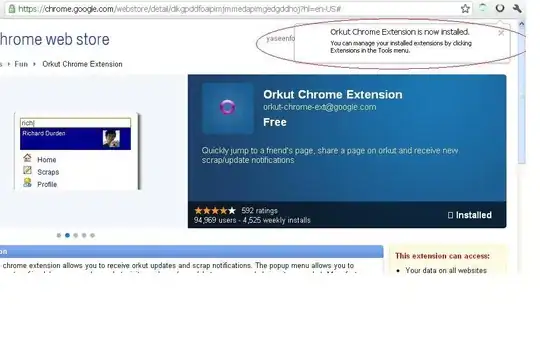

The plot shows a test on a dedicated HPC Linux node. It is a "weak scaling" test: the workload is proportional to the number of workers, and the vertical axis shows the rate of work done by all the threads combined, scaled to the ideal maximum for the hardware. Each thread runs 1 billion iterations of an (almost) empty for-loop. There is one trial for each thread count between 1 and 28. The run time is about 2 seconds per thread, so overhead from thread creation is not a factor.
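As a worked version of the normalization described above, here is a small sketch. It assumes that, because every thread does the same fixed work, perfect weak scaling means the wall time `t_n` for `n` threads equals the single-thread time `t_1`; the function names are illustrative, not from the original code. The second function mirrors `a * threads / time` in the plotting script, with `a = time[0] / cores`.

```cpp
#include <cassert>

// Weak-scaling efficiency: 1.0 means n threads finished their n units of
// work in the same wall time t1 that one thread needed for one unit.
inline double weak_scaling_efficiency(double t1, double tn) {
    assert(t1 > 0.0 && tn > 0.0);
    return t1 / tn;
}

// Combined rate of work relative to the ideal for the whole machine:
// (n / cores) * (t1 / tn), so perfect scaling on all cores gives 1.0.
inline double relative_rate(int n, double t1, double tn, int cores) {
    assert(n > 0 && cores > 0);
    return (static_cast<double>(n) / cores) * weak_scaling_efficiency(t1, tn);
}
```

Reading the plot this way, a value of 0.75 at 28 threads on 28 cores means `t_28 = t_1 / 0.75`, i.e. each thread took about 1.33 times as long as in the single-thread run (roughly 2.7 s instead of 2 s).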

Could this be the OS getting in our way? Or power consumption, maybe? Can anybody produce an example of a calculation (however trivial, weak or strong scaling) that exhibits 100% scaling on a high-core-count machine?
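One way to separate those two hypotheses is to record, for each worker, both wall-clock time and the CPU time the thread actually received. The sketch below assumes a POSIX system providing `clock_gettime` with `CLOCK_THREAD_CPUTIME_ID` (Linux, and recent macOS); `ThreadTiming`, `thread_cpu_seconds`, and `time_workers` are hypothetical names. If CPU time is much smaller than wall time, the thread spent time not running (scheduling); if the two stay close but both grow with the thread count, each core is simply executing the loop more slowly, which would be consistent with, though not proof of, a power or clock-frequency effect.

```cpp
#include <cassert>
#include <chrono>
#include <cmath>
#include <thread>
#include <time.h>  // POSIX clock_gettime, CLOCK_THREAD_CPUTIME_ID
#include <vector>

// Per-worker measurements, in seconds.
struct ThreadTiming {
    double wall_s = 0.0;
    double cpu_s = 0.0;
    double checksum = 0.0;  // keeps the compiler from discarding the loop
};

// CPU time consumed so far by the calling thread.
inline double thread_cpu_seconds() {
    timespec ts{};
    clock_gettime(CLOCK_THREAD_CPUTIME_ID, &ts);
    return static_cast<double>(ts.tv_sec) + ts.tv_nsec * 1e-9;
}

// Runs the question's loop body `iters` times on each of `n` threads and
// records wall and CPU time measured inside each thread.
inline std::vector<ThreadTiming> time_workers(int n, long iters) {
    assert(n > 0 && iters >= 0);
    std::vector<ThreadTiming> out(n);
    std::vector<std::thread> handles;
    for (int t = 0; t < n; ++t) {
        handles.emplace_back([&out, t, iters] {
            auto w0 = std::chrono::steady_clock::now();
            double c0 = thread_cpu_seconds();
            double x = 0.0;
            for (long i = 0; i < iters; ++i)
                x += std::exp(std::sin(x) + std::cos(x));
            out[t].cpu_s = thread_cpu_seconds() - c0;
            out[t].wall_s = std::chrono::duration<double>(
                std::chrono::steady_clock::now() - w0).count();
            out[t].checksum = x;  // each thread writes only its own element
        });
    }
    for (auto& h : handles) h.join();
    return out;
}
```

Calling `time_workers(n, 1000000000L)` for each `n` and printing the mean `cpu_s / wall_s` ratio alongside the mean `wall_s` would show which of the two cases applies.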

Below is the C++ code to reproduce:

```cpp
#include <chrono>
#include <cmath>
#include <cstdio>
#include <thread>
#include <vector>

int main()
{
    auto work = [] ()
    {
        auto x = 0.0;
        for (auto i = 0; i < 1000000000; ++i)
        {
            // NOTE: behavior is similar whether or not work is
            // performed here (although if no work is done, an
            // optimized build removes the loop entirely).
            x += std::exp(std::sin(x) + std::cos(x));
        }
        std::printf("-> %lf\n", x); // make sure the result is used
    };

    for (auto num_threads = 1; num_threads < 40; ++num_threads)
    {
        auto handles = std::vector<std::thread>();
        for (auto i = 0; i < num_threads; ++i)
        {
            handles.push_back(std::thread(work));
        }

        auto t0 = std::chrono::high_resolution_clock::now();
        for (auto &handle : handles)
        {
            handle.join();
        }
        auto t1 = std::chrono::high_resolution_clock::now();

        auto delta = std::chrono::duration<double, std::milli>(t1 - t0);
        std::printf("%d %0.2lf\n", num_threads, delta.count());
    }

    return 0;
}
```

To run the example, compile with optimizations, e.g. `g++ -O3 -std=c++17 -pthread weak_scaling.cpp`. Here is Python code to reproduce the plot (it assumes you pipe the program output to `perf.dat`).
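If you want to test a truly (nearly) empty loop in an optimized build, one common trick, which is an assumption here and not what the question's code does, is to store to a `volatile` local on every iteration. Accesses to `volatile` objects count as observable behavior, so the compiler cannot delete the loop, and the store goes to the thread's own stack, so threads still share nothing. `empty_work` is a hypothetical name.

```cpp
#include <cassert>

// A loop an optimizing compiler must keep: each iteration performs a
// volatile store, which cannot be optimized away. Returns the last value
// stored (iters - 1), or 0 if the loop body never runs.
inline long empty_work(long iters) {
    assert(iters >= 0);
    volatile long sink = 0;
    for (long i = 0; i < iters; ++i)
        sink = i;
    return sink;
}
```

Substituting `empty_work(1000000000L)` for the body of `work` keeps the per-thread iteration count of the original while still allowing `-O3`; note that it is not literally empty, since every iteration now includes one store.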

```python
import numpy as np
import matplotlib.pyplot as plt

# Skip the "-> <checksum>" lines printed by the workers; keep "<threads> <ms>".
threads, time = np.loadtxt("perf.dat", comments="->").T

# Normalize so that perfect weak scaling on all 28 physical cores gives 1.0.
a = time[0] / 28

plt.axvline(28, c='k', lw=4, alpha=0.2, label='Physical cores (28)')
plt.plot(threads, a * threads / time, 'o', mfc='none')
plt.plot(threads, a * threads / time[0], label='Ideal scaling')
plt.legend()
plt.ylim(0.0, 1.)
plt.xlabel('Number of threads')
plt.ylabel('Rate of work (relative to ideal)')
plt.grid(alpha=0.5)
plt.title('Trivial weak scaling on Intel Xeon E5-2680v4')
plt.show()
```

Update -- here is the same scaling on a 56-core node, and that node's architecture:

Update -- there were concerns in the comments that the build was unoptimized. The result is very similar when work is done in the loop, the result is not discarded, and -O3 is used.