Root Mean Square Error (RMSE) is the standard deviation of the residuals (prediction errors). Residuals are a measure of how far data points are from the regression line; RMSE is a measure of how spread out these residuals are. In other words, it tells you how concentrated the data is around the line of best fit. RMSE is commonly used in climatology, forecasting, and regression analysis to verify experimental results.

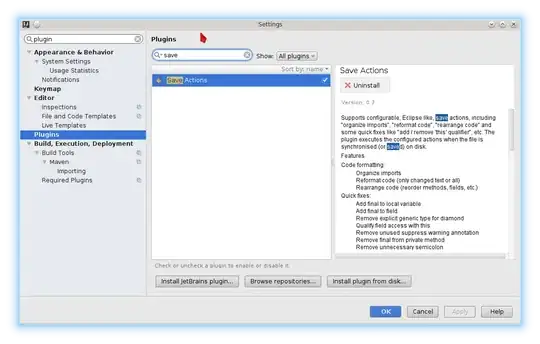

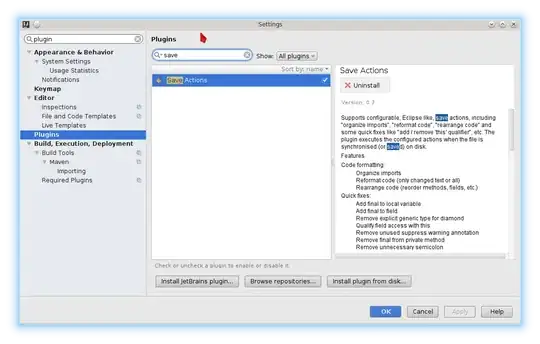

The formula is:

$$\mathrm{RMSE} = \sqrt{\overline{(f - o)^2}}$$

Where:

f = forecasts (expected values or unknown results),

o = observed values (known results).

The bar above the squared differences is the mean (similar to x̄). The same formula can be written with the following, slightly different, notation:

$$\mathrm{RMSE} = \left[ \frac{1}{N} \sum_{i=1}^{N} \left( z_{f_i} - z_{o_i} \right)^2 \right]^{1/2}$$

Where:

Σ = summation (“add up”)

$(z_{f_i} - z_{o_i})^2$ = differences, squared

N = sample size.
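As a rough illustration of the definitions above, here is a minimal Python sketch that squares the differences between forecasts and observed values, takes their mean, and then the square root. The function name `rmse` and the sample numbers are made up for this example.

```python
import math


def rmse(forecasts, observed):
    """Root mean square error between two equal-length sequences."""
    if len(forecasts) != len(observed):
        raise ValueError("forecasts and observed must have the same length")
    if len(forecasts) == 0:
        raise ValueError("at least one pair of values is required")
    # Differences, squared: (f - o)^2 for each pair
    squared_diffs = [(f - o) ** 2 for f, o in zip(forecasts, observed)]
    # Mean of the squared differences (sum divided by N), then the square root
    return math.sqrt(sum(squared_diffs) / len(squared_diffs))


# Made-up sample data, only for illustration
forecasts = [2.0, 4.0, 6.0, 8.0]
observed = [1.0, 5.0, 6.0, 10.0]
print(rmse(forecasts, observed))  # square root of 1.5, roughly 1.2247
```

The squared differences here are 1, 1, 0 and 4, so their mean is 1.5 and the RMSE is the square root of that.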

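You can use whichever form you want, as both express the same quantity. The "R" that you are referring to is the Pearson correlation coefficient, which measures the strength of the linear relationship; its square (R²) is commonly read as the share of the variance in the data that the fit explains.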
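To show that the Pearson coefficient and RMSE answer different questions, here is a small sketch. The helper names `pearson_r` and `rmse` and the numbers are hypothetical, chosen so that the forecasts follow the observed values perfectly in shape but are shifted by a constant.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of products of deviations from the means
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def rmse(forecasts, observed):
    """Root mean square error between two equal-length sequences."""
    squared_diffs = [(f - o) ** 2 for f, o in zip(forecasts, observed)]
    return math.sqrt(sum(squared_diffs) / len(squared_diffs))


# Made-up data: every forecast is exactly 10 above the observed value
observed = [1.0, 2.0, 3.0]
forecasts = [11.0, 12.0, 13.0]

r = pearson_r(forecasts, observed)
print(r)                          # about 1: the linear relationship is perfect
print(r ** 2)                     # about 1
print(rmse(forecasts, observed))  # 10.0: yet every forecast is off by 10
```

So a high R does not by itself mean a low RMSE; R describes how well the two series move together, while RMSE describes how far apart they are.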

Coming to question 2: what counts as a good RMSE always depends on the scale of your data, that is, the upper and lower bounds of the values you are predicting. On a given scale, a smaller RMSE is always better, since it means less error.
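One way to see why the scale matters is to compare the RMSE with the range of the observed values. The sketch below is only an illustration: the helper `rmse_over_range` is a hypothetical name, the data is invented, and the ratio is not an official threshold for "good" or "bad".

```python
import math


def rmse(forecasts, observed):
    """Root mean square error between two equal-length sequences."""
    squared_diffs = [(f - o) ** 2 for f, o in zip(forecasts, observed)]
    return math.sqrt(sum(squared_diffs) / len(squared_diffs))


def rmse_over_range(forecasts, observed):
    """RMSE divided by the spread (max - min) of the observed values."""
    spread = max(observed) - min(observed)
    if spread == 0:
        raise ValueError("observed values must not all be equal")
    return rmse(forecasts, observed) / spread


# Made-up data: both cases have an RMSE of 5
observed_wide = [100.0, 200.0, 300.0, 400.0]
forecasts_wide = [105.0, 195.0, 305.0, 395.0]

observed_narrow = [1.0, 2.0, 3.0, 4.0]
forecasts_narrow = [6.0, -3.0, 8.0, -1.0]

print(rmse(forecasts_wide, observed_wide))                  # 5.0
print(rmse(forecasts_narrow, observed_narrow))              # 5.0
print(rmse_over_range(forecasts_wide, observed_wide))       # small: 5 out of a range of 300
print(rmse_over_range(forecasts_narrow, observed_narrow))   # large: 5 out of a range of 3
```

The same RMSE of 5 is a small error when the values span 100 to 400, and a very large one when they span 1 to 4.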