The following results were produced on an Intel Haswell x86_64 machine. Assume we have an integer array, `int32_t A[32] = {0};`, and consider the following function, where `func(&A[i])` is called from two different threads:

```c
#include <stdint.h>

void func(int32_t *elem) {
    for (int i = 0; i < 10000; i++)
        *elem = *elem + 1;
}
```
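The three scenarios can be reproduced with a small harness. This is a minimal sketch, not the original poster's code: `func_volatile`, `worker`, and `run_scenario` are hypothetical names. It assumes POSIX threads and 64-byte cache lines (true on Haswell). `_Alignas(64)` places A[0] at the start of a line, so A[1] shares that line and A[16] begins the next one. `volatile` is used only to stop the compiler from folding the loop into a single `+= 10000`. It does not make the increment atomic, and scenario 3 remains a data race (undefined behavior in C11) that is reproduced here only to observe the effect.

```c
#include <pthread.h>
#include <stdint.h>

#define ITERS 10000

/* Assumption: 64-byte cache lines. _Alignas(64) puts A[0] at the start
   of a line, so A[1] shares it and A[16] begins the next line. */
static _Alignas(64) volatile int32_t A[32];

/* Same loop as func. volatile only keeps one load and one store per
   iteration so the compiler cannot fold the loop. It is NOT atomic. */
static void func_volatile(volatile int32_t *elem) {
    for (int i = 0; i < ITERS; i++)
        *elem = *elem + 1;  /* read, add, write back: not one indivisible step */
}

static void *worker(void *arg) {
    func_volatile((volatile int32_t *)arg);
    return NULL;
}

/* Hypothetical helper: Thread1 runs func on &A[i], Thread2 on &A[j];
   the final array contents are copied into out. */
static void run_scenario(int i, int j, int32_t out[32]) {
    for (int k = 0; k < 32; k++)
        A[k] = 0;
    pthread_t t1, t2;
    pthread_create(&t1, NULL, worker, (void *)&A[i]);
    pthread_create(&t2, NULL, worker, (void *)&A[j]);
    pthread_join(t1, NULL);
    pthread_join(t2, NULL);
    for (int k = 0; k < 32; k++)
        out[k] = A[k];
}
```

Calling `run_scenario(0, 16, r)`, `run_scenario(0, 1, r)`, and `run_scenario(0, 0, r)` from a `main` corresponds to scenarios 1, 2, and 3 (build with something like `cc -O2 -pthread`). Only the first two have a fixed outcome; in the third, A[0] typically ends below 20000.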

Assume Thread1 calls `func(&A[0])`. Consider the following three scenarios for Thread2:

1. Thread2 calls `func(&A[16])`: there are no issues, since A[0] and A[16] are 64 bytes apart and therefore reside on separate (64-byte) cache lines. The final results are correct: A[0] = 10000, A[16] = 10000.
2. Thread2 calls `func(&A[1])`: there is a performance hit due to false sharing, because A[0] and A[1] reside on the same cache line. However, the final results are still correct: A[0] = 10000, A[1] = 10000.
3. Thread2 calls `func(&A[0])`: we get wrong results due to a race condition, i.e. A[0] < 20000.
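For comparison, here is a sketch (not part of the original measurements) of scenario 3 with the increment made atomic using C11 `<stdatomic.h>`. `atomic_fetch_add_explicit` performs each load-add-store as one indivisible read-modify-write; on x86, compilers typically emit a `lock`-prefixed instruction for it. `func_atomic`, `atomic_worker`, `run_atomic_same_element`, and `counter` (standing in for A[0]) are hypothetical names, and POSIX threads are assumed.

```c
#include <pthread.h>
#include <stdatomic.h>
#include <stdint.h>

#define ITERS 10000

/* Stands in for A[0] in scenario 3 (both threads increment it). */
static _Atomic int32_t counter;

/* Hypothetical atomic counterpart of func: each increment is a single
   indivisible read-modify-write. Relaxed ordering is enough for a counter. */
static void func_atomic(_Atomic int32_t *elem) {
    for (int i = 0; i < ITERS; i++)
        atomic_fetch_add_explicit(elem, 1, memory_order_relaxed);
}

static void *atomic_worker(void *arg) {
    func_atomic((_Atomic int32_t *)arg);
    return NULL;
}

/* Runs scenario 3 with the atomic increment and returns the final value. */
static int32_t run_atomic_same_element(void) {
    atomic_store(&counter, 0);
    pthread_t t1, t2;
    pthread_create(&t1, NULL, atomic_worker, (void *)&counter);
    pthread_create(&t2, NULL, atomic_worker, (void *)&counter);
    pthread_join(t1, NULL);
    pthread_join(t2, NULL);
    return atomic_load(&counter);
}
```

Relaxed ordering suffices here because only the counter's final value matters, and `pthread_join` makes both threads' updates visible to the caller. Unlike the plain loop, this version should return 20000 on every run.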

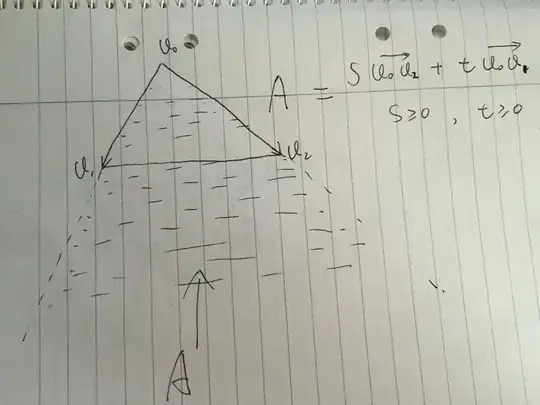

Question: since both threads access the same cache line in scenarios 2 and 3, why doesn't a cache-coherency protocol such as MESI protect the result in scenario 3, when it does ensure correct results in scenario 2?

Thanks