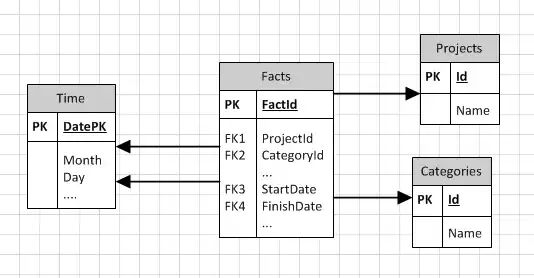

Suppose we have a list of arrays, where each array contains row indices and is associated with a specific user id. The algorithm only keeps the indices of users that appear more than once in the data, hence I filter on a length > 1 (`user_split_indices = list(filter(lambda x: len(x) > 1, user_split_indices))`).

I have calculated the permutations of each array in the list. Note that after generating the permutations, the last element must not be duplicated across the kept permutations (no two of them may end with the same index), hence I use `drop_duplicates(subset=u_data_len-1, keep="first")` on the `perm_` dataframe.
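To illustrate what this deduplication keeps, here is a minimal sketch on one made-up group of row indices, `[10, 11, 12]`. Since `itertools.permutations` yields orderings in lexicographic order of the input positions, `keep="first"` retains, for each possible last element, the permutation in which the remaining elements stay in their original order.

```python
import itertools

import pandas as pd

# Made-up group of row indices belonging to a single user.
u_data = [10, 11, 12]
u_data_len = len(u_data)

# All 3! = 6 orderings, one per row; the columns are labelled 0, 1, 2.
perm_ = pd.DataFrame(itertools.permutations(u_data))

# Keep only the first permutation seen for each distinct last element.
kept = perm_.drop_duplicates(subset=u_data_len - 1, keep="first")
print(kept.values.tolist())
# [[10, 11, 12], [10, 12, 11], [11, 12, 10]]
```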

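```python
import numpy as np


def _groupby_user(user_indices, order):
    # A stable sort ("mergesort") keeps each user's rows in their original order.
    sort_kind = "mergesort" if order else "quicksort"

    users, user_position, user_counts = np.unique(user_indices,
                                                  return_inverse=True,
                                                  return_counts=True)

    # Group row positions by user, then split the sorted positions per user.
    user_split_indices = np.split(np.argsort(user_position, kind=sort_kind),
                                  np.cumsum(user_counts)[:-1])

    return user_split_indices
```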
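A small sketch of what `_groupby_user` returns, using a self-contained copy of the helper and a made-up `user` column in which users `1` and `3` appear more than once. The result holds row positions grouped per user, and the length filter then drops the single-row user.

```python
import numpy as np


def _groupby_user(user_indices, order):
    # Copy of the helper above: stable sort keeps each user's rows in order.
    sort_kind = "mergesort" if order else "quicksort"
    users, user_position, user_counts = np.unique(
        user_indices, return_inverse=True, return_counts=True
    )
    # argsort groups row positions by user; cumsum gives the split points.
    return np.split(
        np.argsort(user_position, kind=sort_kind), np.cumsum(user_counts)[:-1]
    )


# Made-up user column: users 1 and 3 appear more than once.
user_indices = np.array([1, 2, 1, 3, 3, 1])
all_groups = _groupby_user(user_indices, True)
print([g.tolist() for g in all_groups])  # [[0, 2, 5], [1], [3, 4]]

# Same filter as in the question: drop users that appear only once.
groups = list(filter(lambda x: len(x) > 1, all_groups))
print([g.tolist() for g in groups])  # [[0, 2, 5], [3, 4]]
```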

```python
import itertools

import pandas as pd


def split_by_num_new(data, k):
    temp_indices = pd.DataFrame()

    user_indices = data.user.to_numpy()

    user_split_indices = _groupby_user(user_indices, True)

    # Keep only users that appear more than once.
    user_split_indices = list(filter(lambda x: len(x) > 1, user_split_indices))

    for u_data in user_split_indices:
        u_data_len = len(u_data)

        perm_ = (pd.DataFrame(itertools.permutations(u_data))
                 .drop_duplicates(subset=u_data_len-1, keep="first")
                 .set_index(u_data_len-1)
                 .stack()
                 .reset_index()
                 .rename(columns={'level_1': 'user_', u_data_len-1: 'ind', k-1: 'label_ind'}))

        temp_indices = pd.concat([temp_indices, perm_], axis=0)

    return temp_indices, user_split_indices
```

The function is called with the following code:

```python
data = data.reset_index()

temp_indices, user_ind = split_by_num_new(data, k=1)
```

The input data is shown below:

Note that the index must be reset so that the index of `data` matches the row positions in the output dataframe after grouping by the `user` column.
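The reason is that `_groupby_user` works on positions: `np.argsort` returns 0-based positions into the `user` array, not index labels. A minimal sketch with made-up data whose index is not `0..n-1` (as can happen after filtering rows):

```python
import numpy as np
import pandas as pd

# Made-up data whose index labels no longer match row positions.
data = pd.DataFrame({"user": [1, 2, 1]}, index=[7, 3, 9])

# np.argsort, as used inside _groupby_user, returns positions, not labels.
positions = np.argsort(data.user.to_numpy(), kind="mergesort")
print(positions.tolist())              # [0, 2, 1]
print(data.index[positions].tolist())  # [7, 9, 3], the labels those rows carry

# After reset_index() the labels equal the positions, so .loc lookups line up.
data = data.reset_index()
print(data.loc[positions, "user"].tolist())  # [1, 1, 2]
```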

An example of the output table `temp_indices`:
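As a hedged illustration of the table's layout, the sketch below runs the same chain on one made-up group with `k=1` (as in the call above). `ind` holds the last element of each kept permutation, `label_ind` holds each of the other elements, and `user_` comes from the `level_1` column created by `stack`, i.e. the column position of that other element within the permutation.

```python
import itertools

import pandas as pd

k = 1                  # as in split_by_num_new(data, k=1)
u_data = [10, 11, 12]  # made-up group of row indices for one user
u_data_len = len(u_data)

perm_ = (
    pd.DataFrame(itertools.permutations(u_data))
    .drop_duplicates(subset=u_data_len - 1, keep="first")
    .set_index(u_data_len - 1)  # last element becomes the index
    .stack()                    # one row per (last element, other column)
    .reset_index()              # columns: u_data_len - 1, 'level_1', 0
    .rename(columns={"level_1": "user_", u_data_len - 1: "ind", k - 1: "label_ind"})
)
print(perm_.columns.tolist())  # ['ind', 'user_', 'label_ind']
print(perm_)
```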

The part of the code that I am trying to speed up is the loop in `split_by_num_new(data, k)`, which becomes very slow once the data grows to over 2 million rows:

```python
for u_data in user_split_indices:
    u_data_len = len(u_data)

    perm_ = (pd.DataFrame(itertools.permutations(u_data))
             .drop_duplicates(subset=u_data_len-1, keep="first")
             .set_index(u_data_len-1)
             .stack()
             .reset_index()
             .rename(columns={'level_1': 'user_', u_data_len-1: 'ind', k-1: 'label_ind'}))

    temp_indices = pd.concat([temp_indices, perm_], axis=0)

return temp_indices, user_split_indices
```
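One likely cost driver is that `itertools.permutations` materializes all n! orderings of a user's indices before `drop_duplicates` keeps just n of them: a user with 12 rows produces 12! = 479,001,600 permutations. Because each kept permutation is simply the other indices in their original order followed by one index, the same rows can plausibly be generated directly in O(n^2). The sketch below is a hypothetical replacement (`pairs_without_permutations` and `pairs_with_permutations` are illustrative names, and it assumes `k=1`); it checks itself against the original chain on a small made-up group.

```python
import itertools

import numpy as np
import pandas as pd


def pairs_without_permutations(u_data):
    """Hypothetical O(n^2) stand-in for the permutations/drop_duplicates chain (k=1).

    For each last element u_data[j], the permutation kept by drop_duplicates lists
    the other elements in their original order, so the rows can be built directly.
    """
    u_data = np.asarray(u_data)
    n = len(u_data)
    # j runs over the last element (descending, matching the original row order),
    # i over every other element (ascending).
    j, i = np.meshgrid(np.arange(n)[::-1], np.arange(n), indexing="ij")
    mask = i != j                      # an element never pairs with itself
    j, i = j[mask], i[mask]
    user_ = np.where(i < j, i, i - 1)  # column of u_data[i] once u_data[j] is moved last
    return pd.DataFrame({"ind": u_data[j], "user_": user_, "label_ind": u_data[i]})


def pairs_with_permutations(u_data, k=1):
    """The chain from the question, kept for comparison."""
    n = len(u_data)
    return (
        pd.DataFrame(itertools.permutations(u_data))
        .drop_duplicates(subset=n - 1, keep="first")
        .set_index(n - 1)
        .stack()
        .reset_index()
        .rename(columns={"level_1": "user_", n - 1: "ind", k - 1: "label_ind"})
    )


u_data = [4, 9, 15, 20]  # made-up group of row indices
fast = pairs_without_permutations(u_data)
slow = pairs_with_permutations(u_data)
print(fast.values.tolist() == slow[fast.columns].values.tolist())  # True
```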

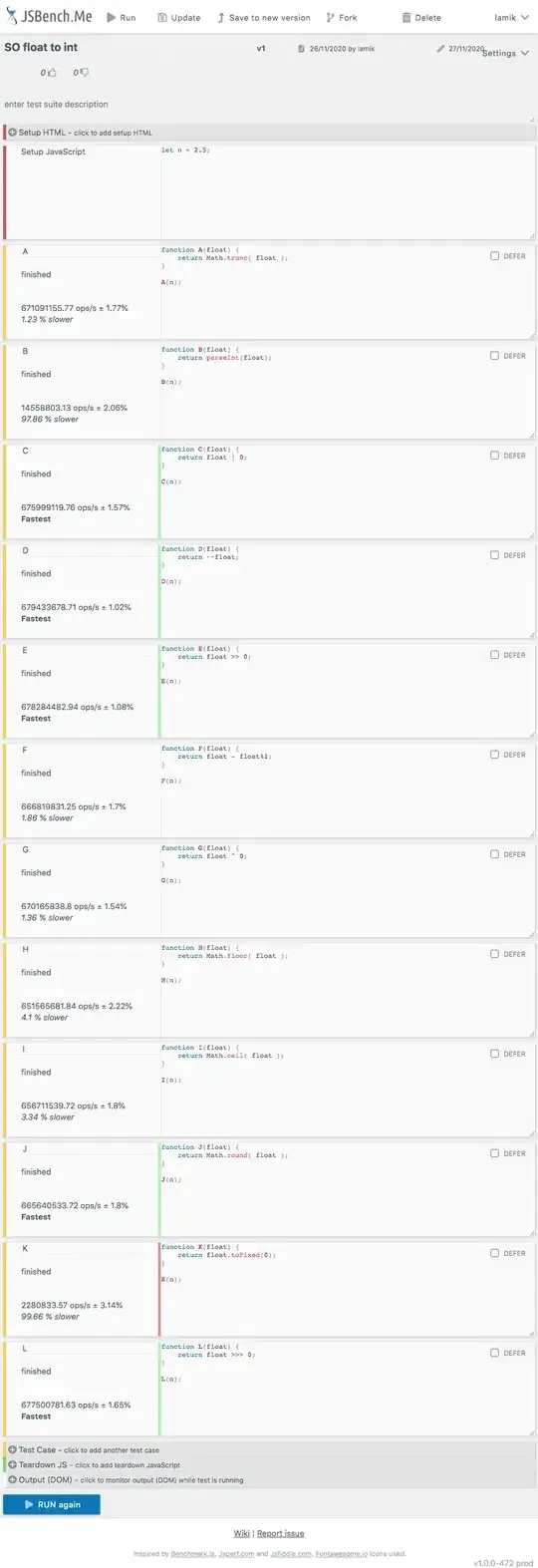

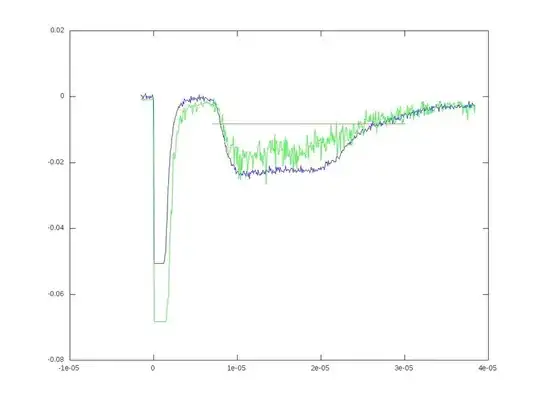

Below is the same function instrumented to print each intermediate output, together with the time breakdown:

```python
import itertools
import timeit

import pandas as pd


def split_by_num_new(data, k):
    temp_indices = pd.DataFrame()

    user_indices = data.user.to_numpy()

    user_split_indices = _groupby_user(user_indices, True)

    user_split_indices = list(filter(lambda x: len(x) > 1, user_split_indices))

    loop_start = timeit.default_timer()

    for u_data in user_split_indices:
        u_data_len = len(u_data)

        perm_ = pd.DataFrame(itertools.permutations(u_data))
        print(perm_)

        perm_ = perm_.drop_duplicates(subset=u_data_len-1, keep="first")
        print(perm_)

        perm_ = perm_.set_index(u_data_len-1)
        print(perm_)

        perm_ = perm_.stack().reset_index()
        print(perm_)

        perm_ = perm_.rename(columns={'level_1': 'user_', u_data_len-1: 'ind', k-1: 'label_ind'})
        print(perm_)

        # Time only the concatenation step.
        concat_start = timeit.default_timer()
        temp_indices = pd.concat([temp_indices, perm_], axis=0)
        concat_stop = timeit.default_timer()
        print('concat Time Completed at : ', concat_stop - concat_start)

    loop_stop = timeit.default_timer()
    print('Loop Time Completed at : ', loop_stop - loop_start)

    return temp_indices, user_split_indices
```
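The instrumented loop calls `pd.concat` once per user, and each call copies everything accumulated so far, so the total concatenation cost grows roughly quadratically with the number of users. A common pandas idiom is to collect the per-user frames in a list and concatenate once. A minimal sketch, reusing the original chain and timing with `time.perf_counter`; `build_temp_indices` is a hypothetical helper name and the groups are made-up:

```python
import itertools
import time

import pandas as pd


def build_temp_indices(user_split_indices, k=1):
    # Hypothetical helper: collect one frame per user, then concatenate once.
    frames = []
    for u_data in user_split_indices:
        n = len(u_data)
        frames.append(
            pd.DataFrame(itertools.permutations(u_data))
            .drop_duplicates(subset=n - 1, keep="first")
            .set_index(n - 1)
            .stack()
            .reset_index()
            .rename(columns={"level_1": "user_", n - 1: "ind", k - 1: "label_ind"})
        )
    # A single concat instead of one per iteration; empty input gives an empty frame.
    return pd.concat(frames, axis=0) if frames else pd.DataFrame()


groups = [[0, 2, 5], [3, 4]]  # made-up output of the filtered _groupby_user
start = time.perf_counter()
temp_indices = build_temp_indices(groups)
print(f"built {len(temp_indices)} rows in {time.perf_counter() - start:.4f} s")
```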