The above mentioned package does not implement a inverse nfft

The ndft is f_hat @ np.exp(-2j * np.pi * x * k[:, None])

The ndft_adjoint is f @ np.exp(2j * np.pi * k * x[:, None])

Let k = -N//2 + np.arange(N), and A = np.exp(-2j * np.pi * k * k[:, None])

A @ np.conj(A) = N * np.eye(N) (checked numerically)

Thus, for random x the adjoint transformation is equals to the inverse transform. The given reference paper provides a few options, I implemented Algorithm 1 CGNE, from page 9

import numpy as np # I have the habit to use np

def nfft_inverse(x, y, N, w = 1, L=100):

f = np.zeros(N, dtype=np.complex128);

r = y - nfft.nfft(x, f);

p = nfft.nfft_adjoint(x, r, N);

r_norm = np.sum(abs(r)**2 * w)

for l in range(L):

p_norm = np.sum(abs(p)**2 * w);

alpha = r_norm / p_norm

f += alpha * w * p;

r = y - nfft.nfft(x, f)

r_norm_2 = np.sum(abs(r)**2 * w)

beta = r_norm_2 / r_norm

p = beta * p + nfft.nfft_adjoint(x, w * r, N)

r_norm = r_norm_2;

#print(l, r_norm)

return f;

The algorithm converges slowly and poorly

plt.figure(figsize=(14, 7))

plt.title('inverse nfft error histogram')

#plt.scatter(x, f_hat, label='f(x)')

h_hat = nfft_inverse(x, f, N, L = 1)

plt.hist(f_hat - numpy.real(h_hat), bins=30, label='1 iteration')

h_hat = nfft_inverse(x, f, N, L = 10)

plt.hist(f_hat - numpy.real(h_hat), bins=30, label='10 iterations')

h_hat = nfft_inverse(x, f, N, L = 1000)

plt.hist(f_hat - numpy.real(h_hat), bins=30, label='1000 iterations')

plt.xlabel('error')

plt.ylabel('occurrencies')

plt.legend()

I tried also to use scipy minimization, to minimize the residual ||nfft(x, f) - y||**2 explicitly

import numpy as np # the habit

import scipy.optimize

def nfft_gradient_descent(x, y, N, L=10, tol=1e-8, method='CG'):

'''

compute $min || A @ f - y ||**2 via gradient descent

the gradient is

`A^H @ (A @ f - y)`

Multiply by A using nfft.nfft

'''

def cost(fpack):

f = fpack[0::2] + 1j * fpack[1::2]

u = np.sum(np.abs(nfft.nfft(x, f) - y)**2)

return u

def grad(fpack):

f = fpack[0::2] + 1j * fpack[1::2]

r = nfft.nfft(x, f) - y

u = nfft.nfft_adjoint(x, r, N)

return np.stack([np.real(u), np.imag(u)], axis=1).reshape(-1)

x0 = np.zeros([N, 2])

result = scipy.optimize.minimize(cost, x0=x0, jac=grad, tol=tol, method=method, options={'maxiter': L, 'disp': True})

return result.x[0::2] + 1j * result.x[1::2];

The results look similar, you can try by different methods or parameters by your self if you want. But I believe the transformation is ill conditioned because transformed residual is considerably reduced but the residual on the reconstructed values is large.

Edit 1

Is it basically true that you found that the there is not a true inverse to the algorithm then? I cannot obtain my original points? x != nfft(nfft_adjoint(x))

Please check the section 2.3 of the reference paper

Numerical comparison

Cris Luengo answer metioned another possibility, that is, instead of reconstructing f at the points x, you may reconstruct a resampled version at equidistant points using the ifft. So you already have three options, and I will do a quick comparison. Bear in mind that the plot shown there is based on a NFFT computed in 16k samples, while here I am using 1k samples.

Since the FFT method uses different points we cannot compare to the original signal, what I will do is to compare with the harmonic function without the noise. The variance of the noise is 0.01, so an exact reconstruction would lead to this mean squared error.

N = 1024

x = -0.5 + numpy.random.rand(N)

f_hat = numpy.sin(10 * 2 * numpy.pi * x) + .1*numpy.random.randn( N ) #Add some 'y' randomness to the sample

k = - N // 2 + numpy.arange(N)

f = nfft.nfft(x, f_hat)

print('nfft_inverse')

h_hat = nfft_inverse(x, f, len(x), L = 10)

print('10 iterations: ', np.mean((numpy.sin(10 * 2 * numpy.pi * x) - numpy.real(h_hat))**2))

h_hat = nfft_inverse(x, f, len(x), L = 100)

print('100 iterations: ', np.mean((numpy.sin(10 * 2 * numpy.pi * x) - numpy.real(h_hat))**2))

h_hat = nfft_inverse(x, f, len(x), L = 1000)

print('1000 iterations: ', np.mean((numpy.sin(10 * 2 * numpy.pi * x) - numpy.real(h_hat))**2))

print('nfft_gradient_descent')

h_hat = nfft_gradient_descent(x, f, len(x), L = 10)

print('10 iterations: ', np.mean((numpy.sin(10 * 2 * numpy.pi * x) - numpy.real(h_hat))**2))

h_hat = nfft_gradient_descent(x, f, len(x), L = 100)

print('100 iterations: ', np.mean((numpy.sin(10 * 2 * numpy.pi * x) - numpy.real(h_hat))**2))

h_hat = nfft_gradient_descent(x, f, len(x), L = 1000)

print('1000 iterations: ', np.mean((numpy.sin(10 * 2 * numpy.pi * x) - numpy.real(h_hat))**2))

#Reconstruct the original at a spaced grid based on nfft result using ifft

f_recon = - numpy.fft.fftshift(numpy.fft.ifft(numpy.fft.ifftshift(f_k))) / (N / N2)

x_recon = k / N;

print('using IFFT: ', np.mean((numpy.sin(10 * 2 * numpy.pi * x_recon) - numpy.real(f_recon))**2))

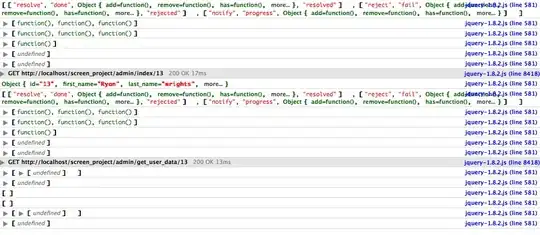

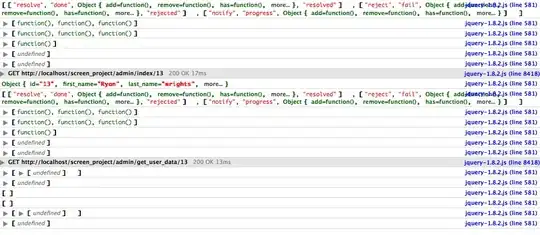

Results:

nfft_inverse

10 iterations: 0.0798988590351581

100 iterations: 0.05136853850272318

1000 iterations: 0.037316315280700896

nfft_gradient_descent

10 iterations: 0.08832834348902704

100 iterations: 0.05901599049633016

1000 iterations: 0.043921864589484

using IFFT: 0.49044932854606377

Another way to view is

plt.plot(numpy.sin(10 * 2 * numpy.pi * x_recon), numpy.real(f_recon), '.', label='ifft')

plt.plot(numpy.sin(10 * 2 * numpy.pi * x), numpy.real(nfft_gradient_descent(x, f, len(x), L = 5)), '.', label='gradient descent L=5')

plt.plot(numpy.sin(10 * 2 * numpy.pi * x), numpy.real(nfft_inverse(x, f, len(x), L = 5)), '.', label='nfft_inverse L=5')

plt.plot(numpy.sin(10 * 2 * numpy.pi * x), np.real(f_hat), '.', label='original')

plt.legend()

Even though the IFFT matrix is better conditioned, it gives results in a worse reconstruction of the signal. Also from the last plot it becomes more noticeable that there is a slight attenuation. Probably due to a systematic energy leak to the imaginary part (an error in my code??). Just a quick test, multiplying it by 1.3 gives better results