Here's one possible solution. This is in Python, but it should be clear enough for a Java port. We will apply a method called gain division. The idea is to build a model of the background and then divide each input pixel by that model. The output gain should be relatively constant across most of the image, which gets rid of most of the background color variation. We can then use a chain of morphological operations to clean up the result a little bit. Let's see the code:

```python
# Imports:
import cv2
import numpy as np

# OCR imports:
from PIL import Image
import pyocr
import pyocr.builders

# Image path:
path = "D://opencvImages//"
fileName = "c552h.png"

# Read the image in default (BGR color) mode:
inputImage = cv2.imread(path + fileName)
if inputImage is None:
    raise FileNotFoundError(path + fileName)

# Estimate the background (a local maximum) via morphological closing:
kernelSize = 5
maxKernel = cv2.getStructuringElement(cv2.MORPH_RECT, (kernelSize, kernelSize))
localMax = cv2.morphologyEx(inputImage, cv2.MORPH_CLOSE, maxKernel,
                            iterations=1, borderType=cv2.BORDER_REFLECT101)

# Perform gain division; where the background estimate is 0 the result is 0
# (np.divide with `where` avoids division-by-zero warnings):
gainDivision = np.divide(inputImage, localMax,
                         out=np.zeros(inputImage.shape, dtype=np.float64),
                         where=localMax != 0)

# Scale to [0, 255] and clip:
gainDivision = np.clip((255 * gainDivision), 0, 255)

# Convert the mat type from float to uint8:
gainDivision = gainDivision.astype("uint8")
```

The first step is gain division. The operations you need are straightforward: a morphological closing with a rectangular structuring element and some data type conversions. Be careful with the latter: the division produces floating-point ratios that must be scaled to [0, 255], clipped and only then converted back to `uint8`. This is the image you should see after the method is applied:
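The arithmetic behind gain division can be checked on a tiny synthetic array. This sketch assumes a purely multiplicative lighting model (pixel = illumination × reflectance) and, to isolate the division step, uses the known illumination as the background estimate instead of the morphological closing. `gain_divide` is a hypothetical helper name; it also shows the zero-safe division and the scale, clip, convert order:

```python
import numpy as np


def gain_divide(image: np.ndarray, background: np.ndarray) -> np.ndarray:
    """Divide an image by its background estimate and rescale to uint8.

    Pixels whose background estimate is 0 are set to 0 instead of
    producing a division-by-zero warning.
    """
    ratio = np.divide(
        image.astype(np.float64),
        background.astype(np.float64),
        out=np.zeros(image.shape, dtype=np.float64),
        where=background != 0,
    )
    # Scale to [0, 255], clip, and only then convert to uint8.
    return np.clip(255.0 * ratio, 0, 255).astype(np.uint8)


# Hypothetical 4x6 image: illumination grows from left to right (100..200),
# background reflectance is 1.0 and "text" pixels reflect half the light.
illumination = np.tile(np.arange(100, 201, 20, dtype=np.float64), (4, 1))
reflectance = np.ones_like(illumination)
reflectance[1:3, 1:5] = 0.5  # a dark 2x4 "character"
image = (illumination * reflectance).astype(np.uint8)

result = gain_divide(image, illumination.astype(np.uint8))
print(image[1])   # uneven row: 100, 60, 70, 80, 90, 200
print(result[1])  # flat row:   255, 127, 127, 127, 127, 255

# Zero guard: a 0 in the background estimate yields 0, not a warning.
print(gain_divide(np.array([[10]], np.uint8), np.array([[0]], np.uint8)))
```

The uneven background collapses to 255 everywhere, while the "text" maps to the same value (127) regardless of how bright its surroundings were.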

Very cool, the background is almost gone. Let's get a binary image using Otsu's thresholding:

```python
# Convert BGR (OpenCV's default channel order) to grayscale:
grayscaleImage = cv2.cvtColor(gainDivision, cv2.COLOR_BGR2GRAY)

# Get binary image via Otsu (inverted, so dark text becomes white):
_, binaryImage = cv2.threshold(grayscaleImage, 0, 255, cv2.THRESH_BINARY_INV + cv2.THRESH_OTSU)
```

This is the binary image:

We now have a nice image of the text edges. We can get white text on a black background if we Flood-Fill the background with white and then invert the image. However, we should be careful with the characters: if a character's outline is broken, the Flood-Fill will leak inside it and erase it. Let's first make sure our characters are closed by applying a morphological closing:

```python
# Set kernel (structuring element) size:
kernelSize = 3

# Set morph operation iterations:
opIterations = 1

# Get the structuring element:
morphKernel = cv2.getStructuringElement(cv2.MORPH_RECT, (kernelSize, kernelSize))

# Perform closing:
binaryImage = cv2.morphologyEx(binaryImage, cv2.MORPH_CLOSE, morphKernel, None, None, opIterations, cv2.BORDER_REFLECT101)
```

This is the resulting image:

As you can see, the edges are a lot stronger and, most importantly, closed. Now we can Flood-Fill the background with white. Here, the Flood-Fill seed point is located at the image origin (x = 0, y = 0), which assumes that the top-left pixel belongs to the background:

```python
# Flood-fill the background with white, starting at the top-left corner:
cv2.floodFill(binaryImage, mask=None, seedPoint=(0, 0), newVal=255)
```

We get this image:

We are almost there. As you can see, the holes inside some characters (e.g., the "a", "d" and "o") are not filled, and this can add noise to the OCR. Let's fill them. We can exploit the fact that these holes are all children of a parent contour: we can isolate the child contours and, again, apply a Flood-Fill to each of them. But first, do not forget to invert the image:

```python
# Invert image so target blobs are colored in white:
binaryImage = 255 - binaryImage

# Find the blobs on the binary image
# ([-2:] works with both OpenCV 3, which returns three values, and OpenCV 4, which returns two):
contours, hierarchy = cv2.findContours(binaryImage, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE)[-2:]

# Process the contours:
for i, c in enumerate(contours):

    # Get the index of this contour's parent (-1 means it has no parent):
    currentHierarchy = hierarchy[0][i][3]

    # Look only for children contours (the holes):
    if currentHierarchy != -1:

        # Get the contour bounding rectangle:
        boundRect = cv2.boundingRect(c)

        # Get the dimensions of the bounding rect:
        rectX = boundRect[0]
        rectY = boundRect[1]
        rectWidth = boundRect[2]
        rectHeight = boundRect[3]

        # Get the center of the bounding rect, which will act as
        # the seed point for the Flood-Fill:
        fx = rectX + 0.5 * rectWidth
        fy = rectY + 0.5 * rectHeight

        # Fill the hole:
        cv2.floodFill(binaryImage, mask=None, seedPoint=(int(fx), int(fy)), newVal=0)

# Write result to disk:
cv2.imwrite("text.png", binaryImage, [cv2.IMWRITE_PNG_COMPRESSION, 0])
```

This is the resulting mask:

Cool, let's apply the OCR. I'm using pyocr:

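```python
# Pick the first available OCR tool (e.g., Tesseract):
tools = pyocr.get_available_tools()
if not tools:
    raise RuntimeError("No OCR tool found; install Tesseract or Cuneiform.")
tool = tools[0]

# Assumed language; check tool.get_available_languages() for what is installed:
lang = "eng"

txt = tool.image_to_string(
    Image.open("text.png"),
    lang=lang,
    builder=pyocr.builders.TextBuilder()
)

print(txt)
```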

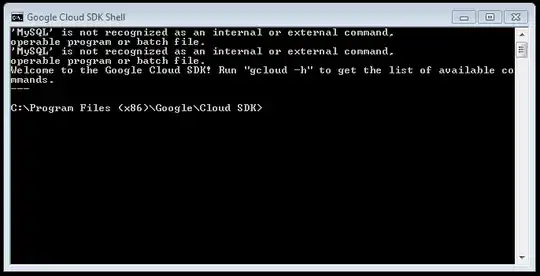

The output:

```
Landorus
```