I'm running n trials of a Keras model with k features and applying SHAP's DeepExplainer to the model after each trial. The data is the same in every trial, but it is randomly split between the training and test sets each time. I'm trying to work out the best way to combine the resulting Shapley values across trials: either by summing them directly, feature by feature, and then averaging, or by first scaling the Shapley values within each trial and then summing and averaging.
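To make the two options concrete, here is a minimal sketch that uses synthetic arrays in place of real DeepExplainer output. The helper names (`mean_abs_importance`, `combine_raw`, `combine_scaled`) and the choice of mean absolute Shapley value as the per-trial summary are my own assumptions for illustration; they are not part of shap.

```python
import numpy as np

def mean_abs_importance(shap_vals):
    """Summarize one trial: mean |SHAP| per feature.

    shap_vals: array of shape (n_observations, n_features) for one output class.
    """
    return np.abs(shap_vals).mean(axis=0)

def combine_raw(trials):
    """Option 1: average the per-feature importances across trials as they are."""
    return np.mean([mean_abs_importance(t) for t in trials], axis=0)

def combine_scaled(trials):
    """Option 2: rescale each trial so its importances sum to 1, then average."""
    scaled = []
    for t in trials:
        imp = mean_abs_importance(t)
        scaled.append(imp / imp.sum())
    return np.mean(scaled, axis=0)

# Synthetic stand-in (not real model output): 5 trials, 40 test rows, k = 3.
rng = np.random.default_rng(0)
trials = [rng.normal(scale=[1.0, 0.5, 0.1], size=(40, 3)) for _ in range(5)]

raw = combine_raw(trials)
scaled = combine_scaled(trials)
print("raw:   ", raw)
print("scaled:", scaled)
```

In the scaled version every trial contributes a vector that sums to 1, so a trial whose Shapley values happen to be larger in magnitude cannot dominate the average. The raw version keeps the original units of the model output.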

My initial thought was that, since the "baseline is always relative based on the average of all predictions" (from here), the overall average would be skewed and there might be a better way of combining the results. On the other hand, even though each model sees a different train/test split and therefore has a different relative "baseline", I wonder whether averaging over many models gives a result with as much interpretive value as a single model. Is that the case?
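The baseline concern can be illustrated with a toy case. The sketch below assumes a fixed linear model with independent features, for which the Shapley values have the closed form `w[i] * (x[i] - mean(background[:, i]))`; this stands in for DeepExplainer, which it does not call. The function `linear_shap`, the weights, and the data are all hypothetical. The same weights are reused for both splits on purpose, to isolate the effect of the changing baseline.

```python
import numpy as np

def linear_shap(w, b, background, x):
    """Exact Shapley values for f(x) = w @ x + b, assuming independent features.

    Returns (baseline, phi), where baseline is the mean prediction over the
    background sample and phi[i] = w[i] * (x[i] - mean(background[:, i])).
    """
    mu = background.mean(axis=0)
    baseline = w @ mu + b
    phi = w * (x - mu)
    return baseline, phi

rng = np.random.default_rng(1)
data = rng.normal(size=(200, 3))
w, b = np.array([2.0, -1.0, 0.5]), 0.3   # hypothetical fixed model
x = np.array([1.0, 1.0, 1.0])            # observation to explain

# Two random splits of the same data play the role of two trials.
perm_a, perm_b = rng.permutation(200), rng.permutation(200)
base_a, phi_a = linear_shap(w, b, data[perm_a[:150]], x)
base_b, phi_b = linear_shap(w, b, data[perm_b[:150]], x)

prediction = w @ x + b
# Each trial has its own baseline, but baseline + sum(phi) recovers the prediction.
print(base_a, phi_a, base_a + phi_a.sum())
print(base_b, phi_b, base_b + phi_b.sum())

# Averaging baselines and Shapley values together keeps that property.
avg_base = (base_a + base_b) / 2
avg_phi = (phi_a + phi_b) / 2
print(avg_base + avg_phi.sum(), prediction)
```

In this toy setting the averaged Shapley values remain additive relative to the averaged baseline, which is part of why I suspect plain averaging may still be interpretable. Whether this carries over to separately trained Keras models is exactly what I'm asking.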

Alternatively, would scaling the features per model offer any benefit? Again from here, I can (save for the caveats) scale a feature's Shapley values for a single observation in a model. It seems, then, that after summing over all observations I should be able to scale each feature's Shapley values across the bins so that the values for each feature sum to 1. If I can scale by feature within a model in this way, can I then average the models on that scale? One benefit I see is that all models would carry equal weight, since the features are scaled within each. Is this a valid approach, and if so, does it offer any benefit over summing the raw Shapley values, feature by feature, over all models?
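A minimal sketch of the per-feature scaling I have in mind, again on synthetic data. It assumes the explainer returns a list with one array per output class, each of shape (observations, features), and it sums absolute values so that positive and negative contributions do not cancel; both are my assumptions. `feature_shares` is a hypothetical helper, not a shap function.

```python
import numpy as np

def feature_shares(bin_vals):
    """Per-feature share of attribution across output bins for one trial.

    bin_vals: list of arrays, one per output class, each of shape
    (n_observations, n_features).
    Returns an array of shape (n_classes, n_features) whose columns sum to 1.
    """
    # Sum |SHAP| over observations within each bin: (n_classes, n_features).
    totals = np.stack([np.abs(b).sum(axis=0) for b in bin_vals])
    # Normalize each feature (column) across bins.
    return totals / totals.sum(axis=0, keepdims=True)

# Synthetic stand-in for the explainer output of several trials.
rng = np.random.default_rng(2)
n_trials, n_classes, n_obs, k = 4, 3, 50, 5
all_trials = [
    [rng.normal(size=(n_obs, k)) for _ in range(n_classes)]
    for _ in range(n_trials)
]

# Scale within each trial, then average the scaled shares across trials.
per_trial = np.stack([feature_shares(t) for t in all_trials])
avg_shares = per_trial.mean(axis=0)
print(avg_shares.sum(axis=0))  # one total per feature
```

Because every trial's columns sum to 1 before averaging, the averaged shares also sum to 1 per feature, and each trial carries equal weight regardless of the magnitude of its raw Shapley values.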

To be clear about what I mean by bins: they are the arrays in the list returned by the explainer, whose length equals the number of output classes:

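```python
import shap

explainer = shap.DeepExplainer(model, X_train)  # background: this trial's training split
ShapleyBinVals = explainer.shap_values(X_test)  # list with one array per output class
Bin = ShapleyBinVals[n]                         # Shapley values for bin n
```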

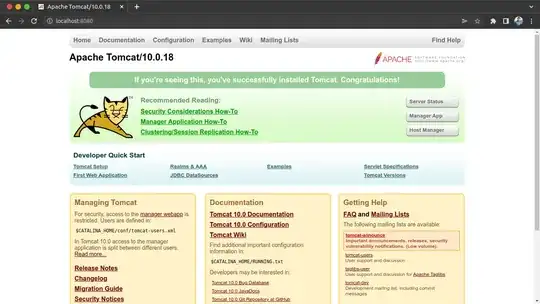

where n is the number of output classifications. Here's a bar plot of the scaled output:

Notice that for each feature, e.g. PSWQ_2, the y-value is a proportion, and the proportions over all bins sum to 1.