Estimating from IOPS alone can cost you some accuracy. Cassandra carries significant IOPS overhead: a single read can touch multiple SSTables, and background compaction and repair also consume IOPS. None of this is a factor in Amazon Keyspaces. Additionally, Keyspaces scales up and down based on utilization, so a measurement taken at a single point in time gives you only one dimension of cost. Take an average over a long enough period to cover the peaks and valleys of your workload; workloads tend to look like sine or cosine waves rather than a flat line.

Gathering the following metrics will help provide more accurate cost estimates.

- Results of the average row size report (below)

- Table live space in GB divided by the replication factor

- Average writes per second over an extended period

- Average reads per second over an extended period

Storage size

Table live space in GBs

This method uses Apache Cassandra sizing statistics to determine the data size in Amazon Keyspaces. Apache Cassandra exposes storage metrics via Java Management Extensions (JMX). You can capture these metrics by using third-party monitoring tools such as DataStax OpsCenter, Datadog, or Grafana.

Capture the table live space from the cassandra.live_disk_space_used metric. Divide the live table size by the replication factor of your data (most likely 3) to estimate the Keyspaces storage size. Keyspaces automatically replicates data three times across multiple AWS Availability Zones, but pricing is based on the size of a single replica.

For example, suppose the table live space is 5 TB (5,000 GB) with a replication factor of 3. For us-east-1, at the $0.30 per GB-month storage price used in this example, you would use the following formula:

(Table live space in GB / Replication Factor) * region storage price per GB

5000 / 3 * 0.30 = $500 per month
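The storage formula above can be sketched in a few lines of Python. The function name and parameters are hypothetical, and the 0.30 price is only the value used in this example; check the pricing page for your region before relying on it.

```python
def monthly_storage_cost(live_space_gb: float,
                         replication_factor: int,
                         price_per_gb_month: float) -> float:
    """Estimate monthly storage cost from Cassandra live space.

    Cassandra's live space counts every replica, while pricing is based
    on a single replica, so divide by the replication factor first.
    """
    if replication_factor < 1:
        raise ValueError("replication_factor must be at least 1")
    single_replica_gb = live_space_gb / replication_factor
    return single_replica_gb * price_per_gb_month


if __name__ == "__main__":
    # Values from the worked example: 5,000 GB live space, RF 3.
    # The price is an assumption taken from the example, not a quote.
    cost = monthly_storage_cost(5000, 3, 0.30)
    print(f"Estimated storage cost: ${cost:.2f} per month")
```

Keeping the price as a parameter makes it easy to rerun the estimate for another region.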

Collect the Row Size

Results of the Average row size report

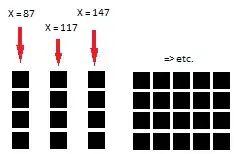

Use the following script to collect row size metrics for your tables. The script exports table data from Apache Cassandra by using cqlsh and then uses awk to calculate the min, max, average, and standard deviation of row size over a configurable sample set of table data. Update the username, password, keyspace name, and table name placeholders with your cluster and table information. You can use dev and test environments if they contain similar data.

https://github.com/aws-samples/amazon-keyspaces-toolkit/blob/master/bin/row-size-sampler.sh

./row-size-sampler.sh YOURHOST 9042 -u "sampleuser" -p "samplepass"

The output will be used in the request unit calculation below. If your data model uses large blobs, divide the average size by 2, because the export represents blob values as hexadecimal characters (two characters per byte).
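As a rough illustration of what the sampler reports, the following sketch computes the same four statistics from a list of sampled row sizes and applies the blob adjustment described above. It is not the toolkit script; the function names are hypothetical, and the use of a population standard deviation is an assumption.

```python
import statistics


def summarize_row_sizes(sizes_bytes):
    """Return min, max, average, and standard deviation of sampled row sizes."""
    if not sizes_bytes:
        raise ValueError("need at least one sampled row size")
    return {
        "min": min(sizes_bytes),
        "max": max(sizes_bytes),
        "avg": statistics.fmean(sizes_bytes),
        # Assumption: population standard deviation over the sample set.
        "stddev": statistics.pstdev(sizes_bytes),
    }


def estimated_row_bytes(avg_bytes, blob_heavy=False):
    """Halve the average for blob-heavy rows exported as hex text."""
    return avg_bytes / 2 if blob_heavy else avg_bytes


if __name__ == "__main__":
    stats = summarize_row_sizes([800, 900, 1000])  # made-up sample sizes
    print(stats)
    print(estimated_row_bytes(stats["avg"], blob_heavy=True))
```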

Read/write request metrics

Average writes per second/Total writes per month

Average reads per second/Total reads per month

Capturing the read and write request rate of your tables will help determine capacity and scaling requirements for your Amazon Keyspaces tables.

Keyspaces is serverless, and you pay for only what you use. The price of Keyspaces read/write throughput is based on the number and size of requests.

To gather the most accurate utilization metrics from your existing Cassandra cluster, you will capture the average requests per second (RPS) for coordinator-level read and write operations. Take an average over an extended period of time for a table to capture peaks and valleys of workload.

Average write requests per second over two weeks = 100 writes per second

Average read requests per second over two weeks = 200 reads per second
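If your monitoring tool reports cumulative coordinator-level request counts rather than a ready-made rate, a long-window average can be derived from the first and last samples. This is a minimal sketch under that assumption; the function name and the sample format are hypothetical.

```python
def average_rps(samples):
    """Average requests per second across a window of counter samples.

    samples: list of (unix_seconds, cumulative_request_count) tuples,
    ordered by time. Only the first and last samples are used, so peaks
    and valleys inside the window are averaged out.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    (start_time, start_count) = samples[0]
    (end_time, end_count) = samples[-1]
    elapsed = end_time - start_time
    if elapsed <= 0:
        raise ValueError("samples must span a positive time window")
    if end_count < start_count:
        # A node restart resets the counter; handle that window separately.
        raise ValueError("counter decreased; possible reset in window")
    return (end_count - start_count) / elapsed


if __name__ == "__main__":
    two_weeks = 14 * 24 * 60 * 60  # seconds
    # Made-up counter values for illustration only.
    samples = [(0, 1_000_000), (two_weeks, 1_000_000 + 241_920_000)]
    print(average_rps(samples))
```

Run this per table and per operation type (reads and writes) to get the two averages used in the calculations.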

LOCAL_QUORUM READS

=READ REQUEST PER SEC * ROUNDUP(ROW SIZE Bytes / 4096) * RCU per hour price * HOURS PER DAY * DAYS PER MONTH

200 * ROUNDUP(900 bytes / 4096) * 0.00015 * 24 * 30.41 = $21.90 per month

LOCAL_ONE READS

Using eventually consistent reads can save you half the cost of your read workload, because a LOCAL_ONE read consumes half as many read capacity units as a LOCAL_QUORUM read of the same row.

=READ REQUEST PER SEC * ROUNDUP(ROW SIZE Bytes / 4096) * 0.5 * RCU per hour price * HOURS PER DAY * DAYS PER MONTH

200 * ROUNDUP(900 bytes / 4096) * 0.5 * 0.00015 * 24 * 30.41 = $10.95 per month

LOCAL_QUORUM WRITES

=WRITE REQUEST PER SEC * ROUNDUP(ROW SIZE Bytes / 1024) * WCU per hour price * HOURS PER DAY * DAYS PER MONTH

100 * ROUNDUP(900 bytes / 1024) * 0.00075 * 24 * 30.41 = $54.74 per month

Storage: $500 per month

Eventually consistent reads: $10.95 per month

Writes: $54.74 per month

Total: $565.69 per month
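The throughput formulas can be turned into a small calculator so the estimate is easy to rerun with your own rates and row size. This is a sketch, not an official pricing tool: the function names are hypothetical, the per-unit prices are the example values from this section, and a LOCAL_ONE read is modeled as half a read unit per 4 KB, an assumption that matches the half-cost statement above.

```python
import math

HOURS_PER_DAY = 24
DAYS_PER_MONTH = 30.41  # average month length used in this section


def read_units_per_request(row_bytes, consistency="LOCAL_QUORUM"):
    """Read capacity units consumed by one read of a row."""
    units = max(1, math.ceil(row_bytes / 4096))  # one unit per 4 KB
    if consistency == "LOCAL_QUORUM":
        return float(units)
    if consistency == "LOCAL_ONE":
        return units * 0.5  # assumption: eventual reads cost half
    raise ValueError(f"unsupported consistency: {consistency}")


def write_units_per_request(row_bytes):
    """Write capacity units consumed by one write of a row."""
    return max(1, math.ceil(row_bytes / 1024))  # one unit per 1 KB


def monthly_throughput_cost(requests_per_sec, units_per_request,
                            price_per_unit_hour):
    """Monthly cost of sustaining an average request rate."""
    return (requests_per_sec * units_per_request * price_per_unit_hour
            * HOURS_PER_DAY * DAYS_PER_MONTH)


if __name__ == "__main__":
    row_bytes = 900
    # Example rates and prices from this section; verify current pricing.
    reads = monthly_throughput_cost(
        200, read_units_per_request(row_bytes, "LOCAL_ONE"), 0.00015)
    writes = monthly_throughput_cost(
        100, write_units_per_request(row_bytes), 0.00075)
    storage = 500.0
    print(f"reads=${reads:.2f} writes=${writes:.2f} "
          f"total=${storage + reads + writes:.2f}")
```

Because the unit count rounds up, rows just over a 4 KB or 1 KB boundary cost a full extra unit per request, which is worth checking against your row size distribution.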

To further reduce cost, consider using client-side compression on writes for large blob data. If you have many small rows, consider using collections to fit more data into a single row.

Check out the pricing page for the most up-to-date information.