I have code that uses `ProcessPoolExecutor` to process multiple tables.

Some of the tables are light, so processing them is quick. For others, though, the code really struggles and CPU usage can stay at 99% for a while.

I already call `sleep` wherever I can and have even lowered the number of workers, but none of it helped. So I wonder: could using `nice` help?
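For context, this is roughly how I would apply `nice` from Python. Keep in mind that niceness only changes CPU scheduling priority. This is a minimal sketch, assuming each worker lowers its own priority with `os.nice` at the start of a task. `TARGET_NICENESS` and `heavy_table_job` are hypothetical names. On Python 3.6, `ProcessPoolExecutor` has no `initializer` argument (it was added in 3.7), so the call goes inside the task and is written to be safe to repeat.

```python
import os
from concurrent.futures import ProcessPoolExecutor

TARGET_NICENESS = 10  # hypothetical value; 19 is the lowest priority on Linux


def lower_priority(target=TARGET_NICENESS):
    """Raise this process's niceness to `target` if needed; return the niceness."""
    current = os.nice(0)  # an increment of 0 just reports the current value
    if current < target:
        # Workers are reused across tasks, so only add what is still missing.
        current = os.nice(target - current)
    return current


def heavy_table_job(n):
    """Hypothetical stand-in for one CPU-heavy table job."""
    niceness = lower_priority()
    total = sum(i * i for i in range(n))  # placeholder CPU-bound work
    return niceness, total


if __name__ == "__main__":
    with ProcessPoolExecutor(max_workers=2) as pool:
        for niceness, total in pool.map(heavy_table_job, [10, 1_000_000]):
            print("niceness:", niceness, "result:", total)
```

Only the worker processes are reniced here; the parent process keeps its original priority.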

Unfortunately, the code is quite long, so this excerpt is all I can share:

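    import os
    from concurrent.futures import ProcessPoolExecutor

    # env_tables_dict, nodes, NUM_OF_PROCESS_WORKERS, TABLES_COLUMNS_MAPPER and
    # handle_sstable_group_files_per_node are defined elsewhere in my code.

    def process_sstables_per_node(env_tables_dict, nodes, process_executor):
        # One future per (node, table_type) pair, keyed by "node/table_type".
        for table_type in TABLES_COLUMNS_MAPPER.keys():
            for node in nodes:
                key = os.path.join(node, table_type)
                env_tables_dict[key] = process_executor.submit(
                    handle_sstable_group_files_per_node, node, table_type)

    # Submit all the table jobs to a pool of worker processes.
    with ProcessPoolExecutor(max_workers=NUM_OF_PROCESS_WORKERS) as process_executor:
        process_sstables_per_node(env_tables_dict, nodes, process_executor)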
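To make the structure reproducible without my real data, here is a minimal self-contained sketch of the same submit-per-(node, table type) pattern as the snippet above. `TABLES_COLUMNS_MAPPER`, the node names, `run` and the body of `handle_sstable_group_files_per_node` are hypothetical stand-ins, not my actual code.

```python
import os
from concurrent.futures import ProcessPoolExecutor

NUM_OF_PROCESS_WORKERS = 2
# Hypothetical stand-ins for the real table types and their columns.
TABLES_COLUMNS_MAPPER = {"table_a": ["col1"], "table_b": ["col1", "col2"]}


def handle_sstable_group_files_per_node(node, table_type):
    """Hypothetical stand-in: the real function parses the node's sstables."""
    return "processed {} on {}".format(table_type, node)


def process_sstables_per_node(env_tables_dict, nodes, process_executor):
    # One future per (node, table_type) pair, keyed by "node/table_type".
    for table_type in TABLES_COLUMNS_MAPPER:
        for node in nodes:
            key = os.path.join(node, table_type)
            env_tables_dict[key] = process_executor.submit(
                handle_sstable_group_files_per_node, node, table_type)


def run(nodes):
    """Submit every job, wait for the pool, then collect results by key."""
    env_tables_dict = {}
    with ProcessPoolExecutor(max_workers=NUM_OF_PROCESS_WORKERS) as executor:
        process_sstables_per_node(env_tables_dict, nodes, executor)
        # Leaving the with block calls shutdown(wait=True) on the pool.
    return {key: future.result() for key, future in env_tables_dict.items()}


if __name__ == "__main__":
    for key, result in sorted(run(["node1", "node2"]).items()):
        print(key, "->", result)
```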

I'm asking because when I collect the results with `future.result()`, I get an error showing that my subprocesses stopped partway through their work. For example:

    Traceback (most recent call last):
      File "main.py", line 49, in main
        future.result(timeout=RESULT_TIMEOUT * TIME_CONVERTOR * TIME_CONVERTOR)
      File "/usr/lib/python3.6/concurrent/futures/_base.py", line 425, in result
        return self.__get_result()
      File "/usr/lib/python3.6/concurrent/futures/_base.py", line 384, in __get_result
        raise self._exception
    concurrent.futures.process.BrokenProcessPool: A process in the process pool was terminated abruptly while the future was running or pending.
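For reference, the same error can be reproduced with a minimal sketch that kills one worker with `SIGKILL`, the signal the Linux OOM killer sends. `job` and `collect` are hypothetical names. Once any worker dies this way, the pool is marked broken and every future that had not finished fails with `BrokenProcessPool`. That would explain why all of the jobs appear to stop at once.

```python
import os
import signal
from concurrent.futures import ProcessPoolExecutor
from concurrent.futures.process import BrokenProcessPool


def job(n):
    """Hypothetical job; a negative n simulates the worker being killed."""
    if n < 0:
        # The Linux OOM killer also ends its victim with SIGKILL.
        os.kill(os.getpid(), signal.SIGKILL)
    return n * 2


def collect(values, timeout=60):
    """Map each value to its result, or to "BrokenProcessPool" if the pool broke."""
    outcomes = {}
    futures = {}
    with ProcessPoolExecutor(max_workers=2) as executor:
        for value in values:
            try:
                futures[value] = executor.submit(job, value)
            except BrokenProcessPool:  # submit() also fails once the pool is broken
                outcomes[value] = "BrokenProcessPool"
        for value, future in futures.items():
            try:
                outcomes[value] = future.result(timeout=timeout)
            except BrokenProcessPool:
                outcomes[value] = "BrokenProcessPool"
    return outcomes


if __name__ == "__main__":
    print(collect([1, 2, -1, 3]))
```

Which of the other jobs finish before the pool breaks depends on timing, so the printed dictionary can differ between runs.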

I'm using Ubuntu 18.04.3 LTS.

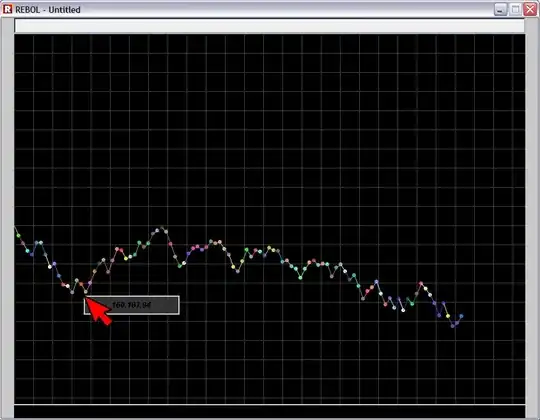

My OOM error from the command dmesg -T | egrep -i 'killed process'
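To find out which table pushes a worker out of memory, each job's peak allocation could be logged. This is a minimal sketch, assuming the heavy allocations are made through allocators that `tracemalloc` traces (memory allocated elsewhere, for example by some C extensions, may not be counted). `measure_peak` and `build_table` are hypothetical names; `build_table` stands in for a job like `handle_sstable_group_files_per_node`.

```python
import tracemalloc
from concurrent.futures import ProcessPoolExecutor


def measure_peak(func, *args):
    """Run func(*args) and return (result, peak traced bytes during this call)."""
    tracemalloc.start()
    try:
        result = func(*args)
        _, peak = tracemalloc.get_traced_memory()
    finally:
        # stop() also clears the traces, so each call is measured from zero.
        tracemalloc.stop()
    return result, peak


def build_table(n_rows):
    """Hypothetical stand-in for loading one table into memory."""
    rows = [list(range(10)) for _ in range(n_rows)]
    return len(rows)


if __name__ == "__main__":
    sizes = [10, 100_000]
    with ProcessPoolExecutor(max_workers=2) as executor:
        futures = {n: executor.submit(measure_peak, build_table, n) for n in sizes}
        for n, future in futures.items():
            rows, peak = future.result()
            print("{} rows: peak {:.1f} MiB".format(rows, peak / 2**20))
```

Wrapping each submitted job this way would show which tables need the most memory, independently of how much CPU they use.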