How can I load multiple tables into Elasticsearch with Logstash, keeping every row of each table?

Using Logstash, I want to load multiple tables into Elasticsearch. I configured several jdbc inputs in one pipeline, but only one value is saved per table.

I tried the answers to this Stack Overflow question, but they did not solve my problem:

-> multiple inputs on logstash jdbc

This is my config file. I wrote and ran this configuration myself.

    input {
      jdbc {
        jdbc_driver_library => "/usr/share/java/mysql-connector-java-8.0.23.jar"
        jdbc_driver_class => "com.mysql.jdbc.Driver"
        jdbc_connection_string => "jdbc:mysql://localhost:3306/db_name?useSSL=false&user=root&password=1234"
        jdbc_user => "root"
        jdbc_password => "1234"
        schedule => "* * * * *"
        statement => "select * from table_name1"
        tracking_column => "table_name1"
        use_column_value => true
        clean_run => true
      }
      jdbc {
        jdbc_driver_library => "/usr/share/java/mysql-connector-java-8.0.23.jar"
        jdbc_driver_class => "com.mysql.jdbc.Driver"
        jdbc_connection_string => "jdbc:mysql://localhost:3306/db_name?useSSL=false&user=root&password=1234"
        jdbc_user => "root"
        jdbc_password => "1234"
        schedule => "* * * * *"
        statement => "select * from table_name2"
        tracking_column => "table_name2"
        use_column_value => true
        clean_run => true
      }
      jdbc {
        jdbc_driver_library => "/usr/share/java/mysql-connector-java-8.0.23.jar"
        jdbc_driver_class => "com.mysql.jdbc.Driver"
        jdbc_connection_string => "jdbc:mysql://localhost:3306/db_name?useSSL=false&user=root&password=1234"
        jdbc_user => "root"
        jdbc_password => "1234"
        schedule => "* * * * *"
        statement => "select * from table_name3"
        tracking_column => "table_name3"
        use_column_value => true
        clean_run => true
      }
    }

    output {
      elasticsearch {
        hosts => "localhost:9200"
        index => "aws_05181830_2"
        document_type => "%{type}"
        document_id => "{%[@metadata][document_id]}"
      }
      stdout {
        codec => rubydebug
      }
    }

Problem

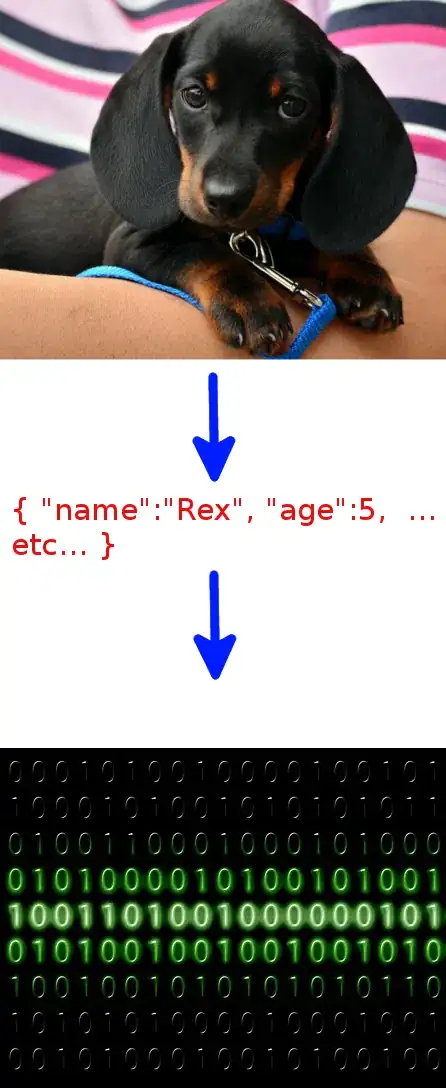

1. As the picture shows, only one value is saved per table.

2. When data from a new table arrives, the value saved from the existing table disappears.

My goal

How can I save the data from each table properly, without duplicates?
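
For reference, here is a minimal sketch of the direction I think this should take. It is not a confirmed fix. Two things in the config above stand out. First, Logstash field references in strings are written `%{[field]}`, so `"{%[@metadata][document_id]}"` is sent as a literal string, every event gets the same `_id`, and each new document overwrites the previous one. Second, `tracking_column` expects a column name, not a table name. The sketch assumes each table has a numeric, increasing primary key column named `id`. The `type` value, the `last_run_metadata_path` location, and the `%{type}_%{id}` id scheme are illustrative choices rather than anything from my real setup.

```
input {
  jdbc {
    jdbc_driver_library => "/usr/share/java/mysql-connector-java-8.0.23.jar"
    # Driver class name used by Connector/J 8.x
    jdbc_driver_class => "com.mysql.cj.jdbc.Driver"
    jdbc_connection_string => "jdbc:mysql://localhost:3306/db_name?useSSL=false"
    jdbc_user => "root"
    jdbc_password => "1234"
    schedule => "* * * * *"
    # Assumption: "id" is a numeric, increasing primary key in table_name1
    statement => "SELECT * FROM table_name1 WHERE id > :sql_last_value ORDER BY id"
    use_column_value => true
    tracking_column => "id"
    # Assumption: one state file per input so the inputs do not share sql_last_value
    # (this path is hypothetical)
    last_run_metadata_path => "/tmp/.logstash_jdbc_last_run_table_name1"
    # Marks each event with the table it came from
    type => "table_name1"
  }
  # Repeat the jdbc block for table_name2 and table_name3,
  # changing the statement, last_run_metadata_path, and type.
}

output {
  elasticsearch {
    hosts => "localhost:9200"
    index => "aws_05181830_2"
    # Unique per table and row, so rows from different tables cannot collide
    document_id => "%{type}_%{id}"
  }
  stdout {
    codec => rubydebug
  }
}
```

With an id built from the table name and the primary key, re-running the same query would update the existing documents instead of creating duplicates. I left out `document_type` in the sketch because mapping types are deprecated in recent Elasticsearch versions, and `clean_run` because it resets the stored tracking value.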