Intro:

Some of you may have noticed that something has broken in relation to the querySelectorAll method of MSHTML.HTMLDocument from MSHTML.Dll (via a Microsoft HTML Document Library reference). This, I believe, has happened in the last month. It may not affect all users and I will update this Q&A as I get more info on which versions etc are affected. Please feel free to comment below with your set-up and whether working or not for both late-bound and early-bound (as per code in answer)

Accessing DispStaticNodeList methods:

Traditionally, at least in my experience, it has been the norm to hold a reference to the DispStaticNodeList, which is what querySelectorAll returns, in a generic late-bound Object type:

E.g.

Dim nodeList1 As Object

Set nodeList1 = html.querySelectorAll("a")

where html is an instance of MSHTML.HTMLDocument.

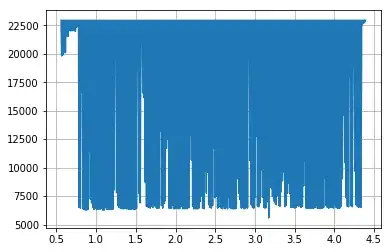

As you can see from the Locals window, you get the expected nodeList shown:

You could then access the list of the document's elements, that match the specified group of selectors, with .item(index), and get the number of items matched with .Length. E.g.

Debug.Print nodeList1.item(0).innerText

Debug.Print nodeList1.Length

What happens now?

Attempts to access the methods, via late bound Object, and its underlying interfaces, lead to either an Object required, when using the .item() method call, or Null when querying the .Length(). E.g.

nodeList1.item(0).innertext ' => Run-time error '424': Object required

Debug.Print nodeList1.Length ' => Null

This happens when you hold a reference through assigning to a variable.

What you can do:

You can use With and work off html, avoiding the Object class

With html.querySelectorAll("a")

For i = 0 To .Length - 1

Debug.Print .Item(i).innerText

Next

End With

So, I think the problem is very much about the Object data type and its underlying interfaces. And possibly, something about this has broken in relation to MSHTML, and most likely, the now no longer supported, Internet Explorer, which sits in the background:

However, this is not desirable, as you parse, and re-parse, the same HTML, during the loop, losing much of the efficiency that its gained by choosing css selectors over traditional methods e.g. getElementsByClassName. Those traditional methods remain intact.

Why do some of us care?

Modern browsers (and even IE8 onwards) support faster node matching through use of css selectors. It seems reasonable to assume that this carried over into the DOM parsers with MSHTML.HTMLDocument. So, you have faster matching, combined with more expressive and concise syntax (none of those long chained method calls e.g. getElementsByClassName("abc")(0).getElementsByTagName("def")(0).....), the ability to return more desired nodes, without repeated calls (in the prior example you will only get def as children of the first element with class abc, rather than all children, with tag def, of all elements with class abc, which you would get with querySelectorAll(".abc def"). And, you lose the flexibility to specify much more complex and specific patterns for node matching e.g. querySelectorAll(".abc > def + #ghi). For those interested, you can read more about those selectors on MSDN.

Question:

So, how does one avoid re-parsing, and hold the reference to the returned list of matched nodes? I have found nothing on the internet, despite quite a bit of searching, that documents this recent change in behaviour. It is also a very recent change and that likely only affects a small user base.

I hope the above satisfies the need to demonstrate research into the problem.

My set-up:

OS Name Microsoft Windows 10 Pro

Version 10.0.19042 Build 19042

System Type x64-based PC

Microsoft® Excel® 2019 MSO (16.0.13929.20206) 32-bit (Microsoft Office Professional Plus)

Version 2104 Build 13929.20373

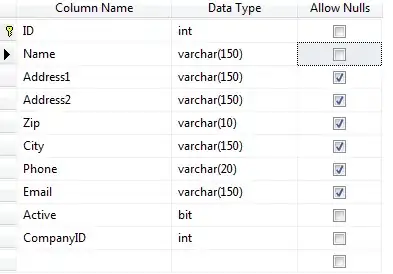

mshtml.dll info as per image

Not affected (TBD):

- Office Professional plus 2013. Win 7, 32 bit, MSHTML.dll 11.0.9600.19597