Version: `argoproj/argocd:v1.8.7`

I have two Helm charts deployed as applications: one with an Ingress, and one with a Deployment/Service/ConfigMap. Both use an automated sync policy with prune and self-heal enabled. When I delete them from the Argo CD dashboard, the resources are deleted and are no longer on the Kubernetes cluster. However, the status on the dashboard is stuck at Deleting.

If I click Sync, it shows `Unable to deploy revision: application is deleting`. Any ideas why it is stuck in the Deleting status even though all resources have already been deleted? Is there a way to refresh the dashboard so the status reflects the actual state?

Thanks!

================

Update:

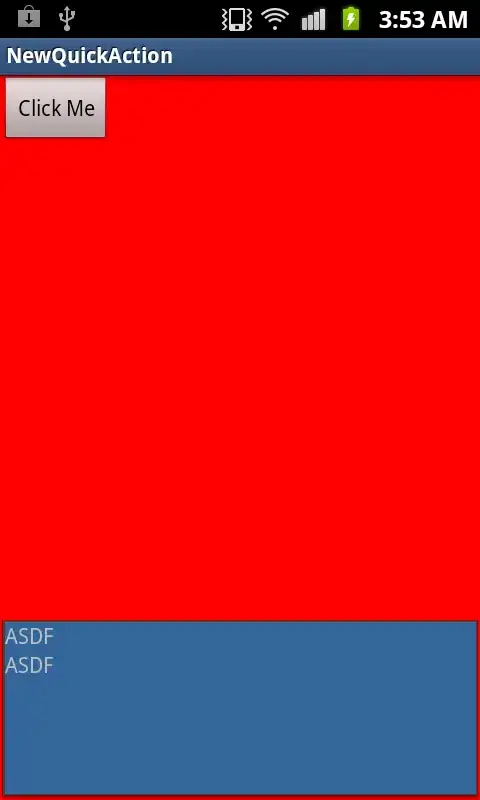

The screenshot above was taken after doing a cascade delete. I've removed the app names, which is why some parts are white.

Running `kubectl get all -A` shows that none of the resources are present anymore (e.g. the cm, svc, deploy, etc. are all gone).