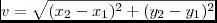

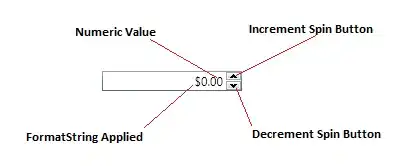

I have a depth image from an ifm 3D camera which utilizes a time-of-flight concept to capture depth images. The camera comes with a software which showcases the image as seen below:

I was able to extract the depth data from the camera and have been trying to recreate that representation, without success. No matter how I normalize the data range or change the data type, I always end up with an image that is "darker" in the center and gets lighter toward the edges. The color range doesn't match either, for some reason. Here's the main code I tried:

    import cv2
    import numpy as np

    # Read the depth file at its native bit depth (dist_path is the path to the depth image)
    dist = cv2.imread(dist_path, cv2.IMREAD_ANYDEPTH)

    # Other ranges I tried:
    # cv2.normalize(dist, dist, 0, 65535, cv2.NORM_MINMAX)
    # cv2.normalize(dist, dist, 0, 255, cv2.NORM_MINMAX)
    cv2.normalize(dist, dist, 1440, 1580, cv2.NORM_MINMAX)

    # Convert to 8-bit and apply a colormap
    dist = dist.astype(np.uint8)
    dist = cv2.applyColorMap(dist, cv2.COLORMAP_HSV)
    # dist = cv2.cvtColor(dist, cv2.COLOR_HSV2BGR)

    cv2.imshow("out", dist)
    cv2.waitKey(0)

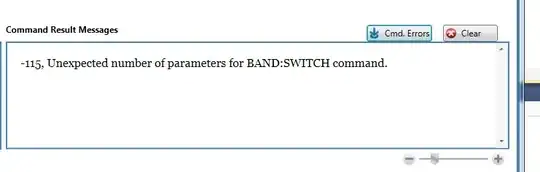

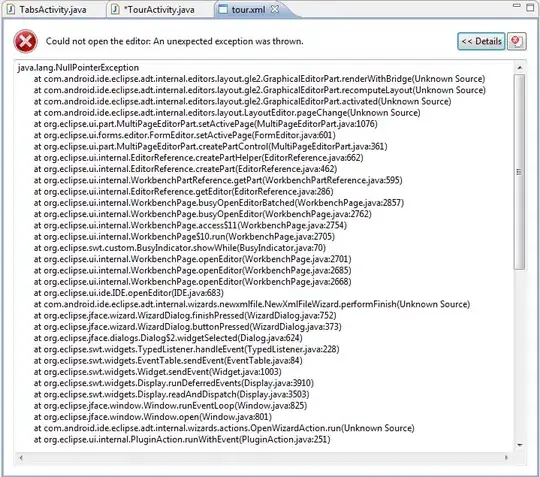

Which gets me the following image:

I've tried other combinations and have also written my own normalization and colorize functions, but I get the same result. At this point I'm not sure whether I'm doing something wrong, whether it's a limitation of the OpenCV window viewer, or whether it's something else.
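For reference, here is a minimal sketch of the kind of hand-written normalization step mentioned above. It assumes the depth file is a single-channel 16-bit image and that the range of interest is roughly 1440 to 1580 (the values from the snippet). The helper name `to_uint8` and the sample array are hypothetical. The sketch scales into 0..255 before the cast, because casting larger integer values straight to `np.uint8` wraps around modulo 256.

```python
import numpy as np

def to_uint8(depth, lo, hi):
    """Linearly map depth values in [lo, hi] to 0..255 (hypothetical helper)."""
    depth = depth.astype(np.float32)
    # Clip first so values outside the range saturate instead of wrapping.
    scaled = (np.clip(depth, lo, hi) - lo) / float(hi - lo)
    return np.round(scaled * 255.0).astype(np.uint8)

# Synthetic stand-in for the real depth image (assumed 16-bit, single channel).
sample = np.array([[1400, 1440], [1475, 1600]], dtype=np.uint16)

gray = to_uint8(sample, 1440, 1580)   # scaled into 0..255 before the cast
wrapped = sample.astype(np.uint8)     # direct cast, shown only for comparison
```

The resulting `gray` array could then be passed to `cv2.applyColorMap`. Which colormap the ifm software actually uses is not known here, so any choice (for example `cv2.COLORMAP_JET`) would be a guess.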

I've also uploaded the depth image file in case it's helpful: depth_image

Any help with this would be greatly appreciated.