The above may sound ideal, but I'm trying to predict one step ahead, i.e. with a look_back of 1. My code is as follows:

```python
import numpy as np
import matplotlib.pyplot as plt
from sklearn.preprocessing import MinMaxScaler
from keras.models import Sequential
from keras.layers import LSTM, Dense, Dropout


def create_scaled_datasets(data, scaler_transform, train_perc=0.9):
    # Set training size
    train_size = int(len(data) * train_perc)

    # Reshape to a single column for the scaler transform
    data = data.reshape((-1, 1))

    # Scale data to the scaler's feature_range, (0, 1) below
    # (the scaler is fit on train and test together)
    data_scaled = scaler_transform.fit_transform(data)

    # Split into train and test data, keeping time order. Train keeps one
    # extra point, so its last label is the first test value.
    train = data_scaled[0:train_size + 1, :]
    test = data_scaled[train_size:, :]

    return train, test


# Instantiate scaler transform
scaler = MinMaxScaler(feature_range=(0, 1))

# Stacked LSTMs; only the first layer needs input_shape (timesteps=1, features=1)
model = Sequential()
model.add(LSTM(5, input_shape=(1, 1), activation='tanh', return_sequences=True))
model.add(Dropout(0.1))
model.add(LSTM(12, activation='tanh', return_sequences=True))
model.add(Dropout(0.1))
model.add(LSTM(2, activation='tanh', return_sequences=False))
model.add(Dense(1))
model.compile(loss='mean_squared_error', optimizer='adam')

# Create train/test data sets (`data` is my NumPy array of raw values, not shown)
train, test = create_scaled_datasets(data, scaler)

# Labels are the training values shifted one step ahead (look_back of 1)
trainY = train[1:, 0]

# Reshape to (samples, timesteps, features) as the LSTM layers expect
train = np.reshape(train, (train.shape[0], 1, train.shape[1]))
plotting_test = test
test = np.reshape(test, (test.shape[0], 1, test.shape[1]))

# Input i is train[i]; its label is train[i + 1]
model.fit(train[:-1], trainY, epochs=150, verbose=0)

testPredict = model.predict(test)

# Green: predictions, red: actual (scaled) test values
plt.plot(testPredict, 'g')
plt.plot(plotting_test, 'r')
plt.show()
```

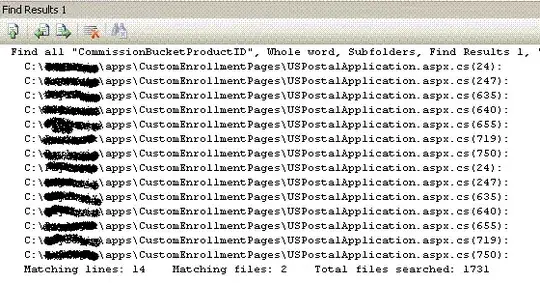

with output plot of:

In essence, I want the model to predict the next value. I try to do this by training on the actual values as the features, with the labels being the same values shifted forward by one step (a look_back of 1), and then predicting on the test data. As the plot shows, the model does a pretty good job, except that it doesn't seem to be predicting the future; instead it seems to be predicting the present. I would expect the plot to look similar, but with the green line (the predictions) shifted one point to the left.

I have tried increasing the look_back value, but the result is always the same, which makes me think I'm either training the model wrong or predicting incorrectly. If I'm misreading the plot and the model is in fact doing what I want (also very possible), how do I then predict further into the future?
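For illustration, here is a minimal NumPy sketch (not my actual code) of the same windowing generalised to any look_back, where each label is the value that comes right after its input window. The helper names `make_windows`, `persistence_mse` and `recursive_forecast` are hypothetical. The persistence baseline ("next value = last observed value") is an assumed sanity check: a model whose test error is close to it is probably just echoing its latest input. `recursive_forecast` shows one common approach, assumed here rather than taken from my code, for going several steps ahead by feeding each prediction back in as input.

```python
import numpy as np

# Hypothetical helpers for illustration; not part of the original code.


def make_windows(series, look_back=1):
    """Turn a 1-D series into one-step-ahead (X, y) pairs.

    X[i] holds series[i : i + look_back] and y[i] is series[i + look_back],
    so every label is the value that comes right after its input window.
    X is shaped (samples, timesteps, features), as Keras LSTM layers expect.
    """
    series = np.asarray(series, dtype=float).ravel()
    n = len(series) - look_back
    X = np.stack([series[i:i + look_back] for i in range(n)])
    y = series[look_back:]
    return X.reshape(n, look_back, 1), y


def persistence_mse(X, y):
    """MSE of the naive forecast 'next value = last value in the window'.

    A model with roughly this test MSE has likely learned to copy its latest
    input, which, plotted against the actual series, looks like it is
    "predicting the present".
    """
    return float(np.mean((X[:, -1, 0] - y) ** 2))


def recursive_forecast(predict_next, window, steps):
    """Forecast `steps` values ahead by feeding each prediction back in.

    `predict_next` maps an array of shape (1, look_back, 1) to one number.
    """
    window = list(np.asarray(window, dtype=float).ravel())
    look_back = len(window)
    forecasts = []
    for _ in range(steps):
        x = np.array(window[-look_back:]).reshape(1, look_back, 1)
        next_value = float(predict_next(x))
        forecasts.append(next_value)
        window.append(next_value)  # the prediction becomes the newest input
    return np.array(forecasts)


if __name__ == "__main__":
    series = np.arange(10, dtype=float)
    X, y = make_windows(series, look_back=3)
    print(X.shape, y.shape)        # (7, 3, 1) (7,)
    print(X[0, :, 0], y[0])        # [0. 1. 2.] 3.0
    print(persistence_mse(X, y))   # 1.0
    # Stand-in "model" (hypothetical) that adds 1 to the last input value
    print(recursive_forecast(lambda w: w[0, -1, 0] + 1, series[-3:], 3))
    # [10. 11. 12.]
```

With a trained Keras model, `predict_next` could be something like `lambda w: model.predict(w, verbose=0)[0, 0]`. Errors can compound as the horizon grows, since each step builds on earlier predictions rather than on observed values.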