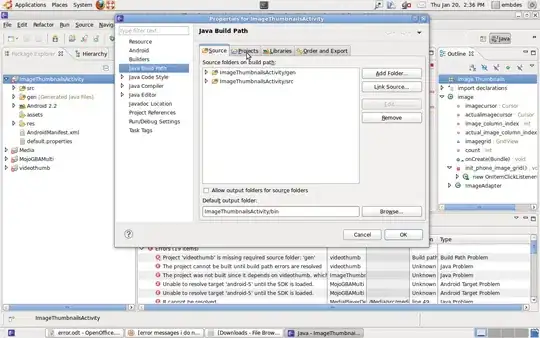

I have built a confusion matrix to evaluate the performance of an MLPClassifier model on a multi-class classification problem. The matrix should be 10x10, but on some runs of the whole Jupyter notebook I get 8x8 instead: one or two class labels are missing, as the confusion matrix heatmap shows. The true and predicted class labels run from 1 to 10 (unordered). Is this a bug in my code, or does it depend on which random samples end up in the test set when the data is split into train and test sets? How should I fix this? The code looks like this:

    from sklearn.metrics import confusion_matrix

    cm = confusion_matrix(y_test, y_pred)
    print(cm)
    str(cm)

    Out: [[20  0  0  1  0  5  1  0]
     [ 3  0  0  0  0  0  0  0]
     [ 1  1  0  1  0  1  0  0]
     [ 3  0  0  0  0  3  1  1]
     [ 0  0  0  0  0  1  0  0]
     [ 3  0  0  1  0  2  1  1]
     [ 3  0  0  0  0  0  0  2]
     [ 1  0  0  0  0  0  0  1]]

    '[[20  0  0  1  0  5  1  0]\n [ 3  0  0  0  0  0  0  0]\n [ 1  1  0  1  0  1  0  0]\n [ 3  0  0  0  0  3  1  1]\n [ 0  0  0  0  0  1  0  0]\n [ 3  0  0  1  0  2  1  1]\n [ 3  0  0  0  0  0  0  2]\n [ 1  0  0  0  0  0  0  1]]'
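The 8x8 shape is consistent with how `confusion_matrix` behaves when no `labels` argument is given: it builds the matrix from the sorted set of labels that actually occur in `y_test` or `y_pred`, so a class that appears in neither gets no row or column. A minimal sketch of how to check which labels are missing, assuming hypothetical toy data in place of the real `y_test` and `y_pred`:

```python
import numpy as np
from sklearn.metrics import confusion_matrix

# Hypothetical toy data: classes 1..10 are possible, but 9 and 10 occur
# neither in the true labels nor in the predictions of this split.
y_test = np.array([1, 2, 3, 4, 5, 6, 7, 8, 1, 1])
y_pred = np.array([1, 1, 3, 4, 6, 6, 7, 8, 1, 2])

# Without `labels`, the matrix only covers labels seen in either array.
cm = confusion_matrix(y_test, y_pred)

# Sorted union of both arrays: these are the rows/columns of `cm`.
seen_labels = np.union1d(y_test, y_pred)

# Expected classes that never show up in this split.
missing_labels = np.setdiff1d(np.arange(1, 11), seen_labels)

print(cm.shape)
print("seen:", seen_labels)
print("missing:", missing_labels)
```

If `missing_labels` changes from run to run, the varying shape most likely comes from the random train/test split rather than from a bug in the matrix code itself.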

    import matplotlib.pyplot as plt
    import seaborn as sns

    side_bar = [1,2,3,4,5,6,7,8,9,10]

    f, ax = plt.subplots(figsize=(12,12))
    sns.heatmap(cm, annot=True, linewidth=.5, linecolor="r", fmt=".0f", ax=ax)
    ax.set_xticklabels(side_bar)
    ax.set_yticklabels(side_bar)
    plt.xlabel('Predicted Label')
    plt.ylabel('True Label')
    plt.show()
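One possible fix is to pass the full list of classes through the `labels` parameter of `confusion_matrix`, which fixes the matrix at 10x10 and fills all-zero rows and columns for classes that are absent from a given split. A minimal sketch, again with hypothetical toy data standing in for the real `y_test` and `y_pred`, and `all_labels` as an illustrative name:

```python
import numpy as np
from sklearn.metrics import confusion_matrix

# Every class the model can produce, in the order the axes should use.
all_labels = list(range(1, 11))

# Hypothetical toy data in which classes 9 and 10 never occur.
y_test = np.array([1, 2, 3, 4, 5, 6, 7, 8, 1, 1])
y_pred = np.array([1, 1, 3, 4, 6, 6, 7, 8, 1, 2])

# `labels` pins both the size and the row/column order of the matrix.
cm = confusion_matrix(y_test, y_pred, labels=all_labels)

print(cm.shape)
print(cm)
```

The same list can then be handed to `sns.heatmap` through its `xticklabels` and `yticklabels` arguments, so the ticks always match the rows and columns instead of assigning ten labels to eight ticks. If the cause is a class dropping out of the test set, passing `stratify=y` to `train_test_split` (with `y` being the full label array) may also help, assuming every class has at least a couple of samples.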