When uploading files, if you choose to use JSON, you are putting the client in a pickle: you force it to Base64-encode every file. That can be a cumbersome task, especially for browser JS code. Not to mention that you increase your payload by about 33%, because Base64 turns every 3 bytes into 4 characters.
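To put numbers on that 33%: Base64 maps every 3 input bytes to 4 output characters and pads the last partial group, so an n-byte file becomes 4 * ceil(n / 3) characters. The helper below is only an illustrative sketch (`Base64Overhead` and its methods are hypothetical names), and its formula can be cross-checked against `Convert.ToBase64String`:

```csharp
using System;

// Hypothetical helper for this example: computes how large Base64 output gets.
public static class Base64Overhead
{
    // Each 3-byte group becomes 4 characters and the final partial group is padded,
    // so the output length is 4 * ceil(n / 3). (n + 2) / 3 is ceil(n / 3) in integer math.
    public static long EncodedLength(long byteCount)
    {
        if (byteCount < 0) throw new ArgumentOutOfRangeException(nameof(byteCount));
        return 4 * ((byteCount + 2) / 3);
    }

    // Extra characters sent relative to the raw file size (about 1/3 for large files).
    public static double Overhead(long byteCount) =>
        byteCount == 0 ? 0.0 : (double)(EncodedLength(byteCount) - byteCount) / byteCount;
}
```

For example, `EncodedLength(300L * 1024 * 1024)` is exactly 400 MiB, which is where the 300 MB vs. 400 MB figures in the JSON scenario below come from.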

Form-Data?

When posting files, you want to use HTML forms (multipart/form-data). This is something browsers are very good at.

With that said, the proper way to do this is to set up your endpoint like this:

```csharp
using System.Collections.Generic;
using Microsoft.AspNetCore.Http;
using Microsoft.AspNetCore.Mvc;

// NOTE: This is the same as you have it in your question
public class TestModel
{
    public IList<IFormFile> File { get; set; }

    public string Name { get; set; }

    public int Age { get; set; }

    public string Hobbies { get; set; }
}

// Inside your controller:
[HttpPost, Route("testme")]
public IActionResult TestMe([FromForm] TestModel testModel)
{
    return Ok();
}
```

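Take note of the [FromForm] attribute on the parameter. It tells ASP.NET Core to bind TestModel from form data, including the uploaded files, rather than from a JSON body.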

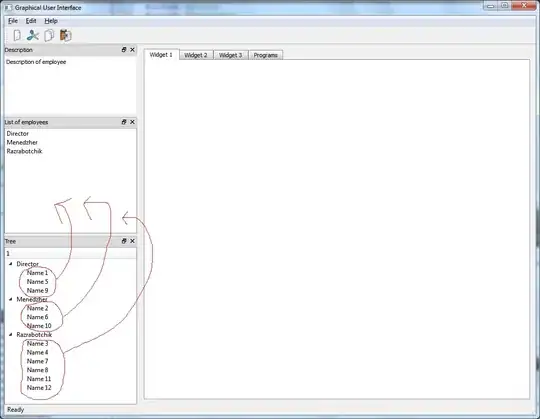

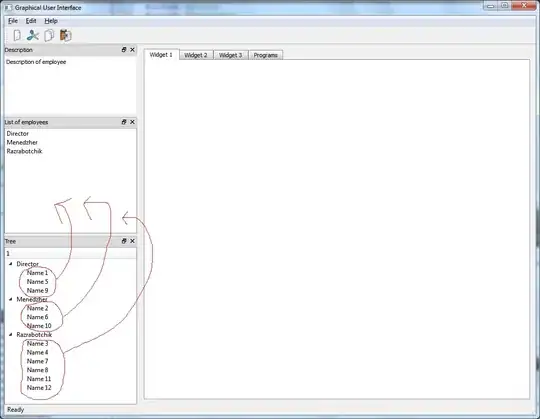

Then set up Postman like this:

- Set the method to POST.

- Go to the Body section and set it to form-data.

- Add the parameters. For file parameters, switch the key's type from Text to File. You can test multiple files by adding the same key (File) multiple times.

- Click Send.
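If you'd rather exercise the endpoint from code than from Postman, the same multipart/form-data request can be built with `HttpClient`'s `MultipartFormDataContent`. This is a sketch: `TestMeRequest` and `Build` are hypothetical names, and the field names simply mirror the TestModel properties. Every file part reuses the key `File`, just like adding the same parameter several times in Postman.

```csharp
using System.Collections.Generic;
using System.Globalization;
using System.IO;
using System.Net.Http;

// Hypothetical helper: builds the same multipart/form-data body that Postman
// (or a browser <form>) sends to the "testme" endpoint.
public static class TestMeRequest
{
    public static MultipartFormDataContent Build(
        IEnumerable<(string FileName, Stream Content)> files,
        string name, int age, string hobbies)
    {
        var form = new MultipartFormDataContent();

        // One part per file, all under the key "File", so model binding can
        // collect them into TestModel.File (IList<IFormFile>).
        foreach (var (fileName, content) in files)
        {
            form.Add(new StreamContent(content), "File", fileName);
        }

        // Plain text fields bind to the remaining TestModel properties.
        form.Add(new StringContent(name), "Name");
        form.Add(new StringContent(age.ToString(CultureInfo.InvariantCulture)), "Age");
        form.Add(new StringContent(hobbies), "Hobbies");
        return form;
    }
}
```

Sending it is then something like `await client.PostAsync(url, form)`, where `url` is whatever address your app exposes the testme route on (assumed here, not taken from the question). Because `StreamContent` wraps the file streams, `HttpClient` can send them without first loading them into one big string.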

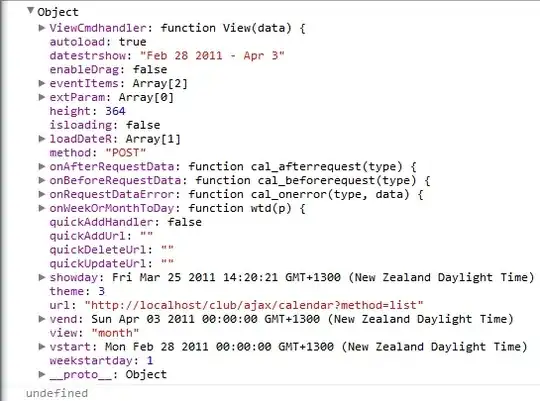

When the endpoint gets hit, it should look like this:

JSON?

Let's say you absolutely must use JSON as the post data. Then your model will look like this:

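```csharp
using System.Collections.Generic;

public class TestModel
{
    // Each file arrives as a Base64 string in the JSON and is decoded into a byte[]
    public IList<byte[]> File { get; set; }

    public string Name { get; set; }

    public int Age { get; set; }

    public string Hobbies { get; set; }
}
```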

You would change the parameter attribute from [FromForm] to [FromBody]. Then you would have to convince your front-end devs that they must Base64-encode every file and build a JSON object to send. I don't think you will be very successful with that, since it's neither efficient nor the norm. They may give you strange looks.
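To see what the front end would be signing up for, here is a sketch that serializes the JSON version of TestModel with System.Text.Json (`JsonPayloadDemo` is a hypothetical helper). A `byte[]` is written as a Base64 string, so a file containing the bytes 1, 2, 3 shows up as `"AQID"`:

```csharp
using System.Collections.Generic;
using System.Text.Json;

// JSON variant of the model: files travel as byte[].
public class TestModel
{
    public IList<byte[]> File { get; set; }
    public string Name { get; set; }
    public int Age { get; set; }
    public string Hobbies { get; set; }
}

// Hypothetical helper showing the wire format a [FromBody] TestModel expects.
public static class JsonPayloadDemo
{
    // System.Text.Json writes each byte[] as a Base64 string...
    public static string Serialize(TestModel model) => JsonSerializer.Serialize(model);

    // ...and decodes Base64 strings back into byte[] when reading.
    public static TestModel Deserialize(string json) => JsonSerializer.Deserialize<TestModel>(json);
}
```

ASP.NET Core's MVC JSON settings use camelCase names and case-insensitive matching by default, so property names in a real request may differ from this output, but each `byte[]` is still one Base64 string that has to be built in the browser and decoded on the server.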

Let's run through the scenario:

- User selects 3 files, 100 MB each.

- User clicks "submit" and JS reads 300 MB into memory.

- JS encodes the 300 MB of files to Base64 in the browser, producing roughly 400 MB of text that sits in memory alongside the original 300 MB while encoding.

- JS client builds the JSON payload. Memory increases again, as a new string has to be created from those 400 MB of Base64 strings.

- Network traffic starts.

- Backend gets the post data. Since it's JSON, ASP.NET Core will deserialize it to your model. The framework will have to allocate roughly 400 MB of memory to read in the JSON from the network.

- ASP.NET deserializes it, which also means decoding 400 MB of Base64 back into 300 MB of bytes. That isn't a light task.

- Your endpoint gets invoked with a ~300 MB model in memory.

You will run into an OutOfMemoryException at some point, especially with several uploads in flight at once. It's almost guaranteed.

Form data is handled very efficiently in ASP.NET Core. Small files are buffered in memory, while larger ones are buffered to a temporary file on disk. That makes memory issues far less likely than with JSON.

Let's run through a form scenario:

- User selects 3 files, 100 MB each.

- User clicks "submit". The browser starts a request to the back end, reads each file in small chunks, and sends it up chunk by chunk, one file at a time. The browser and network stack pick the chunk size, and it won't exceed what is needed to transfer the data efficiently, so memory usage stays minimal.

- ASP.NET starts reading the data. If an IFormFile's data exceeds the buffering threshold (64 KB by default, configurable through FormOptions.MemoryBufferThreshold), it is moved from an in-memory stream to a disk stream.

- ASP.NET invokes your endpoint with IFormFile objects whose streams are backed by those files on disk. The size of your model in memory is irrelevant, since it only holds references to disk streams.
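The chunk-by-chunk idea in the steps above can be illustrated with plain streams. This is not what the browser or Kestrel literally runs; it is a minimal sketch (`ChunkedCopy` is a hypothetical name, and the 80 KB default buffer is an arbitrary choice) showing that memory use is bounded by the buffer, not by the file size:

```csharp
using System;
using System.IO;

// Hypothetical helper: copies a stream through one fixed-size buffer,
// so memory use stays at bufferSize no matter how large the source is.
public static class ChunkedCopy
{
    public static (long TotalBytes, int LargestChunk) Copy(
        Stream source, Stream destination, int bufferSize = 80 * 1024)
    {
        if (bufferSize <= 0) throw new ArgumentOutOfRangeException(nameof(bufferSize));

        var buffer = new byte[bufferSize]; // the only buffer allocated for the whole copy
        long total = 0;
        int largest = 0;
        int read;

        // Read a chunk, forward it, repeat until the source is exhausted.
        while ((read = source.Read(buffer, 0, buffer.Length)) > 0)
        {
            destination.Write(buffer, 0, read);
            total += read;
            largest = Math.Max(largest, read);
        }

        return (total, largest);
    }
}
```

Inside TestMe you normally don't write this loop yourself: `IFormFile.CopyToAsync(targetStream)` copies each uploaded file to a target stream for you.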

Postman? There isn't a way to do this in Postman without scripting something, again because it's not a normal thing to do. You may find something if you search for it, but it will be kludgy at best.