this is yet another SSE is slower than normal code! Why? type of question.

I know that there are a bunch of similar questions but they don't seem to match my situation.

I am trying to implement Miller-Rabin primality test with Montgomery Modular Multiplication for fast modulo operations.

I tried to implement it in both scalar and SIMD way and it turns out that the SIMD version was around 10% slower.

that [esp+16] or [esp+12] is pointing to the modulo inverse of N if there's anyone wondering.

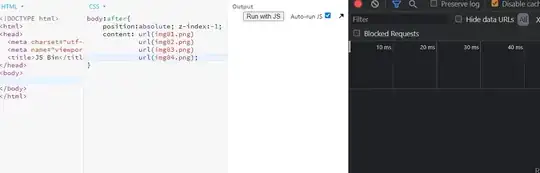

I am really puzzled over the fact that a supposedly 1 Latency 1c Throughput 1uops instruction psrldq takes more than 3 Latency 0.5c Throughput 1uops pmuludq.

Below is the code and the run time analysis on visual studio ran on Ryzen 5 3600.

Any idea on how to improve SIMD code and/or why is it slower than a scalar code is appreciated.

P.S. Seems like the run time analysis is off by one instruction for some reason

EDIT 1: the comment on the image was wrong, I attached a fixed version below:

;------------------------------------------

; Calculate base^d mod x

;

; eax = 1

; esi = x

; edi = bases[eax]

; ebp = d

; while d do

; if d & 1 then eax = (eax * edi) mod x

; edi = (edi*edi) mod x

; d >>= 1

; end

;------------------------------------------

Scalar code:

LOOP_MODEXP:

push eax

test ebp, 1

jz @F

mul edi

mov ecx, edx

imul eax, DWORD PTR [esp+16]

mul esi

xor ebx, ebx

sub ecx, edx

cmovs ebx, esi

add ecx, ebx

mov DWORD PTR [esp], ecx

@@:

mov edx, edi

mulx ecx, edx, edi

imul edx, DWORD PTR [esp+16]

mulx eax, ebx, esi

xor ebx, ebx

sub ecx, eax

cmovs ebx, esi

add ecx, ebx

mov edi, ecx

pop eax

shr ebp, 1

jnz LOOP_MODEXP

SIMD code

movd xmm2, DWORD PTR [esp+12]

movd xmm3, esi

pshufd xmm2, xmm2, 0

pshufd xmm3, xmm3, 0

movd xmm1, edi

pshufd xmm1, xmm1, 0

movdqa xmm0, xmm1

pinsrd xmm0, eax, 2

LOOP_MODEXP:

movdqa xmm4, xmm0

pmuludq xmm0, xmm1

movdqa xmm1, xmm0

pmuludq xmm0, xmm2

pmuludq xmm0, xmm3

psubd xmm1, xmm0

psrldq xmm1, 4

pxor xmm0, xmm0

pcmpgtd xmm0, xmm1

blendvps xmm0, xmm3, xmm0

paddd xmm0, xmm1

movddup xmm1, xmm0

test ebp, 1

jnz @F

blendps xmm0, xmm4, 4

@@:

shr ebp, 1

jnz LOOP_MODEXP

pextrd eax, xmm0, 2