I am trying to partition my data in PySpark by a specific ID.

from pyspark.sql import HiveContext

mysqlContext = HiveContext(sc)

query_1 = f"SELECT /*+ COALESCE(100) */ {fields_Str} FROM database_customer.customer_table WHERE customer_id IN ({customer_id_Str})"

df_1 = mysqlContext.sql(query_1)

Here fields_Str contains the selected fields, and customer_id_Str contains 500 unique customer IDs.

When I check the number of partitions with this line of code:

df_1.rdd.getNumPartitions()

I get 100, which is what I expect. But when I run the following code:

df_1 = df_1.repartition(500,"customer_id")

df_1.show(30,truncate=False)

it takes about 30 minutes to process, and the jobs page shows the following:

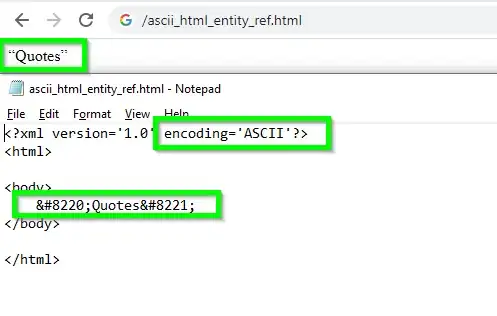

It shows 6020 jobs running, and the whole thing takes 30 minutes.

Even after the repartition is done, when I rerun df_1.show(30,truncate=False) it takes the same amount of time.

Any idea why?