Based on the guide Implementing PCA in Python by Sebastian Raschka, I am building the PCA algorithm from scratch for my research. The class definition is:

import numpy as np

class PCA(object):
    """Dimension Reduction using Principal Component Analysis (PCA)

    It is the process of computing principal components which explain the
    maximum variation of the dataset using fewer components.

    :type  n_components: int, optional
    :param n_components: Number of components to consider, if not set then
                         `n_components = min(n_samples, n_features)`, where
                         `n_samples` is the number of samples, and
                         `n_features` is the number of features (i.e.,
                         dimension of the dataset).

    Attributes
    ==========
        :type  covariance_: np.ndarray
        :param covariance_: Covariance Matrix

        :type  eig_vals_: np.ndarray
        :param eig_vals_: Calculated Eigen Values

        :type  eig_vecs_: np.ndarray
        :param eig_vecs_: Calculated Eigen Vectors

        :type  explained_variance_: np.ndarray
        :param explained_variance_: Explained Variance of Each Principal Component

        :type  cum_explained_variance_: np.ndarray
        :param cum_explained_variance_: Cumulative Explained Variance
    """

    def __init__(self, n_components : int = None):
        """Default Constructor for Initialization"""
        self.n_components = n_components

    def fit_transform(self, X : np.ndarray):
        """Fit the PCA algorithm into the Dataset"""
        if not self.n_components:
            self.n_components = min(X.shape)

        self.covariance_ = np.cov(X.T)

        # calculate eigens
        self.eig_vals_, self.eig_vecs_ = np.linalg.eig(self.covariance_)

        # explained variance
        _tot_eig_vals = sum(self.eig_vals_)
        self.explained_variance_ = np.array([(i / _tot_eig_vals) * 100 for i in sorted(self.eig_vals_, reverse = True)])
        self.cum_explained_variance_ = np.cumsum(self.explained_variance_)

        # define `W` as `d x k`-dimension
        self.W_ = self.eig_vecs_[:, :self.n_components]

        print(X.shape, self.W_.shape)
        return X.dot(self.W_)
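One thing worth checking in the class above: `np.linalg.eig` does not promise any particular ordering of the eigenvalues, and the class sorts the eigenvalues for the explained variance while slicing the eigenvectors in their original order. The snippet below is a minimal sketch of keeping the two in step. The helper name `sorted_eigenpairs` and the toy covariance matrix are made up for illustration and are not part of the original class.

```python
import numpy as np

def sorted_eigenpairs(cov):
    """Hypothetical helper: eigenvalues in descending order,
    with the eigenvector columns reordered to match."""
    eig_vals, eig_vecs = np.linalg.eig(cov)
    order = np.argsort(eig_vals)[::-1]        # indices of largest eigenvalue first
    return eig_vals[order], eig_vecs[:, order]

# Toy symmetric matrix whose eigenvalues are deliberately out of order.
cov = np.diag([0.5, 3.0, 1.0])
vals, vecs = sorted_eigenpairs(cov)

# Explained variance (in percent) now lines up column by column with `vecs`.
explained = vals / vals.sum() * 100
print(vals)
print(explained)
```

With the pairs sorted this way, `vecs[:, :k]` selects the `k` directions of largest variance, which is what the `W_` slice is presumably meant to do.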

Taking the iris dataset as a test case, PCA is performed and visualized as follows:

import numpy as np

import pandas as pd

import seaborn as sns

import matplotlib.pyplot as plt

# loading iris data, and normalize

from sklearn.datasets import load_iris

iris = load_iris()

from sklearn.preprocessing import MinMaxScaler

X, y = iris.data, iris.target

X = MinMaxScaler().fit_transform(X)

# using the PCA function (defined above)

# to fit_transform the X value

# naming the PCA object as dPCA (d = defined)

dPCA = PCA()

principalComponents = dPCA.fit_transform(X)

# creating a pandas dataframe for the principal components

# and visualize the data using scatter plot

PCAResult = pd.DataFrame(principalComponents, columns = [f"PCA-{i}" for i in range(1, dPCA.n_components + 1)])

PCAResult["target"] = y # possible as original order does not change

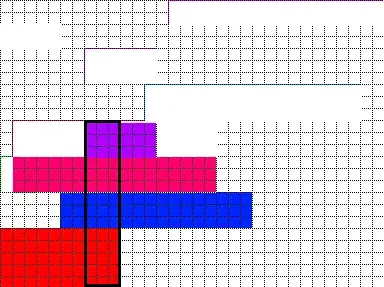

sns.scatterplot(x = "PCA-1", y = "PCA-2", data = PCAResult, hue = "target", s = 50)

plt.show()

Now, I wanted to verify the output, so I ran the same data through the sklearn library as follows:

from sklearn.decomposition import PCA # note the same name

sPCA = PCA() # consider all the components
principalComponents_ = sPCA.fit_transform(X)

PCAResult_ = pd.DataFrame(principalComponents_, columns = [f"PCA-{i}" for i in range(1, 5)])
PCAResult_["target"] = y # possible as original order does not change

sns.scatterplot(x = "PCA-1", y = "PCA-2", data = PCAResult_, hue = "target", s = 50)
plt.show()

I don't understand why the output is oriented differently, with slightly different values. I studied several implementations [1, 2, 3], all of which have the same issue. My questions:

- What is different in sklearn, that the plot is different? I've tried with a different dataset too, with the same problem.
- Is there a way to fix this issue?
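One way to narrow this down is to test two usual suspects in isolation. This is a rough sketch under assumptions, not a description of sklearn's internals: it assumes the reference implementation mean-centers the data and obtains the components from an SVD, and it relies on the fact that each eigenvector is only defined up to sign. The helpers `pca_eig`, `pca_svd` and `align_signs` and the random data are hypothetical and use NumPy only.

```python
import numpy as np

def pca_eig(X, center=True):
    """Hypothetical eigendecomposition-based PCA; optionally centers X first."""
    X = np.asarray(X, dtype=float)
    if center:
        X = X - X.mean(axis=0)
    cov = np.cov(X, rowvar=False)
    eig_vals, eig_vecs = np.linalg.eigh(cov)   # eigh: symmetric input, ascending eigenvalues
    order = np.argsort(eig_vals)[::-1]         # reorder to descending
    eig_vals, W = eig_vals[order], eig_vecs[:, order]
    return X @ W, W, eig_vals

def pca_svd(X):
    """Hypothetical SVD-based PCA on mean-centered data."""
    X = np.asarray(X, dtype=float)
    Xc = X - X.mean(axis=0)
    _, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ Vt.T, S ** 2 / (X.shape[0] - 1)

def align_signs(A, B):
    """Flip columns of A so each points the same way as the matching column of B."""
    signs = np.sign(np.sum(A * B, axis=0))
    signs[signs == 0] = 1.0
    return A * signs

# Made-up data with distinct variances and a non-zero mean.
rng = np.random.default_rng(0)
X = rng.normal(size=(150, 4)) * np.array([3.0, 2.0, 1.0, 0.5]) + np.array([5.0, 3.0, 1.0, 0.2])

Z_eig, _, var_eig = pca_eig(X)
Z_svd, var_svd = pca_svd(X)
Z_aligned = align_signs(Z_eig, Z_svd)
print(np.allclose(Z_aligned, Z_svd), np.allclose(var_eig, var_svd))

# Projecting uncentered data shifts every row by the same vector, mean @ W.
Z_raw, W_raw, _ = pca_eig(X, center=False)
offset = Z_raw - (X - X.mean(axis=0)) @ W_raw
print(np.allclose(offset, X.mean(axis=0) @ W_raw))
```

If the two plots in the question differ only by a mirror flip along an axis and a constant shift, these two effects would be consistent with that, though this has not been confirmed against the sklearn source.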

I was not able to study the sklearn.decomposition.PCA implementation, as I am new to OOP concepts in Python.

The output in the blog post by Sebastian Raschka also differs slightly. Figure below: