Our Kafka topic has very few partitions, which makes reading from it slow. We would like to increase the read parallelism in Flink without changing the number of Kafka partitions.

Our situation is like case 2 in this answer. We want both task 1 and task 2 to have work to do without changing the number of partitions in broker 1. We also want the parallelism to apply to the read itself, not to downstream operators, so calling .rebalance() after the source won't help us much.

In Spark Structured Streaming, we can use the minPartitions option (see https://spark.apache.org/docs/latest/structured-streaming-kafka-integration.html). When it is set higher than the number of Kafka topic partitions, Spark splits each partition's offset range into smaller sub-ranges and reads those sub-ranges in parallel, so more Spark tasks read from Kafka than there are Kafka partitions.

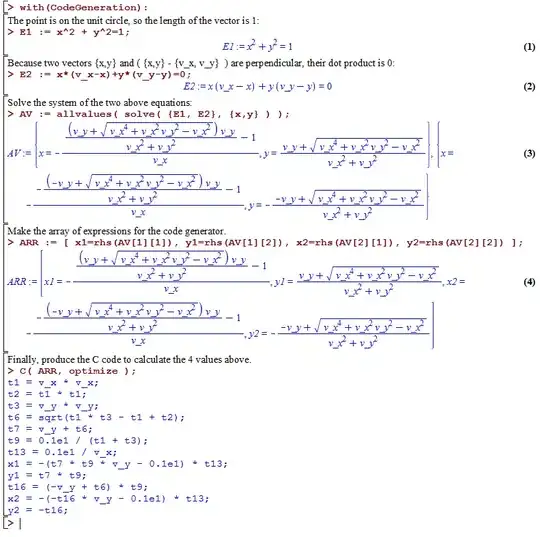
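
To make the idea concrete, here is a minimal sketch of the kind of offset-range splitting we have in mind. It is only an illustration of the concept, not Spark's actual implementation; the function name `split_offset_range` and its parameters are hypothetical.

```python
from typing import List, Tuple


def split_offset_range(start: int, end: int, parts: int) -> List[Tuple[int, int]]:
    """Split the half-open offset range [start, end) of ONE Kafka partition
    into at most `parts` contiguous sub-ranges of near-equal size.

    Hypothetical helper for illustration only.
    """
    if parts < 1:
        raise ValueError("parts must be >= 1")
    total = end - start
    if total <= 0:
        return []  # nothing to read
    parts = min(parts, total)  # never create empty sub-ranges
    base, extra = divmod(total, parts)
    ranges = []
    lo = start
    for i in range(parts):
        # The first `extra` sub-ranges take one additional offset each.
        hi = lo + base + (1 if i < extra else 0)
        ranges.append((lo, hi))
        lo = hi
    return ranges


# One Kafka partition holding offsets 0..9, read by 3 parallel readers.
print(split_offset_range(0, 10, 3))
```

Each resulting `(start, end)` pair would then be handed to a separate reader, which is what we would like each Flink source subtask to do.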

In Flink, we didn't find a way to set an end offset for the legacy FlinkKafkaConsumer. The new KafkaSource has a setBounded() method that can set an end offset, but it doesn't let several subtasks read sub-ranges of the same partition in parallel.

Is something like minPartitions possible in Flink? That is, can we increase read parallelism beyond the number of Kafka partitions by splitting each partition's offset range?