I am working on some programming problems where I have to transition probabilities between standard space and log-space. For this purpose, I am trying to find out the maximum absolute error for the floating-point error in R for the computation log(exp(...)) where the input is a log-probability (i.e., a non-positive number).

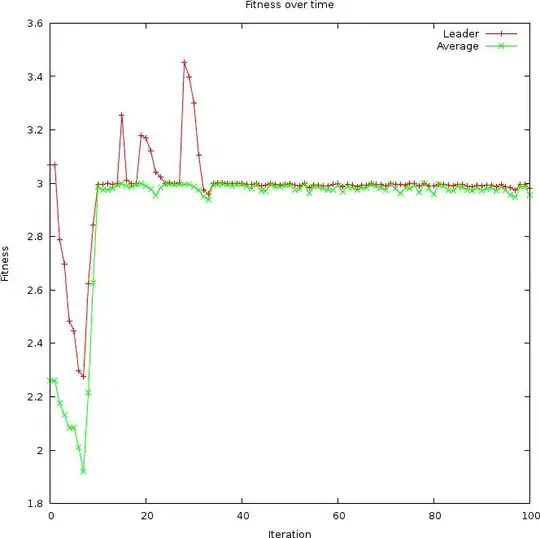

At the moment I have computed the answer by using a grid search (see code and plot below), but I'm not sure if the value I've computed is correct. (I checked some other ranges but the range shown in the plot seems to get the maximum absolute error.)

#Set function for computing floating-point error of log(exp(...))

fp.error <- function(x) { abs(log(exp(x)) - x) }

#Compute and plot floating-point error over a grid of non-negative values

xx <- -(0:20000/10000)

ff <- fp.error(xx)

plot(xx, ff, col = '#0000FF10',

main = 'Error in computation of log(exp(...))',

xlab = 'x', ylab = 'Floating-Point Error')

#Compute maximum floating-point error

fp.error.max <- max(ff)

fp.error.max

[1] 1.110223e-16

From this analysis, my estimated maximum absolute error is half the size of .Machine$double.eps (which is 2.220446e-16). I am not sure if there is a theoretical reason for this, or if I am getting the wrong answer.

Question: Is there any way to determine if this is really the maximum floating-point error for this computation? Is there any theoretical way to compute the maximum, or is this kind of grid-search method sufficient?