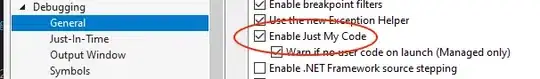

I'm working on extracting text from images similar to the one above: warehouse boxes with all kinds of different labels, often photographed at poor angles.

My code:

```python
import cv2
import pytesseract

im = cv2.imread('1.jpg')

config = '-l eng --oem 1 --psm 3'

text = pytesseract.image_to_string(im, config=config)

text_list = text.split('\n')

# remove blanks of varying sizes so that only words are returned
space_to_empty = [x.strip() for x in text_list]
space_clean_list = [x for x in space_to_empty if x]

print(space_clean_list)
```

For example, the image above returns

```
['L2 Sy', "////’7/'7///////////////"]
```

on all variations of `--oem` and `--psm` values.
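One way to compare the variations systematically is to sweep the page segmentation modes and collect the cleaned output of each run side by side. A minimal sketch, assuming `pytesseract` and a Tesseract binary are installed; `sweep_psm` and `clean_lines` are hypothetical helper names, and the chosen `--psm` values are only an illustration (11 is Tesseract's "sparse text" mode, which may suit scattered labels). The `ocr` parameter exists only so the OCR call can be swapped out.

```python
def clean_lines(text):
    """Split OCR output into lines, dropping blank or whitespace-only ones."""
    return [line.strip() for line in text.splitlines() if line.strip()]


def sweep_psm(image, psm_values=(3, 4, 6, 11), oem=1, ocr=None):
    """Hypothetical helper: run OCR once per --psm value, return {psm: lines}.

    `ocr` defaults to pytesseract.image_to_string; it is a parameter only so
    the OCR call can be replaced (e.g. to test without Tesseract installed).
    """
    if ocr is None:
        import pytesseract  # lazy import: only needed for real OCR runs
        ocr = pytesseract.image_to_string
    results = {}
    for psm in psm_values:
        config = f"-l eng --oem {oem} --psm {psm}"
        results[psm] = clean_lines(ocr(image, config=config))
    return results
```

Calling `sweep_psm(cv2.imread('1.jpg'))` returns a dict mapping each `--psm` value to its cleaned lines, which makes identical outputs across modes easy to spot.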

Applying perspective correction to the image gives a slightly better (though still poor) output:

```
['R19 159 942 sEMY', 'V/ ////////////////////I////I/////////////']
```

again on all variations of `--oem` and `--psm` values.

My questions are:

- Why does Tesseract perform so badly on images like this, with poor perspective, when alternatives such as Vision API and PaddleOCR extract the text fairly well? Can this be corrected by fine-tuning Tesseract, or is it a weak point of Tesseract that has to be addressed with preprocessing (blurring, thresholding, etc.)? If it is the latter, the alternatives above seem better, since they do not require such preprocessing.

- Despite changing the values for `--oem` and `--psm` as shown here, the output stays the same. Is this expected?