I am running a Spring boot Java application using default HikariCP as data source:

- Hikari version - 3.4.5

- Spring Boot version - 2.4.5 running in AWS EKS

- JDBI version - 3.9.1

- DB - AWS RDS Postgres

My Spring application becomes unresponsive as it loses all the connections in the Hikari Pool. This does not happen deterministically, but once it starts happening, the only recovery possible is through restart.

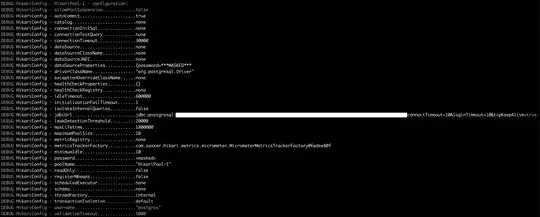

Hikari Config:

The pool keeps losing the connections and fails to connect back, leaving total=0 connections.

06-23 08:14:33.242 [HikariPool-1 housekeeper] DEBUG HikariPool - HikariPool-1 - Pool stats (total=10, active=1, idle=9, waiting=0)

06-23 08:15:07.189 [HikariPool-1 housekeeper] DEBUG HikariPool - HikariPool-1 - Pool stats (total=10, active=1, idle=9, waiting=0)

06-23 08:15:07.190 [HikariPool-1 housekeeper] DEBUG HikariPool - HikariPool-1 - Fill pool skipped, pool is at sufficient level.

06-23 08:15:32.785 [HikariPool-1 connection closer] DEBUG PoolBase - HikariPool-1 - Closing connection org.postgresql.jdbc.PgConnection@58f866df: (connection was evicted)

06-23 08:15:41.278 [HikariPool-1 housekeeper] DEBUG HikariPool - HikariPool-1 - Pool stats (total=9, active=1, idle=8, waiting=0)

06-23 08:15:49.809 [HikariPool-1 connection adder] DEBUG PoolBase - HikariPool-1 - Failed to create/setup connection: Connection attempt timed out.

06-23 08:15:49.809 [HikariPool-1 connection adder] DEBUG HikariPool - HikariPool-1 - Cannot acquire connection from data source

org.postgresql.util.PSQLException: Connection attempt timed out.

at org.postgresql.Driver$ConnectThread.getResult(Driver.java:422)

at org.postgresql.Driver.connect(Driver.java:267)

at com.zaxxer.hikari.util.DriverDataSource.getConnection(DriverDataSource.java:138)

at com.zaxxer.hikari.pool.PoolBase.newConnection(PoolBase.java:358)

at com.zaxxer.hikari.pool.PoolBase.newPoolEntry(PoolBase.java:206)

at com.zaxxer.hikari.pool.HikariPool.createPoolEntry(HikariPool.java:477)

at com.zaxxer.hikari.pool.HikariPool.access$100(HikariPool.java:71)

at com.zaxxer.hikari.pool.HikariPool$PoolEntryCreator.call(HikariPool.java:725)

at com.zaxxer.hikari.pool.HikariPool$PoolEntryCreator.call(HikariPool.java:711)

at java.base/java.util.concurrent.FutureTask.run(FutureTask.java:264)

at java.base/java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1128)

at java.base/java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:628)

at java.base/java.lang.Thread.run(Thread.java:829)

06-23 08:15:49.810 [HikariPool-1 connection adder] DEBUG HikariPool - HikariPool-1 - Connection add failed, sleeping with backoff: 250ms

06-23 08:15:49.816 [HikariPool-1 connection closer] DEBUG PoolBase - HikariPool-1 - Closing connection org.postgresql.jdbc.PgConnection@7303422e: (connection was evicted)

(After more of these)

06-23 10:23:21.731 [ForkJoinPool-1-worker-7] DEBUG HikariPool - HikariPool-1 - Add connection elided, waiting 2, queue 10

06-23 10:23:55.883 [ForkJoinPool-1-worker-7] DEBUG HikariPool - HikariPool-1 - Timeout failure stats (total=0, active=0, idle=0, waiting=0)

06-23 10:23:55.883 [pool-3-thread-4] DEBUG HikariPool - HikariPool-1 - Timeout failure stats (total=0, active=0, idle=0, waiting=0)

06-23 10:23:55.883 [HikariPool-1 housekeeper] DEBUG HikariPool - HikariPool-1 - Pool stats (total=0, active=0, idle=0, waiting=0)

06-23 10:23:55.883 [HikariPool-1 housekeeper] DEBUG HikariPool - HikariPool-1 - Fill pool skipped, pool is at sufficient level.

FWIW, I've found the logs for leak detection, but they are followed by unleaked logs. The leak threshold is set to 20 seconds.

06-23 08:07:04.506 [HikariPool-1 housekeeper] WARN ProxyLeakTask - Connection leak detection triggered for org.postgresql.jdbc.PgConnection@58f866df on thread ForkJoinPool-1-worker-1, stack trace follows

java.lang.Exception: Apparent connection leak detected

at com.zaxxer.hikari.HikariDataSource.getConnection(HikariDataSource.java:128)

at org.springframework.jdbc.datasource.DataSourceUtils.fetchConnection(DataSourceUtils.java:158)

at org.springframework.jdbc.datasource.DataSourceUtils.doGetConnection(DataSourceUtils.java:116)

at org.springframework.jdbc.datasource.TransactionAwareDataSourceProxy$TransactionAwareInvocationHandler.invoke(TransactionAwareDataSourceProxy.java:223)

at com.sun.proxy.$Proxy119.getAutoCommit(Unknown Source)

at org.jdbi.v3.core.transaction.LocalTransactionHandler.isInTransaction(LocalTransactionHandler.java:118)

at org.jdbi.v3.core.Handle.(Handle.java:76)

at org.jdbi.v3.core.Jdbi.open(Jdbi.java:309)

(Application specific stacktrace)

...

06-23 08:07:04.527 [ForkJoinPool-1-worker-1] INFO ProxyLeakTask - Previously reported leaked connection org.postgresql.jdbc.PgConnection@58f866df on thread ForkJoinPool-1-worker-1 was returned to the pool (unleaked)

When I redeploy the application, it starts up perfectly, and other instances of this application work correctly, so I have ruled out issues on network and RDS side.

My questions:

- Is connection leaks the source of problem? As they are

unleaked, shouldn't Hikari be able to reclaim the connections and create new ones? Is there a way to confirm the root cause? - The leaks don't happen often, but when they do, they start happening for several requests until the app becomes unresponsive. Is there a way to find out why the requests start taking more than 20 seconds?

- What config changes can help with more diagnosis and further, to fix the problem?

Thanks.