Some of my services emit log messages in JSON format, and the fluentd configuration below parses those properly. However, it discards the logs from all other components whose message field is not valid JSON.

    <source>
      @type tail
      @id in_tail_container_logs
      path /var/log/containers/*.log
      pos_file /var/log/fluentd-containers.log.pos
      tag "#{ENV['FLUENT_CONTAINER_TAIL_TAG'] || 'kubernetes.*'}"
      exclude_path "#{ENV['FLUENT_CONTAINER_TAIL_EXCLUDE_PATH'] || use_default}"
      read_from_head true
      # https://github.com/fluent/fluentd-kubernetes-daemonset/issues/434#issuecomment-752813739
      #<parse>
      #  @type "#{ENV['FLUENT_CONTAINER_TAIL_PARSER_TYPE'] || 'json'}"
      #  time_format %Y-%m-%dT%H:%M:%S.%NZ
      #</parse>
      # https://github.com/fluent/fluentd-kubernetes-daemonset/issues/434#issuecomment-831801690
      <parse>
        @type cri
        # The nested parser parses nested fields properly (e.g. a message in JSON),
        # but if the message is not JSON, the record is lost.
        <parse>
          @type json
        </parse>
      </parse>
      #emit_invalid_record_to_error # when nested logging fails, see if we can parse via JSON
      #tag backend.application
    </source>

But all other messages, those that are not valid JSON, are lost:

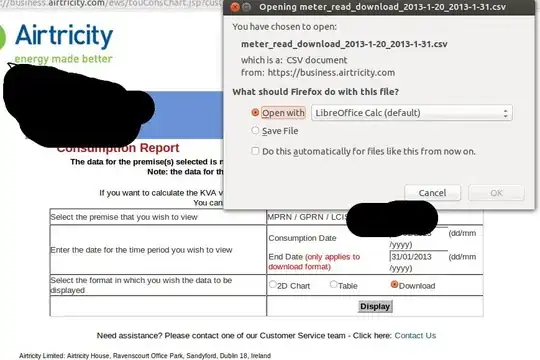

If I comment out the nested parse section inside the cri parser, I get all the logs, but logs whose messages are in JSON format are not parsed any further, in particular the severity field (see the last two lines in the screenshot below).

    <parse>
      @type cri
    </parse>

To work around this, I tried to use the @ERROR label. If nested parsing fails for logs whose message is not JSON, I still need to see the pod name, the other details, and the message as plain text in Kibana. However, with the config below, only logs whose message is valid JSON are parsed:

    <source>
      @type tail
      @id in_tail_container_logs
      path /var/log/containers/*.log
      pos_file /var/log/fluentd-containers.log.pos
      tag "#{ENV['FLUENT_CONTAINER_TAIL_TAG'] || 'kubernetes.*'}"
      exclude_path "#{ENV['FLUENT_CONTAINER_TAIL_EXCLUDE_PATH'] || use_default}"
      read_from_head true
      # https://github.com/fluent/fluentd-kubernetes-daemonset/issues/434#issuecomment-752813739
      #<parse>
      #  @type "#{ENV['FLUENT_CONTAINER_TAIL_PARSER_TYPE'] || 'json'}"
      #  time_format %Y-%m-%dT%H:%M:%S.%NZ
      #</parse>
      # https://github.com/fluent/fluentd-kubernetes-daemonset/issues/434#issuecomment-831801690
      <parse>
        @type cri
        # The nested parser parses nested fields properly (e.g. a message in JSON),
        # but if the message is not JSON, the record is lost.
        <parse>
          @type json
        </parse>
      </parse>
      #emit_invalid_record_to_error # when nested logging fails, see if we can parse via JSON
      #tag backend.application
    </source>

    # When nested parsing fails, this label is not working
    <label @ERROR>
      <filter **>
        @type parser
        key_name message
        <parse>
          @type none
        </parse>
      </filter>
      # Ignore logs from this container
      <match kubernetes.var.log.containers.elasticsearch-kibana-**>
        @type null
      </match>
    </label>

How do I get logs whose messages are JSON parsed, and logs whose messages are plain text passed through as is, without losing either?

The config is here (last three commits): https://github.com/alexcpn/grpc_templates.git
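
To make the behaviour asked for in the question concrete, it can be sketched outside fluentd as follows. This is only an illustration in Python, not fluentd code and not a fix for the config above: the function name `parse_message` is hypothetical, and treating non-object JSON values (such as a bare number) as plain text is an assumption.

```python
import json


def parse_message(message):
    """Return the parsed fields if message is a JSON object, else keep it as text.

    Hypothetical helper: it only illustrates the "try JSON, fall back to the
    raw text" behaviour, so that no record is dropped.
    """
    try:
        parsed = json.loads(message)
    except (TypeError, ValueError):
        # Not valid JSON: keep the original text instead of losing the record.
        return {"message": message}
    if isinstance(parsed, dict):
        # Valid JSON object: expose its fields (e.g. severity) directly.
        return parsed
    # Valid JSON but not an object (assumption: treat it as plain text).
    return {"message": message}


if __name__ == "__main__":
    print(parse_message('{"severity": "INFO", "message": "started"}'))
    print(parse_message("plain text line"))
```

In the first call the severity field becomes available as its own key. In the second, the text is kept under the message key, which is the outcome wanted for the non-JSON container logs.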